Gao Tianci

Enhancing Sample Efficiency and Exploration in Reinforcement Learning through the Integration of Diffusion Models and Proximal Policy Optimization

Sep 02, 2024

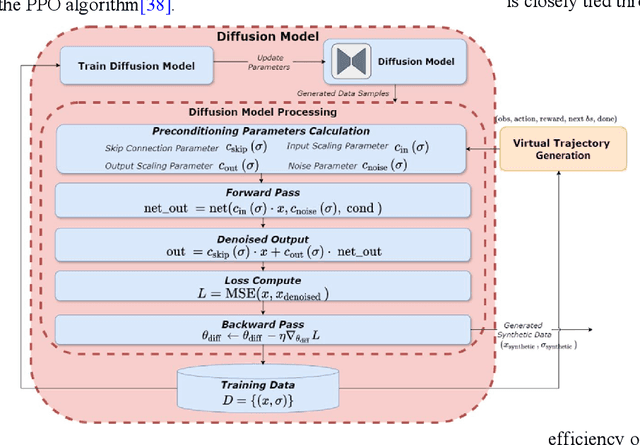

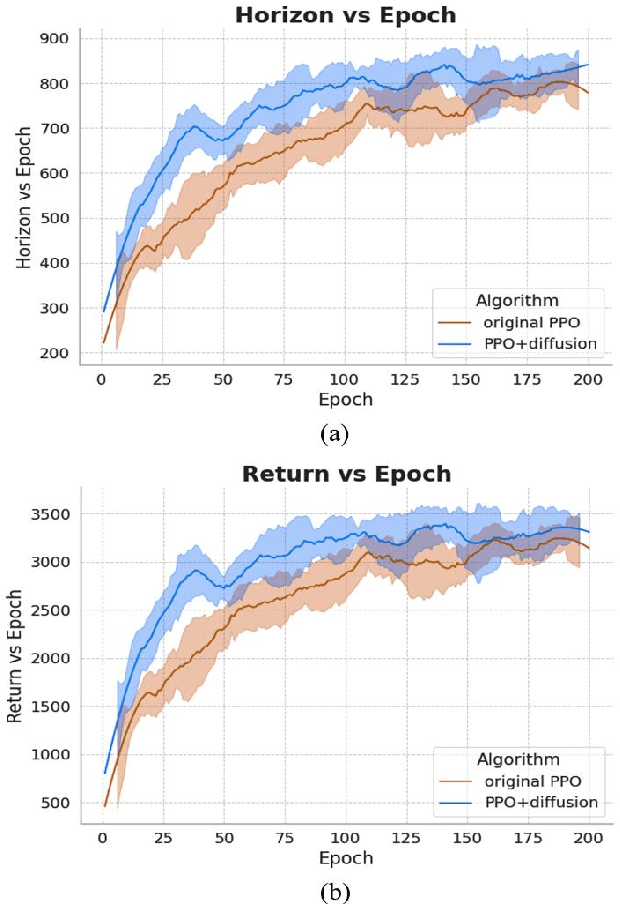

Abstract: Recent advancements in reinforcement learning (RL) have been fueled by large-scale data and deep neural networks, particularly for high-dimensional and complex tasks. Online RL methods like Proximal Policy Optimization (PPO) are effective in dynamic scenarios but require substantial real-time data, posing challenges in resource-constrained or slow simulation environments. Offline RL addresses this by pre-learning policies from large datasets, though its success depends on the quality and diversity of the data. This work proposes a framework that enhances PPO algorithms by incorporating a diffusion model to generate high-quality virtual trajectories for offline datasets. This approach improves exploration and sample efficiency, leading to significant gains in cumulative rewards, convergence speed, and strategy stability in complex tasks. Our contributions are threefold: we explore the potential of diffusion models in RL, particularly for offline datasets, extend the application of online RL to offline environments, and experimentally validate the performance improvements of PPO with diffusion models. These findings provide new insights and methods for applying RL to high-dimensional, complex tasks. Finally, we open-source our code at https://github.com/TianciGao/DiffPPO

Transformer-XL for Long Sequence Tasks in Robotic Learning from Demonstration

May 24, 2024

Abstract: This paper presents an innovative application of Transformer-XL for long sequence tasks in robotic learning from demonstrations (LfD). The proposed framework effectively integrates multi-modal sensor inputs, including RGB-D images, LiDAR, and tactile sensors, to construct a comprehensive feature vector. By leveraging the advanced capabilities of Transformer-XL, particularly its attention mechanism and position encoding, our approach can handle the inherent complexities and long-term dependencies of multi-modal sensory data. The results of an extensive empirical evaluation demonstrate significant improvements in task success rates, accuracy, and computational efficiency compared to conventional methods such as Long Short-Term Memory (LSTM) networks and Convolutional Neural Networks (CNNs). The findings indicate that the Transformer-XL-based framework not only enhances the robot's perception and decision-making abilities but also provides a robust foundation for future advancements in robotic learning from demonstrations.
