Gang Dong

SGED: A Benchmark dataset for Performance Evaluation of Spiking Gesture Emotion Recognition

Apr 28, 2023

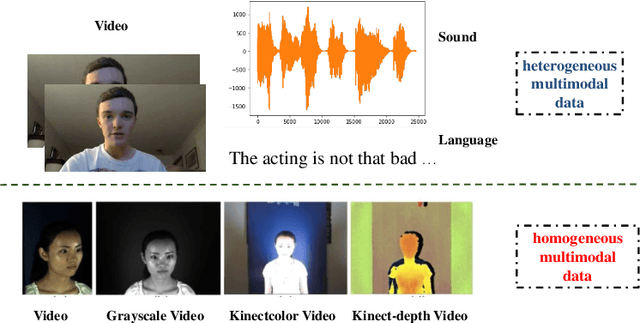

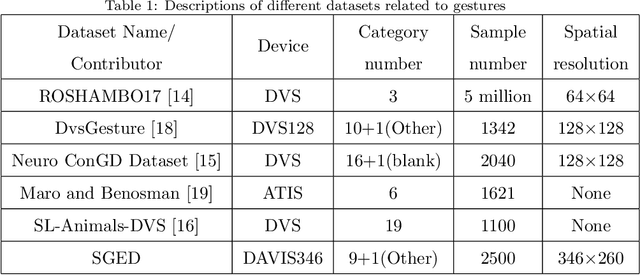

Abstract: In affective computing, researchers have improved the performance of models and algorithms by exploiting the complementarity of multimodal information. However, as new modalities keep emerging, dataset development has not kept pace with advances in sensing equipment. Collecting and studying multimodal data is complex but important work. To help fill this gap in the data available to the community, we collected and labeled a new homogeneous multimodal gesture emotion recognition dataset, based on an analysis of existing datasets. This dataset addresses the shortage of homogeneous multimodal data and opens a new research direction for emotion recognition. We also propose a pseudo dual-flow network based on this dataset and use it to verify the dataset's application potential in the affective computing community. The experimental results demonstrate that it is feasible to use traditional visual information and spiking visual information from homogeneous multimodal data for visual emotion recognition. The dataset is available at https://github.com/201528014227051/SGED
