Gabriel Moraes Barros

Using Soft Actor-Critic for Low-Level UAV Control

Oct 05, 2020

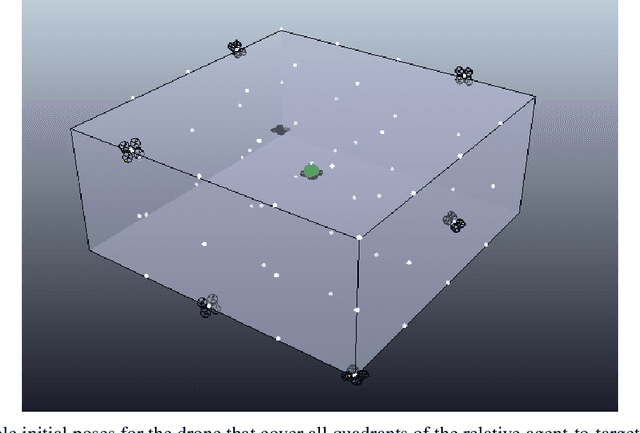

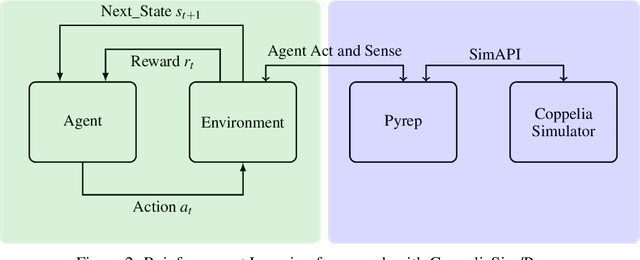

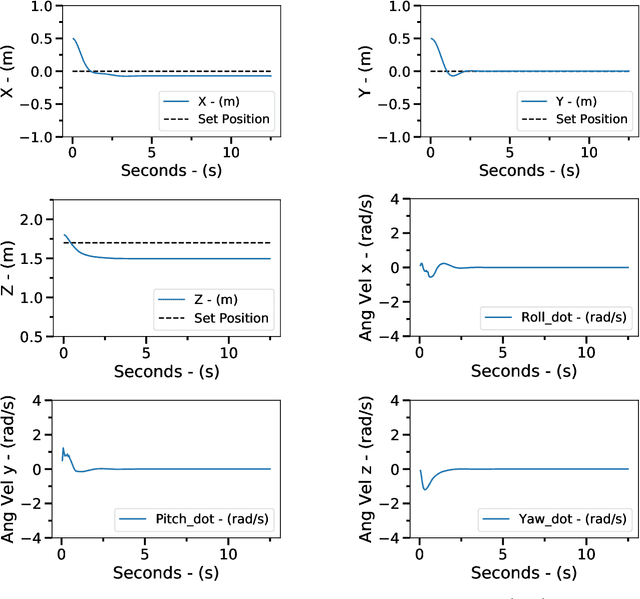

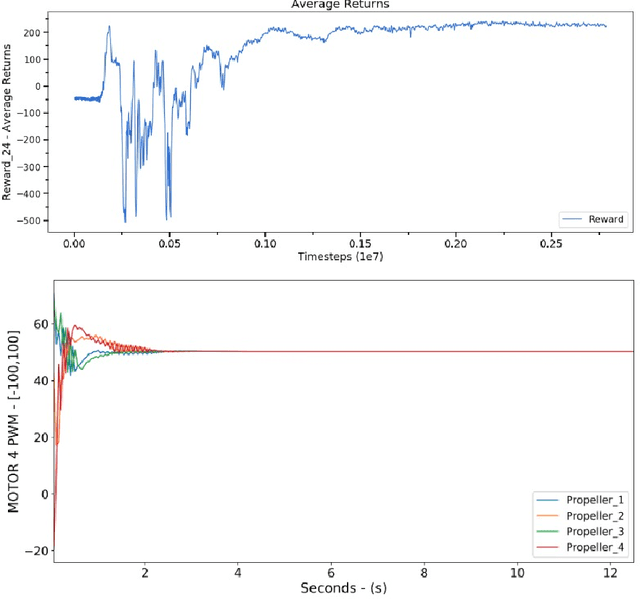

Abstract: Unmanned Aerial Vehicles (UAVs), or drones, have recently been used in several civil application domains, from organ delivery to remote locations to wireless network coverage. These platforms, however, are naturally unstable systems for which many different control approaches have been proposed. Generally based on classic and modern control, these algorithms require knowledge of the robot's dynamics. Recently, however, model-free reinforcement learning has been successfully used to control drones without any prior knowledge of the robot model. In this work, we present a framework to train the Soft Actor-Critic (SAC) algorithm for low-level control of a quadrotor in a go-to-target task. All experiments were conducted in simulation. The experiments show that SAC not only learns a robust policy but also copes with unseen scenarios. Videos of the simulations are available at https://www.youtube.com/watch?v=9z8vGs0Ri5g and the code at https://github.com/larocs/SAC_uav.
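
For context, SAC is usually described as optimizing a maximum-entropy objective. The form below is the standard one from the SAC literature, not something stated in this abstract; the symbols ($\rho_\pi$ for the state-action distribution induced by the policy $\pi$, $r$ for the reward, $\alpha$ for the entropy temperature) are introduced here for illustration only, and the reward used in the go-to-target task is not specified in this text.

$$
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}\left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right]
$$

The entropy term $\mathcal{H}$ rewards policies that stay stochastic, which is generally credited with encouraging exploration during training.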
