Gabriel Luque

Energy and Quality of Surrogate-Assisted Search Algorithms: a First Analysis

Aug 11, 2025

Abstract:Solving complex real problems often demands advanced algorithms, and then continuous improvements in the internal operations of a search technique are needed. Hybrid algorithms, parallel techniques, theoretical advances, and much more are needed to transform a general search algorithm into an efficient, useful one in practice. In this paper, we study how surrogates are helping metaheuristics from an important and understudied point of view: their energy profile. Even if surrogates are a great idea for substituting a time-demanding complex fitness function, the energy profile, general efficiency, and accuracy of the resulting surrogate-assisted metaheuristic still need considerable research. In this work, we make a first step in analyzing particle swarm optimization in different versions (including pre-trained and retrained neural networks as surrogates) for its energy profile (for both processor and memory), plus a further study on the surrogate accuracy to properly drive the search towards an acceptable solution. Our conclusions shed new light on this topic and could be understood as the first step towards a methodology for assessing surrogate-assisted algorithms not only accounting for time or numerical efficiency but also for energy and surrogate accuracy for a better, more holistic characterization of optimization and learning techniques.

* 8 pages, 8 figures, 2024 IEEE Congress on Evolutionary Computation (CEC)

Optimising Communication Overhead in Federated Learning Using NSGA-II

Apr 01, 2022

Abstract:Federated learning is a training paradigm according to which a server-based model is cooperatively trained using local models running on edge devices and ensuring data privacy. These devices exchange information that induces a substantial communication load, which jeopardises the functioning efficiency. The difficulty of reducing this overhead lies in achieving this without decreasing the model's efficiency (contradictory relation). To do so, many works investigated the compression of the pre/mid/post-trained models and the communication rounds, separately, although they jointly contribute to the communication overload. Our work aims at optimising communication overhead in federated learning by (I) modelling it as a multi-objective problem and (II) applying a multi-objective optimization algorithm (NSGA-II) to solve it. To the best of the authors' knowledge, this is the first work that (I) explores the add-in that evolutionary computation could bring for solving such a problem, and (II) considers both the neuron and devices features together. We perform the experimentation by simulating a server/client architecture with 4 slaves. We investigate both convolutional and fully-connected neural networks with 12 and 3 layers, 887,530 and 33,400 weights, respectively. We conducted the validation on the MNIST dataset containing 70,000 images. The experiments have shown that our proposal could reduce communication by 99% and maintain an accuracy equal to the one obtained by the FedAvg Algorithm that uses 100% of communications.

A Fresh Approach to Evaluate Performance in Distributed Parallel Genetic Algorithms

Jun 18, 2021

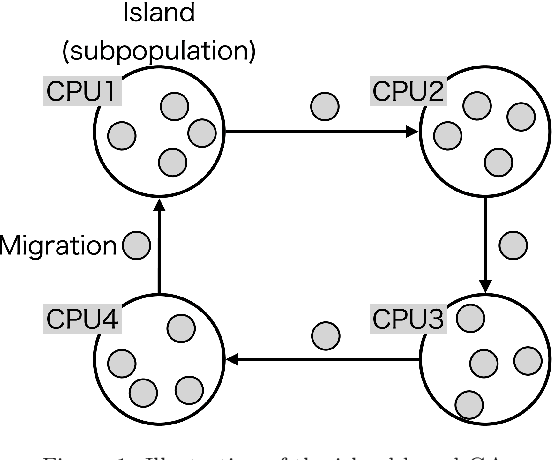

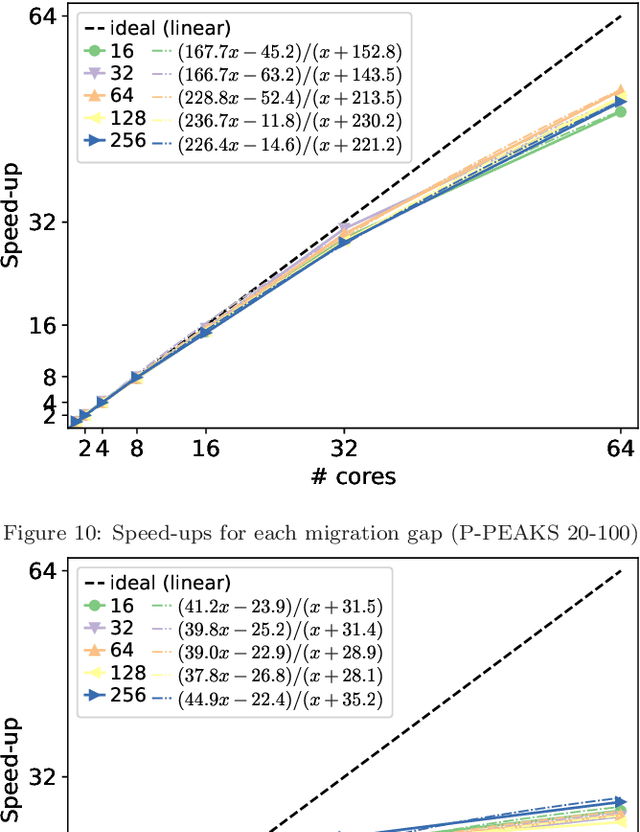

Abstract:This work proposes a novel approach to evaluate and analyze the behavior of multi-population parallel genetic algorithms (PGAs) when running on a cluster of multi-core processors. In particular, we deeply study their numerical and computational behavior by proposing a mathematical model representing the observed performance curves. In them, we discuss the emerging mathematical descriptions of PGA performance instead of, e.g., individual isolated results subject to visual inspection, for a better understanding of the effects of the number of cores used (scalability), their migration policy (the migration gap, in this paper), and the features of the solved problem (type of encoding and problem size). The conclusions based on the real figures and the numerical models fitting them represent a fresh way of understanding their speed-up, running time, and numerical effort, allowing a comparison based on a few meaningful numeric parameters. This represents a set of conclusions beyond the usual textual lessons found in past works on PGAs. It can be used as an estimation tool for the future performance of the algorithms and a way of finding out their limitations.
