Görkem Algan

Semantic Segmentation for Thermal Images: A Comparative Survey

May 26, 2022

Abstract: Semantic segmentation is a challenging task because it requires far more low-level spatial information about the image than other computer vision problems. The accuracy of pixel-level classification can be affected by many factors, such as imaging limitations and the ambiguity of object boundaries in an image. Conventional methods apply deep neural networks (DNNs) to three-channel RGB images captured in the visible spectrum. Thermal images can contribute significantly to segmentation, since thermal imaging cameras capture details regardless of weather and illumination conditions. Using the infrared spectrum in semantic segmentation has many real-world use cases, such as autonomous driving, medical imaging, agriculture, and the defense industry. Because of this wide range of use cases, designing accurate semantic segmentation algorithms with the help of the infrared spectrum is an important challenge. One approach is to use both visible and infrared spectrum images as inputs. These methods can achieve higher accuracy thanks to the enriched input information, at the cost of extra effort for the alignment and processing of multiple inputs. Another approach is to use only thermal images, which lowers hardware cost for smaller use cases. Even though there are multiple surveys on semantic segmentation methods, the literature lacks a comprehensive survey centered explicitly on semantic segmentation using the infrared spectrum. This work aims to fill this gap by presenting algorithms from the literature and categorizing them by their input images.

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels

Mar 19, 2021

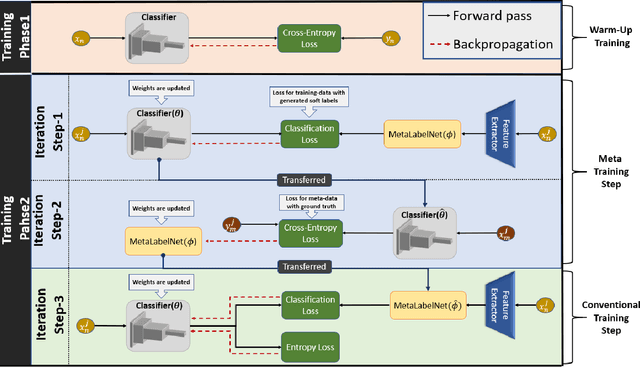

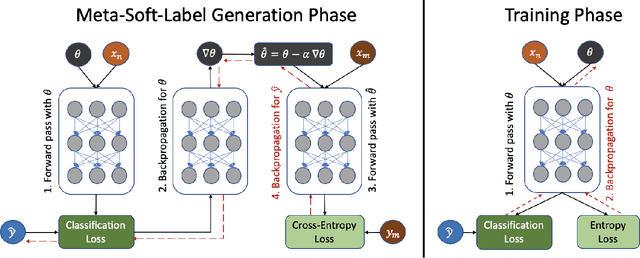

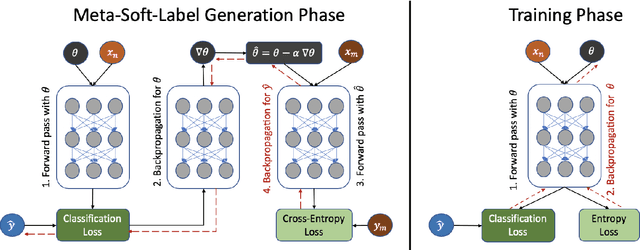

Abstract: Real-world datasets commonly have noisy labels, which negatively affect the performance of deep neural networks (DNNs). To address this problem, we propose a label-noise-robust learning algorithm in which the base classifier is trained on soft-labels that are produced according to a meta-objective. In each iteration, before conventional training, the meta-objective reshapes the loss function by changing the soft-labels, so that the resulting gradient updates lead to model parameters with minimum loss on meta-data. Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single-layer perceptron (SLP) network, which is called MetaLabelNet. The base classifier is then trained on these generated soft-labels. These iterations are repeated for each batch of training data. Our algorithm uses a small amount of clean data as meta-data, which can be obtained effortlessly in many cases. We perform extensive experiments on benchmark datasets with both synthetic and real-world noise. Results show that our approach outperforms existing baselines.
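The forward path described in this abstract (features mapped to a soft-label by a single-layer perceptron, then a loss computed against that soft-label) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the weights, and the toy inputs are all hypothetical, and the meta-objective step that would actually learn the perceptron weights is omitted.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def slp_soft_label(features, weights, bias):
    """Single-layer perceptron: one linear map followed by softmax.

    weights has one row per class; the output is a probability
    vector that serves as the soft-label for this instance.
    """
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)

def soft_cross_entropy(pred_probs, soft_label):
    """Cross-entropy of the classifier prediction against a soft target."""
    return -sum(t * math.log(p)
                for t, p in zip(soft_label, pred_probs) if t > 0)

# Hypothetical toy values: 2 extracted features, 3 classes.
features = [1.0, -0.5]
weights = [[0.8, 0.1], [-0.3, 0.4], [0.0, 0.0]]  # assumed, not learned here
bias = [0.0, 0.0, 0.0]

soft_label = slp_soft_label(features, weights, bias)
pred = softmax([2.0, 0.5, 0.1])  # stand-in for the base classifier output
loss = soft_cross_entropy(pred, soft_label)
```

With a one-hot target, `soft_cross_entropy` reduces to the usual negative log-likelihood of the labeled class, so the soft-label loss is a direct generalization of conventional training.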

Deep Learning from Small Amount of Medical Data with Noisy Labels: A Meta-Learning Approach

Oct 14, 2020

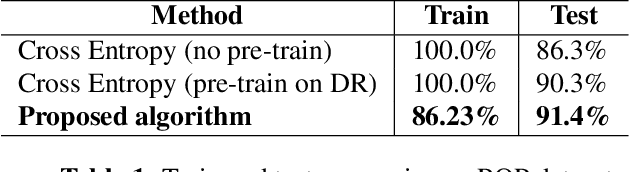

Abstract: Computer vision systems have recently made a big leap thanks to deep neural networks. However, these systems require large, correctly labeled datasets in order to be trained properly, and such datasets are very difficult to obtain for medical applications. The two main reasons for label noise in medical applications are the high complexity of the data and conflicting opinions among experts. Moreover, medical imaging datasets are commonly tiny, which makes each sample very important for learning. As a result, if not handled properly, label noise significantly degrades performance. Therefore, we propose a label-noise-robust learning algorithm that makes use of the meta-learning paradigm. We tested our proposed solution on a retinopathy of prematurity (ROP) dataset with a very high label noise of 68%. Our results show that the proposed algorithm significantly improves the classification algorithm's performance in the presence of noisy labels.

Meta Soft Label Generation for Noisy Labels

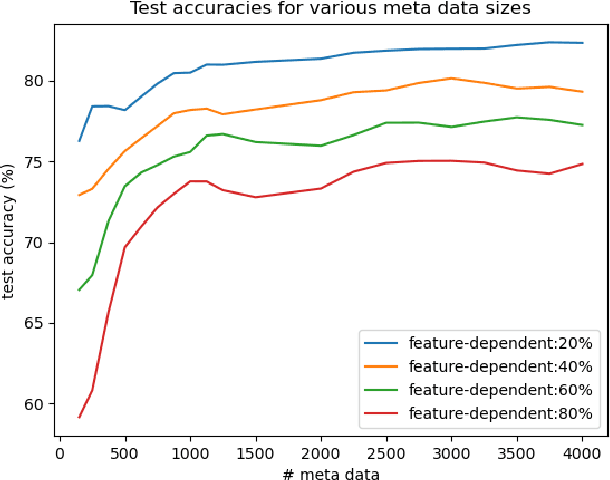

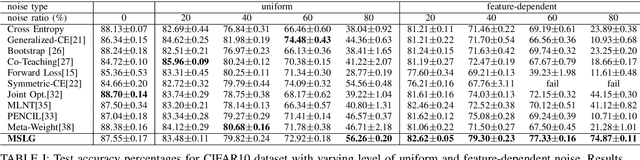

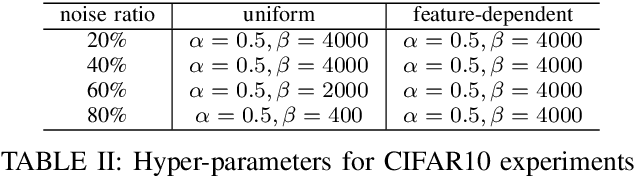

Jul 11, 2020

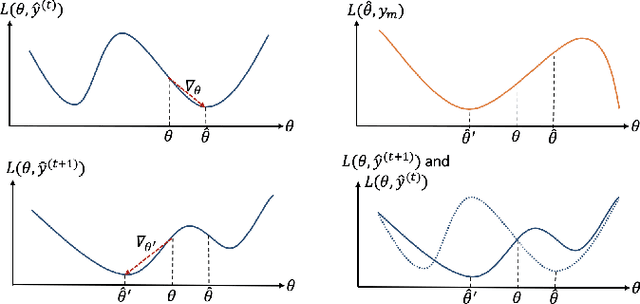

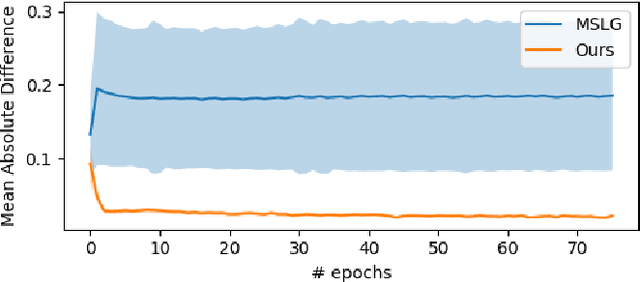

Abstract: The existence of noisy labels in a dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which jointly generates soft labels using meta-learning techniques and learns DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate the optimal label distribution by checking gradient directions on both noisy training data and noise-free meta-data. To iteratively update the soft labels, a meta-gradient descent step is performed on the estimated labels so as to minimize the loss on noise-free meta samples. In each iteration, the base classifier is trained on the estimated meta labels. MSLG is model-agnostic and can easily be added on top of any existing model. We performed extensive experiments on the CIFAR10, Clothing1M and Food101N datasets. Results show that our approach outperforms other state-of-the-art methods by a large margin.

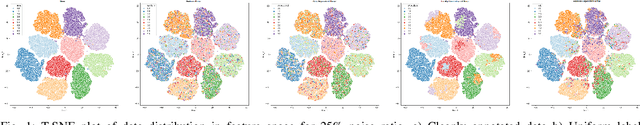

Label Noise Types and Their Effects on Deep Learning

Mar 23, 2020

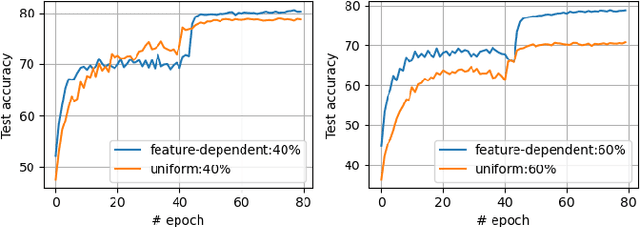

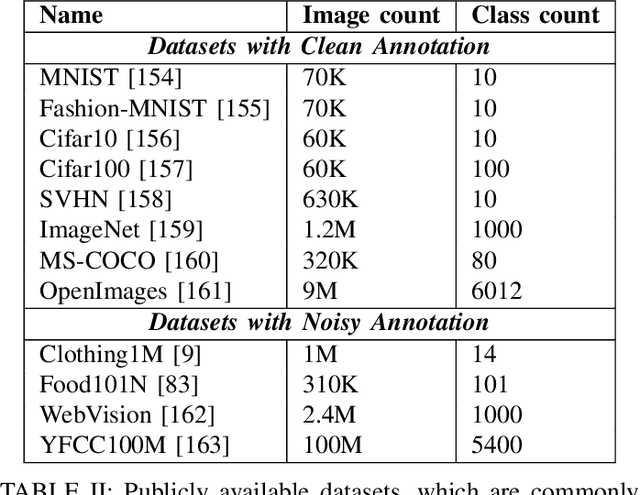

Abstract: The recent success of deep learning is mostly due to the availability of big datasets with clean annotations. However, gathering a cleanly annotated dataset is not always feasible due to practical challenges. As a result, label noise is a common problem in datasets, and numerous methods for training deep neural networks in the presence of noisy labels have been proposed in the literature. These methods commonly use benchmark datasets with synthetic label noise on the training set. However, there are multiple types of label noise, and each has its own characteristic impact on learning. Since each work generates a different kind of label noise, it is difficult to test and compare those algorithms fairly. In this work, we provide a detailed analysis of the effects of different kinds of label noise on learning. Moreover, we propose a generic framework to generate feature-dependent label noise, which we show to be the most challenging case for learning. Our proposed method aims to emphasize similarities among data instances by sparsely distributing them in the feature domain. With this approach, samples that are more likely to be mislabeled are detected from their softmax probabilities, and their labels are flipped to the corresponding class. The proposed method can be applied to any clean dataset to synthesize feature-dependent noisy labels. To make it easier for other researchers to test their algorithms with noisy labels, we share corrupted labels for the most commonly used benchmark datasets. Our code and generated noisy synthetic labels are available online.
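The flipping step described above (detect the samples most likely to be mislabeled from their softmax probabilities, then flip each to the class it is confused with) might look roughly like the sketch below. It is a simplified illustration under stated assumptions, not the paper's released code: the function name, the margin-based ranking rule, and the toy probabilities are hypothetical, and the probabilities are assumed to come from some already-trained network.

```python
def flip_uncertain_labels(probs, labels, noise_ratio):
    """Flip the labels of the samples whose softmax output most favors a wrong class.

    probs:       per-sample softmax probabilities (list of lists)
    labels:      clean integer labels
    noise_ratio: fraction of samples to corrupt
    """
    candidates = []
    for idx, (p, y) in enumerate(zip(probs, labels)):
        # Most probable class other than the clean label.
        wrong = max((c for c in range(len(p)) if c != y), key=lambda c: p[c])
        # Assumed ranking rule: a small (or negative) margin means the
        # sample is easily confused with the wrong class.
        candidates.append((p[y] - p[wrong], idx, wrong))
    candidates.sort()

    n_flip = int(round(noise_ratio * len(labels)))
    noisy = list(labels)
    for _, idx, wrong in candidates[:n_flip]:
        noisy[idx] = wrong
    return noisy

# Hypothetical softmax outputs for 4 samples over 3 classes.
probs = [
    [0.90, 0.05, 0.05],
    [0.40, 0.45, 0.15],
    [0.10, 0.80, 0.10],
    [0.34, 0.33, 0.33],
]
labels = [0, 0, 1, 2]
noisy_labels = flip_uncertain_labels(probs, labels, noise_ratio=0.5)
```

Because the flipped class depends on each sample's own probability vector, the resulting noise is feature-dependent rather than uniform: confidently classified samples keep their labels, while ambiguous ones are corrupted toward their nearest competitor class.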

Image Classification with Deep Learning in the Presence of Noisy Labels: A Survey

Dec 11, 2019

Abstract: Image classification systems have recently made a big leap with the advancement of deep neural networks. However, these systems require an excessive amount of labeled data in order to be trained properly. This is not always feasible due to several factors, such as the cost of the labeling process or the difficulty of correctly classifying data even for experts. Because of these practical challenges, label noise is a common problem in datasets, and numerous methods for training deep networks with label noise have been proposed in the literature. Deep networks are known to be relatively robust to label noise; however, their tendency to overfit data makes them vulnerable to memorizing even completely random noise. Therefore, it is crucial to consider the existence of label noise and to develop algorithms that mitigate its negative effects in order to train deep neural networks efficiently. Even though an extensive survey of machine learning techniques under label noise exists, the literature lacks a comprehensive survey of methodologies centered specifically on deep learning in the presence of noisy labels. This paper aims to present these algorithms while categorizing them according to the similarity of their proposed methodologies.
