Fumio Hashimoto

List-Mode PET Image Reconstruction Using Dykstra-Like Splitting

Mar 01, 2024

Abstract:To make block iterative methods converge in image reconstruction for positron emission tomography (PET), careful control of relaxation parameters is required, which is a challenging task. The automatic determination of relaxation parameters for list-mode reconstructions also remains challenging. Therefore, an approach other than controlling relaxation parameters is desirable for list-mode PET reconstruction. In this study, we propose a list-mode maximum likelihood Dykstra-like splitting PET reconstruction (LM-MLDS). LM-MLDS makes the list-mode block iterative method converge by adding the distance from an initial image as a penalty term to the objective function. LM-MLDS takes a two-step approach because its performance depends on the quality of the initial image. The first step uses a uniform image as the initial image, and the second step uses the image reconstructed after one main iteration as the initial image. We evaluated LM-MLDS using simulation and clinical data. LM-MLDS provided a higher peak signal-to-noise ratio than the other block iterative methods and suppressed the oscillation of tradeoff curves between noise and contrast. In a clinical study, LM-MLDS removed the false hotspots at the edge of the axial field of view and improved the image quality of slices covering the top of the head to the cerebellum. LM-MLDS showed different noise properties from the other methods due to Gaussian denoising induced by the proximity operator. List-mode proximal splitting PET reconstruction is useful not only for optimizing nondifferentiable functions such as total variation but also for making block iterative methods converge without controlling relaxation parameters.
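As a rough illustration of the splitting idea named in the title, the generic Dykstra-like proximal iteration computes the minimizer of $f(x) + g(x) + \frac{1}{2}\|x - r\|^2$ using only the proximity operators of $f$ and $g$. The sketch below is a toy example, not the LM-MLDS algorithm: the choice of $f$ (a box constraint), $g$ (an $\ell_1$ penalty), and all function names are assumptions made for illustration, and the quadratic term only loosely mirrors the distance-from-initial-image penalty described in the abstract.

```python
import numpy as np

def soft_threshold(v, lam):
    """Proximity operator of lam * ||x||_1 (toy choice for g)."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def clip_box(v, lo=0.0, hi=1.0):
    """Proximity operator of the indicator of the box [lo, hi]^n (toy choice for f)."""
    return np.clip(v, lo, hi)

def dykstra_like_prox(r, prox_f, prox_g, n_iter=50):
    """Approximate argmin_x f(x) + g(x) + 0.5 * ||x - r||^2
    with the generic Dykstra-like proximal iteration."""
    x = np.asarray(r, dtype=float).copy()
    p = np.zeros_like(x)  # correction term associated with g
    q = np.zeros_like(x)  # correction term associated with f
    for _ in range(n_iter):
        y = prox_g(x + p)
        p = x + p - y
        x = prox_f(y + q)
        q = y + q - x
    return x

# Hypothetical reference point r; for this separable toy problem the
# exact minimizer is clip(r - lam, 0, 1), which the iteration recovers.
r = np.array([2.0, 0.8, 0.3, -1.0])
lam = 0.5
x_hat = dykstra_like_prox(r, clip_box, lambda v: soft_threshold(v, lam))
```

The two correction terms `p` and `q` are what distinguish this scheme from simply alternating the two proximity operators, which would in general converge to a different point.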

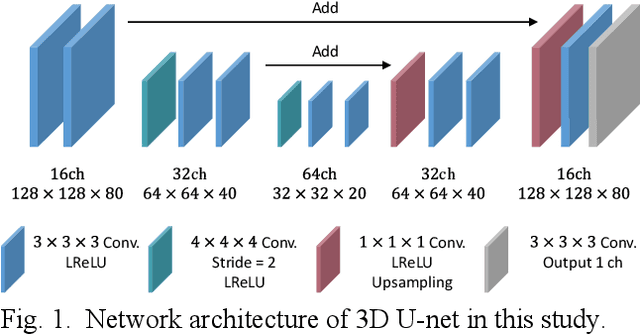

ReconU-Net: a direct PET image reconstruction using U-Net architecture with back projection-induced skip connection

Dec 05, 2023

Abstract:[Objective] This study aims to introduce a novel back projection-induced U-Net-shaped architecture, called ReconU-Net, for deep learning-based direct positron emission tomography (PET) image reconstruction. Additionally, our objective is to analyze the behavior of direct PET image reconstruction and gain deeper insights by comparing the proposed ReconU-Net architecture with other encoder-decoder architectures without skip connections. [Approach] The proposed ReconU-Net architecture uniquely integrates the physical model of the back projection operation into the skip connection. This distinctive feature facilitates the effective transfer of intrinsic spatial information from the input sinogram to the reconstructed image via an embedded physical model. The proposed ReconU-Net was trained using Monte Carlo simulation data from the Brainweb phantom and tested on both simulated and real Hoffman brain phantom data. [Main results] The proposed ReconU-Net method generated a reconstructed image with a more accurate structure compared to other deep learning-based direct reconstruction methods. Further analysis showed that the proposed ReconU-Net architecture has the ability to transfer features of multiple resolutions, especially non-abstract high-resolution information, through skip connections. Despite limited training on simulated data, the proposed ReconU-Net successfully reconstructed the real Hoffman brain phantom, unlike other deep learning-based direct reconstruction methods, which failed to produce a reconstructed image. [Significance] The proposed ReconU-Net can improve the fidelity of direct PET image reconstruction, even when dealing with small training datasets, by leveraging the synergistic relationship between data-driven modeling and the physics model of the imaging process.

Self-Supervised Pre-Training for Deep Image Prior-Based Robust PET Image Denoising

Feb 27, 2023

Abstract:Deep image prior (DIP) has been successfully applied to positron emission tomography (PET) image restoration, representing an implicit prior using only a convolutional neural network architecture without any training dataset, whereas the general supervised approach requires massive numbers of low- and high-quality PET image pairs. To answer the increased need for PET imaging with DIP, it is indispensable to improve the performance of the underlying DIP itself. Here, we propose a self-supervised pre-training model to improve the DIP-based PET image denoising performance. Our proposed pre-training model acquires transferable and generalizable visual representations from only unlabeled PET images by restoring various degraded PET images in a self-supervised approach. We evaluated the proposed method using clinical brain PET data with various radioactive tracers ($^{18}$F-florbetapir, $^{11}$C-Pittsburgh compound-B, $^{18}$F-fluoro-2-deoxy-D-glucose, and $^{15}$O-CO$_{2}$) acquired from different PET scanners. The proposed method using the self-supervised pre-training model achieved robust and state-of-the-art denoising performance while retaining spatial details and quantification accuracy compared to other unsupervised methods and pre-training models. These results suggest that the proposed method may be particularly effective for rare diseases and probes and may help reduce the scan time or the radiotracer dose without affecting patients.

Fully 3D Implementation of the End-to-end Deep Image Prior-based PET Image Reconstruction Using Block Iterative Algorithm

Dec 22, 2022

Abstract:Deep image prior (DIP) has recently attracted attention owing to its unsupervised positron emission tomography (PET) image reconstruction, which does not require any prior training dataset. In this paper, we present the first attempt to implement an end-to-end DIP-based fully 3D PET image reconstruction method that incorporates a forward-projection model into a loss function. To implement a practical fully 3D PET image reconstruction, which could not previously be performed because of graphics processing unit memory limitations, we modify the DIP optimization to block iteration and sequentially learn an ordered sequence of block sinograms. Furthermore, a relative difference penalty (RDP) term was added to the loss function to enhance the quantitative PET image accuracy. We evaluated our proposed method using Monte Carlo simulation with [$^{18}$F]FDG PET data of a human brain and a preclinical study on monkey brain [$^{18}$F]FDG PET data. The proposed method was compared with the maximum-likelihood expectation maximization (EM), maximum a posteriori EM with RDP, and hybrid DIP-based PET reconstruction methods. The simulation results showed that the proposed method improved the PET image quality by reducing statistical noise while preserving the contrast of brain structures and an inserted tumor compared with other algorithms. In the preclinical experiment, finer structures and better contrast recovery were obtained by the proposed method. This indicated that the proposed method can produce high-quality images without a prior training dataset. Thus, the proposed method is a key enabling technology for the straightforward and practical implementation of end-to-end DIP-based fully 3D PET image reconstruction.
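For readers unfamiliar with the relative difference penalty, a common form is $R(x) = \sum_{j}\sum_{k \in N_j} \frac{(x_j - x_k)^2}{x_j + x_k + \gamma |x_j - x_k|}$, where $N_j$ is the neighborhood of voxel $j$ and $\gamma$ controls edge preservation. The sketch below evaluates a simplified 1D version that counts each adjacent pair once; the function name, the 1D neighborhood, the default `gamma`, and the small `eps` guard are assumptions for illustration, and the abstract does not state which variant or weighting the paper uses.

```python
import numpy as np

def relative_difference_penalty(x, gamma=2.0, eps=1e-12):
    """Simplified 1D relative difference penalty over adjacent pairs.

    x is assumed non-negative (as PET activity images are); eps only
    guards against division by zero where neighboring values are both 0.
    """
    x = np.asarray(x, dtype=float)
    d = x[1:] - x[:-1]   # differences between neighbors
    s = x[1:] + x[:-1]   # sums of neighbors (relative scale)
    return float(np.sum(d ** 2 / (s + gamma * np.abs(d) + eps)))

flat = relative_difference_penalty([3.0, 3.0, 3.0])   # no differences, no penalty
step = relative_difference_penalty([1.0, 3.0])        # 4 / (4 + 2 * 2) = 0.5
scaled = relative_difference_penalty([10.0, 30.0])    # scales linearly with intensity
```

Because the differences are normalized by the local sum, the penalty scales linearly rather than quadratically with image intensity, which is one reason it is often preferred for count-dependent PET data.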

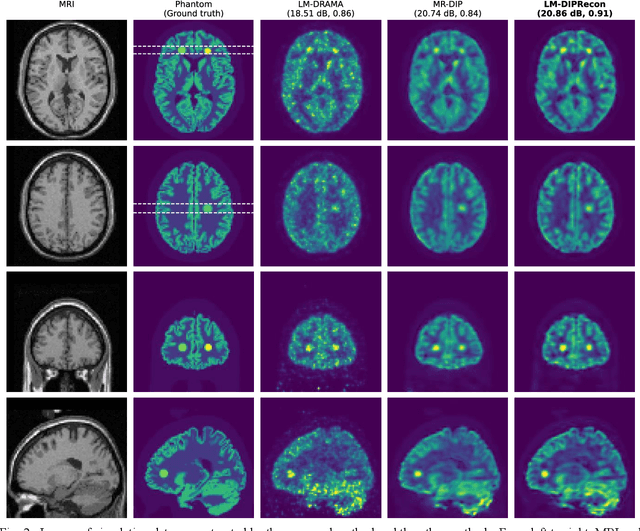

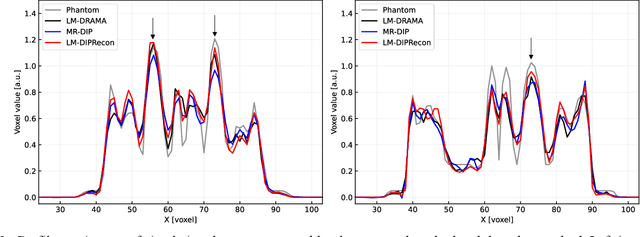

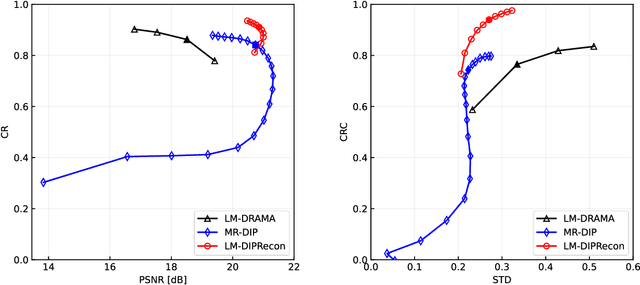

List-Mode PET Image Reconstruction Using Deep Image Prior

Apr 28, 2022

Abstract:List-mode positron emission tomography (PET) image reconstruction is an important tool for PET scanners with many lines-of-response (LORs) and additional information such as time-of-flight and depth-of-interaction. Deep learning is one possible solution to enhance the quality of PET image reconstruction. However, the application of deep learning techniques to list-mode PET image reconstruction has not progressed because list data is a sequence of bit codes and is unsuitable for processing by convolutional neural networks (CNNs). In this study, we propose a novel list-mode PET image reconstruction method using an unsupervised CNN called deep image prior (DIP) and the framework of the alternating direction method of multipliers. The proposed list-mode DIP reconstruction (LM-DIPRecon) method alternates between the regularized list-mode dynamic row action maximum likelihood algorithm (LM-DRAMA) and magnetic resonance imaging conditioned DIP (MR-DIP). We evaluated LM-DIPRecon using both simulation and clinical data, and it achieved sharper images and better tradeoff curves between contrast and noise than LM-DRAMA and MR-DIP. These results indicated that LM-DIPRecon is useful for quantitative PET imaging with limited events. In addition, as list data has finer temporal information than dynamic sinograms, list-mode deep image prior reconstruction is expected to be useful for 4D PET imaging and motion correction.

Anatomical-Guided Attention Enhances Unsupervised PET Image Denoising Performance

Sep 08, 2021

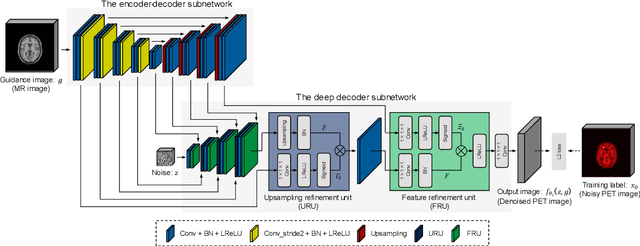

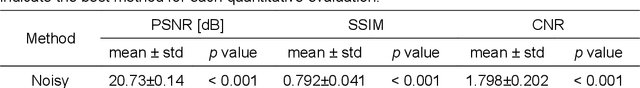

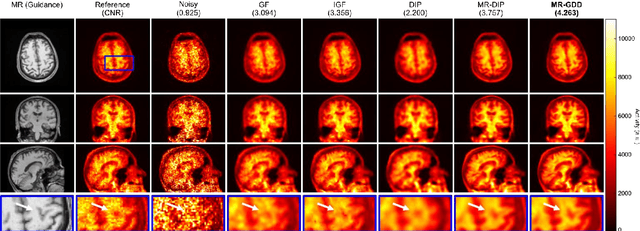

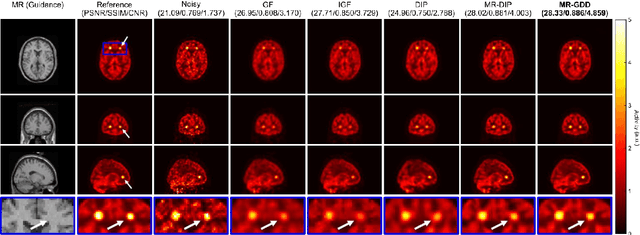

Abstract:Although supervised convolutional neural networks (CNNs) often outperform conventional alternatives for denoising positron emission tomography (PET) images, they require many low- and high-quality reference PET image pairs. Herein, we propose an unsupervised 3D PET image denoising method based on an anatomical information-guided attention mechanism. The proposed magnetic resonance-guided deep decoder (MR-GDD) utilizes the spatial details and semantic features of MR-guidance image more effectively by introducing encoder-decoder and deep decoder subnetworks. Moreover, the specific shapes and patterns of the guidance image do not affect the denoised PET image, because the guidance image is input to the network through an attention gate. In a Monte Carlo simulation of [$^{18}$F]fluoro-2-deoxy-D-glucose (FDG), the proposed method achieved the highest peak signal-to-noise ratio and structural similarity (27.92 $\pm$ 0.44 dB/0.886 $\pm$ 0.007), as compared with Gaussian filtering (26.68 $\pm$ 0.10 dB/0.807 $\pm$ 0.004), image guided filtering (27.40 $\pm$ 0.11 dB/0.849 $\pm$ 0.003), deep image prior (DIP) (24.22 $\pm$ 0.43 dB/0.737 $\pm$ 0.017), and MR-DIP (27.65 $\pm$ 0.42 dB/0.879 $\pm$ 0.007). Furthermore, we experimentally visualized the behavior of the optimization process, which is often unknown in unsupervised CNN-based restoration problems. For preclinical (using [$^{18}$F]FDG and [$^{11}$C]raclopride) and clinical (using [$^{18}$F]florbetapir) studies, the proposed method demonstrates state-of-the-art denoising performance while retaining spatial resolution and quantitative accuracy, despite using a common network architecture for various noisy PET images with 1/10th of the full counts. These results suggest that the proposed MR-GDD can reduce PET scan times and PET tracer doses considerably without impacting patients.

* 30 pages, 12 figures

Direct PET Image Reconstruction Incorporating Deep Image Prior and a Forward Projection Model

Sep 02, 2021

Abstract:Convolutional neural networks (CNNs) have recently achieved remarkable performance in positron emission tomography (PET) image reconstruction. In particular, CNN-based direct PET image reconstruction, which directly generates the reconstructed image from the sinogram, has potential applicability to PET image enhancements because it does not require image reconstruction algorithms, which often produce some artifacts. However, these deep learning-based, direct PET image reconstruction algorithms have the disadvantage that they require a large number of high-quality training datasets. In this study, we propose an unsupervised direct PET image reconstruction method that incorporates a deep image prior framework. Our proposed method incorporates a forward projection model with a loss function to achieve unsupervised direct PET image reconstruction from sinograms. To compare our proposed direct reconstruction method with the filtered back projection (FBP) and maximum likelihood expectation maximization (ML-EM) algorithms, we evaluated using Monte Carlo simulation data of brain [$^{18}$F]FDG PET scans. The results demonstrate that our proposed direct reconstruction quantitatively and qualitatively outperforms the FBP and ML-EM algorithms with respect to peak signal-to-noise ratio and structural similarity index.
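The ML-EM baseline mentioned above follows the standard multiplicative update $x \leftarrow \frac{x}{A^{\top}\mathbf{1}} \odot A^{\top}\!\left(\frac{y}{Ax}\right)$. A minimal sketch on a toy problem is given below; the small dense random matrix standing in for the system matrix `A`, the problem sizes, and the function names are all assumptions for illustration and do not reflect real PET geometry or the paper's implementation.

```python
import numpy as np

def ml_em(A, y, n_iter=50, eps=1e-12):
    """Standard ML-EM iterations for Poisson data y ~ Poisson(A @ x)."""
    sens = A.sum(axis=0)          # sensitivity image, A^T 1
    x = np.ones(A.shape[1])       # uniform, strictly positive start
    for _ in range(n_iter):
        ybar = A @ x              # forward projection
        ratio = y / (ybar + eps)  # measured / expected counts
        x = x / (sens + eps) * (A.T @ ratio)  # back-project and normalize
    return x

def poisson_loglik(A, x, y, eps=1e-12):
    """Poisson log-likelihood up to a constant independent of x."""
    ybar = A @ x
    return float(np.sum(y * np.log(ybar + eps) - ybar))

# Toy data: 40 hypothetical detector bins, 8 image pixels.
rng = np.random.default_rng(0)
A = rng.uniform(0.0, 1.0, size=(40, 8))
x_true = rng.uniform(1.0, 5.0, size=8)
y = rng.poisson(A @ x_true).astype(float)

x5 = ml_em(A, y, n_iter=5)
x50 = ml_em(A, y, n_iter=50)
```

Two properties are easy to check on this toy problem: the estimate stays non-negative, and the log-likelihood does not decrease as the iteration count grows, so `poisson_loglik(A, x50, y)` is at least `poisson_loglik(A, x5, y)`.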
