Francois-Louis Tariolle

Directing Cinematographic Drones

Dec 14, 2017

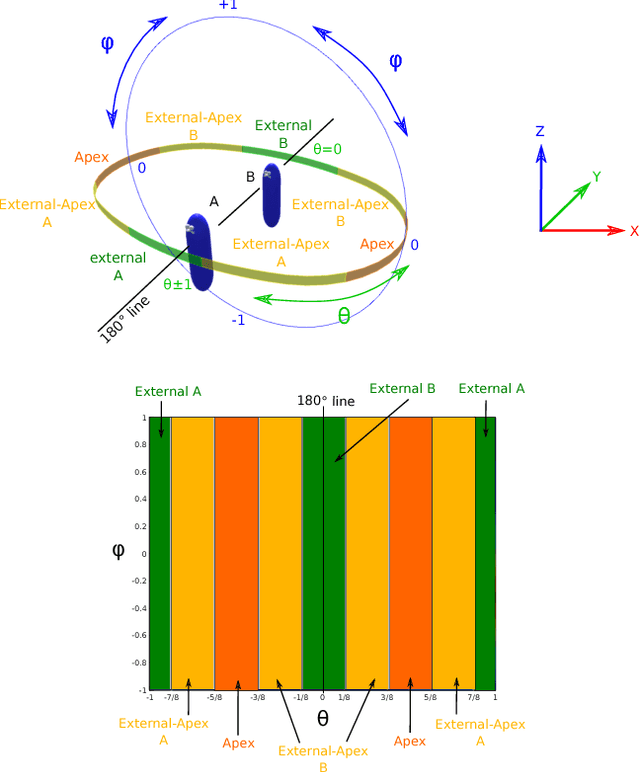

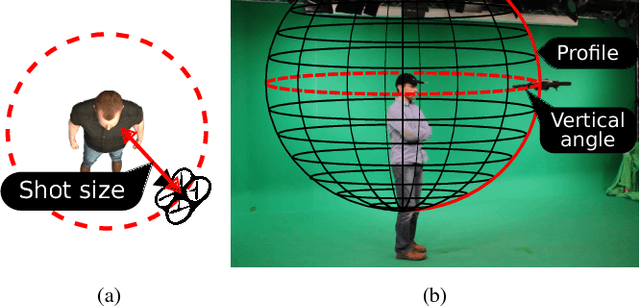

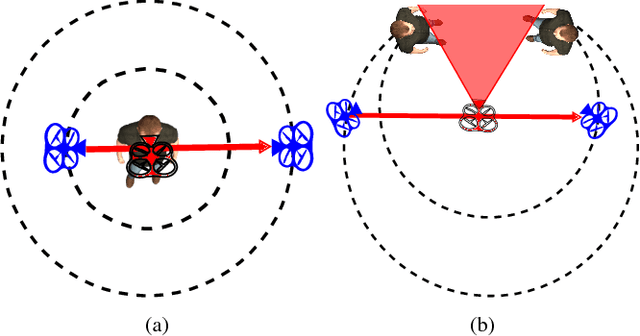

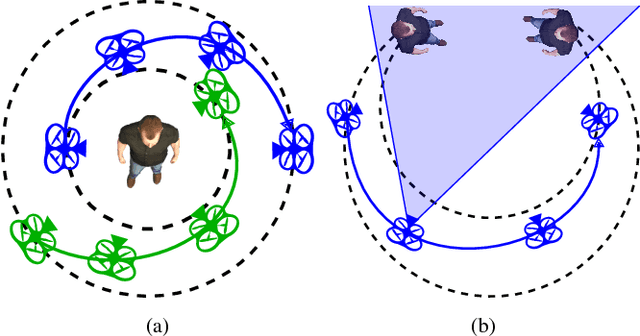

Abstract: Quadrotor drones equipped with high-quality cameras have rapidly emerged as novel, inexpensive and stable devices for filmmakers. While professional drone pilots can create aesthetically pleasing videos in a short time, the smooth (and cinematographic) control of a camera drone remains challenging for most users, despite recent tools that either automate part of the process or enable the manual design of waypoints to create drone trajectories. This paper moves a step further towards more accessible cinematographic drones by designing techniques to automatically or interactively plan quadrotor drone motions in 3D dynamic environments that satisfy both cinematographic and physical quadrotor constraints. First, we propose the Drone Toric Space, a dedicated camera parameter space with embedded constraints, and derive from it intuitive on-screen viewpoint manipulators. Second, we propose a specific path planning technique which ensures both that cinematographic properties can be enforced along the path and that the path is physically feasible for a quadrotor drone. Finally, we build on the Drone Toric Space and this path planning technique to coordinate the motion of multiple drones around dynamic targets. Results on a number of use cases demonstrate the interactive and automated capacities of our approaches.
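Toric-space camera parametrizations in general rest on one geometric fact. If two targets A and B must subtend a fixed angle alpha at the camera C, the inscribed-angle property confines C to a spindle-torus-like surface around the axis AB. The minimal sketch below shows only that constant-angle geometry. It is not the Drone Toric Space from the abstract and does not model its embedded drone constraints. The parameters `beta` (the angle at A between AB and AC) and `phi` (a rotation of the triangle's plane around AB), as well as all function names, are hypothetical choices made for illustration. The distance |AC| follows from the law of sines in triangle ABC: |AC| = |AB| sin(alpha + beta) / sin(alpha).

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]

# Small 3D vector helpers (plain tuples, no external dependencies).
def sub(a: Vec, b: Vec) -> Vec: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a: Vec, b: Vec) -> Vec: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a: Vec, s: float) -> Vec: return (a[0] * s, a[1] * s, a[2] * s)
def dot(a: Vec, b: Vec) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def norm(a: Vec) -> float: return math.sqrt(dot(a, a))
def unit(a: Vec) -> Vec: return scale(a, 1.0 / norm(a))

def toric_camera_position(a: Vec, b: Vec, alpha: float, beta: float, phi: float) -> Vec:
    """Hypothetical helper: return C such that the angle ACB equals alpha.

    alpha: angle subtended at the camera by the two targets, in (0, pi)
    beta:  angle at target A between AB and AC, in (0, pi - alpha)
    phi:   rotation of the triangle's plane around the AB axis
    """
    if not 0.0 < alpha < math.pi:
        raise ValueError("alpha must lie in (0, pi)")
    if not 0.0 < beta < math.pi - alpha:
        raise ValueError("beta must lie in (0, pi - alpha)")
    u = unit(sub(b, a))
    # Pick any helper axis not parallel to AB to build an orthonormal frame (u, v, w).
    helper = (0.0, 0.0, 1.0) if abs(u[2]) < 0.9 else (1.0, 0.0, 0.0)
    v = unit(cross(u, helper))
    w = cross(u, v)
    # Law of sines: the angle at B is pi - alpha - beta, so |AC| = |AB| sin(alpha + beta) / sin(alpha).
    dist_ac = norm(sub(b, a)) * math.sin(alpha + beta) / math.sin(alpha)
    radial = add(scale(v, math.cos(phi)), scale(w, math.sin(phi)))
    # Unit direction making angle beta with AB, rotated by phi around AB.
    direction = add(scale(u, math.cos(beta)), scale(radial, math.sin(beta)))
    return add(a, scale(direction, dist_ac))

def subtended_angle(c: Vec, a: Vec, b: Vec) -> float:
    """Angle at vertex c between the rays c->a and c->b."""
    ca, cb = sub(a, c), sub(b, c)
    cos_ang = dot(ca, cb) / (norm(ca) * norm(cb))
    return math.acos(max(-1.0, min(1.0, cos_ang)))

if __name__ == "__main__":
    A, B = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)
    C = toric_camera_position(A, B, alpha=math.pi / 4, beta=math.pi / 3, phi=0.5)
    print(C, subtended_angle(C, A, B))  # the second value should be close to pi / 4
```

Sweeping `phi` with `alpha` and `beta` fixed moves the camera on a circle around AB while the on-screen angle between the targets stays unchanged. That invariance is the property that makes toric-style parameters convenient for viewpoint manipulation.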

Automated Cinematography with Unmanned Aerial Vehicles

Dec 12, 2017

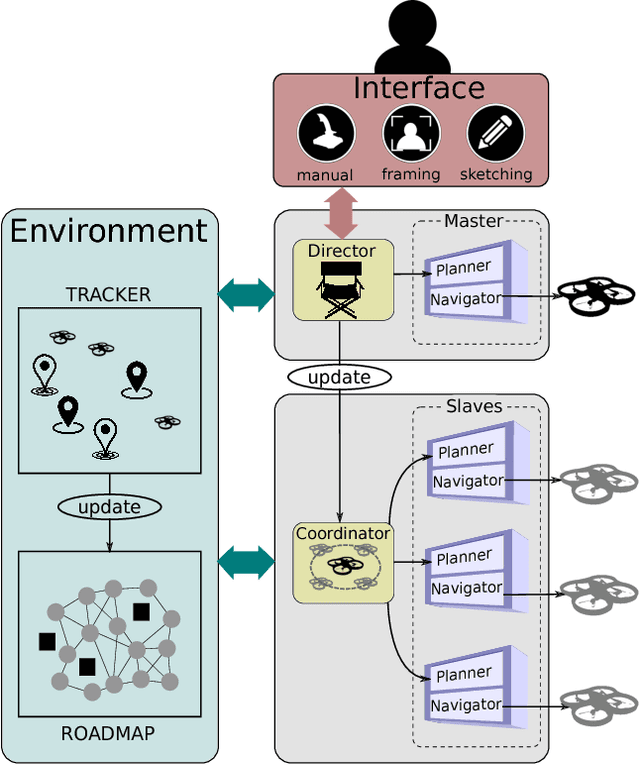

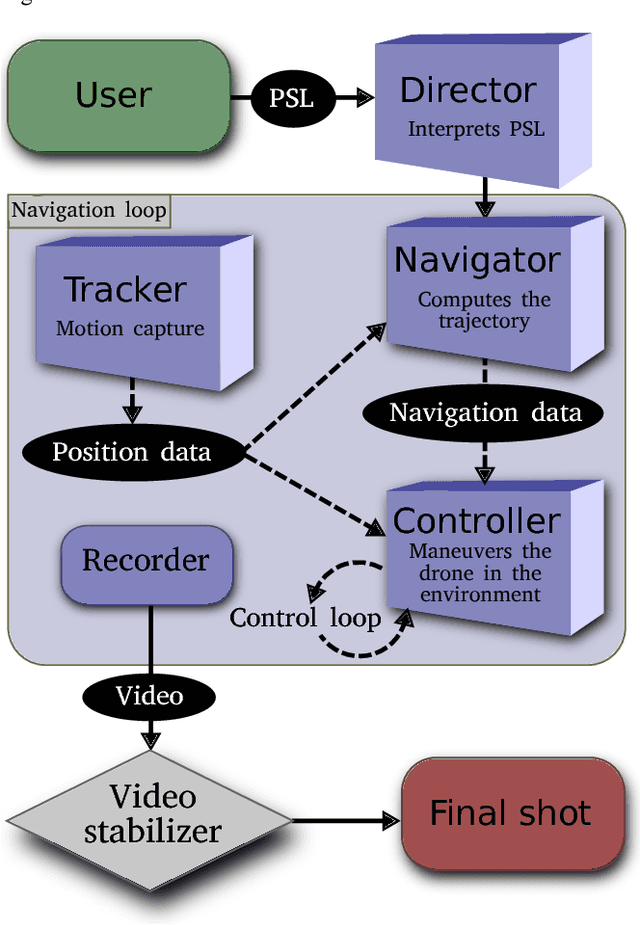

Abstract: The rise of Unmanned Aerial Vehicles (UAVs) and their increasing use in the cinema industry call for the creation of dedicated tools. Though there is a range of techniques to automatically control drones for a variety of applications, none has considered the problem of producing cinematographic camera motion in real time for shooting purposes. In this paper, we present our approach to UAV navigation for autonomous cinematography. The contributions of this research are twofold: (i) we adapt virtual camera control techniques to UAV navigation; (ii) we introduce a drone-independent platform for high-level user interactions that integrates cinematographic knowledge. The results presented in this paper demonstrate the capacity of our tool to capture live movie scenes involving one or two moving actors.
