Francesco Topputo

Modular pipeline for small bodies gravity field modeling: an efficient representation of variable density spherical harmonics coefficients

Sep 04, 2024

Abstract: Proximity operations to small bodies, such as asteroids and comets, demand high levels of autonomy to achieve cost-effective, safe, and reliable Guidance, Navigation and Control (GNC) solutions. Enabling autonomous GNC capabilities in the vicinity of these targets is thus vital for future space applications. However, the highly non-linear and uncertain environment characterizing their vicinity poses unique challenges that need to be assessed to grant robustness against unknown shapes and gravity fields. In this paper, a pipeline designed to generate variable density gravity field models is proposed, allowing the generation of a coherent set of scenarios that can be used for design, validation, and testing of GNC algorithms. The proposed approach consists of processing a polyhedral shape model of the body with a given density distribution to compute the coefficients of the spherical harmonics expansion associated with the gravity field. To validate the approach, several comparisons are conducted against analytical solutions, literature results, and higher fidelity models, across a diverse set of targets with varying morphological and physical properties. Simulation results demonstrate the effectiveness of the methodology, showing good performance in terms of modeling accuracy and computational efficiency. This research presents a faster and more robust framework for generating environmental models to be used in simulation and hardware-in-the-loop testing of onboard GNC algorithms.

RETINA: a hardware-in-the-loop optical facility with reduced optical aberrations

Jul 02, 2024

Abstract: The increasing interest in spacecraft autonomy and the complex tasks to be accomplished by the spacecraft raise the need for a trustworthy approach to perform Verification & Validation of Guidance, Navigation, and Control algorithms. In the context of autonomous operations, vision-based navigation algorithms have established themselves as effective solutions to determine the spacecraft state in orbit with low-cost and versatile sensors. Nevertheless, detailed testing must be performed on the ground to understand the algorithm's robustness and performance on flight hardware. Given the impossibility of testing these algorithms directly on orbit, a dedicated simulation framework must be developed to emulate the orbital environment in a laboratory setup. This paper presents the design of a low-aberration optical facility called RETINA to perform this task. RETINA is designed to accommodate cameras with different characteristics (e.g., sensor size and focal length) while ensuring the correct stimulation of the camera detector. A preliminary design is performed to identify the range of possible components to be used in the facility according to the facility requirements. Then, a detailed optical design is performed in Zemax OpticStudio to optimize the number and characteristics of the lenses composing the facility's optical systems. The final design is compared against the preliminary design to show the superiority of the optical performance achieved with this approach. This work also presents a calibration procedure to estimate the misalignment and the centering errors in the facility. These estimated parameters are used in a dedicated compensation algorithm, enabling the stimulation of the camera at tens of arcseconds of precision. Finally, two different applications are presented to show the versatility of RETINA in accommodating different cameras and in simulating different mission scenarios.

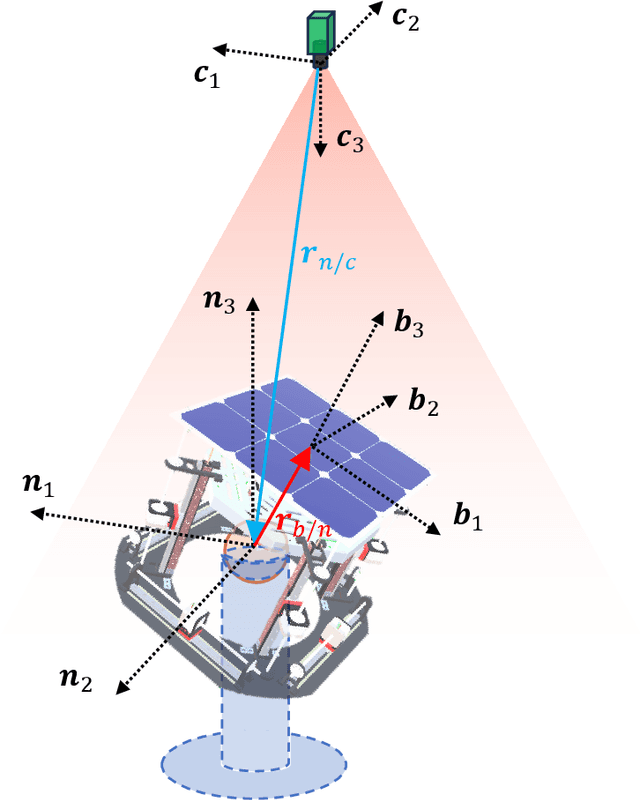

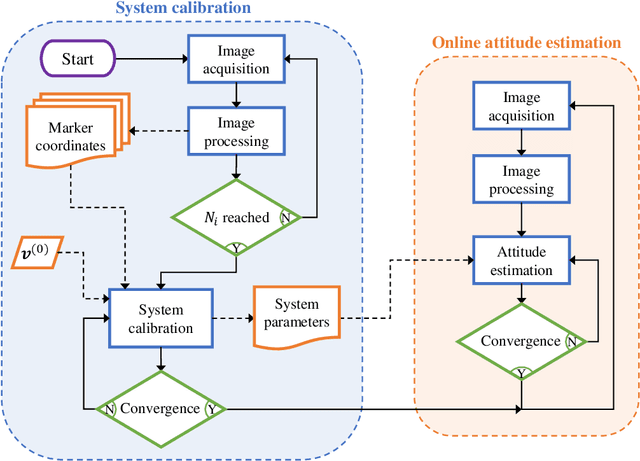

High-accuracy Vision-Based Attitude Estimation System for Air-Bearing Spacecraft Simulators

Dec 13, 2023

Abstract: Air-bearing platforms for simulating the rotational dynamics of satellites require highly precise ground truth systems. Unfortunately, commercial motion capture systems used for this purpose are complex and expensive. This paper shows a novel and versatile method to compute the attitude of rotational air-bearing platforms using a monocular camera and sets of fiducial markers. The work proposes a geometry-based iterative algorithm that is significantly more accurate than other literature methods that involve the solution of the Perspective-n-Point problem. Additionally, auto-calibration procedures to perform a preliminary estimation of the system parameters are shown. The developed methodology is deployed onto a Raspberry Pi 4 micro-computer and tested with a set of LED markers. Data obtained with this setup are compared against computer simulations of the same system to understand and validate the attitude estimation performance. Simulation results show expected 1-sigma accuracies on the order of $\sim 12$ arcsec and $\sim 37$ arcsec for about- and cross-boresight rotations of the platform, and average latency times of 6 ms.

An Autonomous Vision-Based Algorithm for Interplanetary Navigation

Sep 18, 2023

Abstract: The surge of deep-space probes makes it unsustainable to navigate them with standard radiometric tracking. Self-driving interplanetary satellites represent a solution to this problem. In this work, a full vision-based navigation algorithm is built by combining an orbit determination method with an image processing pipeline suitable for interplanetary transfers of autonomous platforms. To increase the computational efficiency of the algorithm, a non-dimensional extended Kalman filter is selected as state estimator, fed by the positions of the planets extracted from deep-space images. The estimation accuracy is enhanced by applying an optimal strategy to select the best pair of planets to track. Moreover, a novel analytical measurement model for deep-space navigation is developed, providing a first-order approximation of the light-aberration and light-time effects. Algorithm performance is tested on a high-fidelity Earth–Mars interplanetary transfer, demonstrating the algorithm's applicability for deep-space navigation.
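As a rough illustration of the two effects named in the abstract, the sketch below applies the classical first-order light-time and stellar-aberration corrections to a planet line of sight. It is not the measurement model derived in the paper. The function names, the choice of kilometres and km/s, and the assumption that all vectors are expressed in one inertial frame are illustrative only.

```python
import math

C_KM_S = 299792.458  # speed of light, km/s


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def light_time_corrected_position(r_planet, v_planet, r_sc):
    """Planet position at light emission, to first order in v/c.

    Assumption: one-step correction using the geometric range at reception
    time and a locally linear planet motion.
    """
    rho = [p - s for p, s in zip(r_planet, r_sc)]
    tau = math.sqrt(dot(rho, rho)) / C_KM_S  # one-way light time, s
    return [p - v * tau for p, v in zip(r_planet, v_planet)]


def aberrated_los(los, v_sc):
    """Apparent line of sight for an observer moving with velocity v_sc.

    First-order aberration: the direction is shifted by the component of
    v_sc / c perpendicular to the true line of sight, then renormalised.
    """
    l = unit(los)
    beta = [v / C_KM_S for v in v_sc]
    b_par = dot(beta, l)
    return unit([li + bi - b_par * li for li, bi in zip(l, beta)])


# Example: observer moving at 30 km/s perpendicular to the true direction.
l_app = aberrated_los([1.0, 0.0, 0.0], [0.0, 30.0, 0.0])
shift_rad = math.atan2(l_app[1], l_app[0])
```

For a transverse velocity of 30 km/s the apparent direction shifts by about v/c, roughly 20 arcseconds, which is large compared with the pixel-level accuracy of a typical navigation camera.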

An Image Processing Pipeline for Autonomous Deep-Space Optical Navigation

Feb 14, 2023

Abstract: A new era of space exploration and exploitation is fast approaching. A multitude of spacecraft will fly in the coming decades under the propulsive momentum of the new space economy. Yet, the flourishing proliferation of deep-space assets will make it unsustainable to pilot them from the ground with standard radiometric tracking. The adoption of autonomous navigation alternatives is crucial to overcoming these limitations. Among these, optical navigation is an affordable and fully ground-independent approach. Probes can triangulate their position by observing visible beacons, e.g., planets or asteroids, and acquiring their lines of sight in deep space. To do so, it is necessary to develop efficient and robust image processing algorithms that provide information to navigation filters. This paper proposes an innovative pipeline for unresolved beacon recognition and line-of-sight extraction from images for autonomous interplanetary navigation. The developed algorithm exploits the k-vector method for non-stellar object identification and statistical likelihood to detect whether any beacon projection is visible in the image. Statistical results show that the accuracy in detecting the planet position projection is independent of the spacecraft position uncertainty. The planet detection success rate, in turn, is higher than 95% when the spacecraft position is known with a 3-sigma accuracy up to $10^5$ km.
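The triangulation idea mentioned in the abstract can be sketched as a small least-squares problem: each beacon with known position p_i and measured line-of-sight direction l_i constrains the observer to a line, and the position estimate is the point closest to all such lines. This is a generic textbook formulation, not the pipeline proposed in the paper. The function names and the use of astronomical units in the example are assumptions.

```python
import math


def unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("lines of sight are (nearly) parallel")
    x = []
    for k in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        x.append(det3(m) / d)
    return x


def triangulate(beacons, los_dirs):
    """Least-squares observer position from beacon positions and directions.

    Each direction points from the observer to the beacon. The normal
    equations are sum(P_i) r = sum(P_i p_i), with P_i = I - l_i l_i^T.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for p, l in zip(beacons, los_dirs):
        l = unit(l)
        for i in range(3):
            for j in range(3):
                p_ij = (1.0 if i == j else 0.0) - l[i] * l[j]
                a[i][j] += p_ij
                b[i] += p_ij * p[j]
    return solve3(a, b)


# Example with two hypothetical beacons (positions in AU).
r_true = [1.0, 0.5, -0.2]
beacons = [[0.7, -0.1, 0.0], [-1.5, 0.3, 0.1]]
los_dirs = [[p - r for p, r in zip(bp, r_true)] for bp in beacons]
r_est = triangulate(beacons, los_dirs)
```

With noise-free directions two non-parallel lines of sight are enough to recover the position. With noisy measurements the same normal equations give the least-squares point, and the conditioning degrades as the two directions become parallel.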

Design of Convolutional Extreme Learning Machines for Vision-Based Navigation Around Small Bodies

Oct 28, 2022

Abstract: Deep learning architectures such as convolutional neural networks are the standard in computer vision for image processing tasks. Their accuracy, however, often comes at the cost of long and computationally expensive training, the need for large annotated datasets, and extensive hyper-parameter searches. On the other hand, a different method known as convolutional extreme learning machine has shown the potential to perform equally well with a dramatic decrease in training time. Space imagery, especially of small bodies, could be well suited for this method. In this work, convolutional extreme learning machine architectures are designed and tested against their deep-learning counterparts. Because of their relatively fast training time, convolutional extreme learning machine architectures enable efficient exploration of the architecture design space, which would have been impractical with deep-learning architectures. This introduces a methodology for the efficient design of a neural network architecture for computer vision tasks. Also, the coupling between the image processing method and labeling strategy is investigated and demonstrated to play a major role when considering vision-based navigation around small bodies.

Boulders Identification on Small Bodies Under Varying Illumination Conditions

Oct 28, 2022

Abstract: The capability to detect boulders on the surface of small bodies is beneficial for vision-based applications such as navigation and hazard detection during critical operations. This task is challenging due to the wide assortment of irregular shapes, the characteristics of the boulder population, and the rapid variability in the illumination conditions. The authors address this challenge by designing a multi-step training approach to develop a data-driven image processing pipeline to robustly detect and segment boulders scattered over the surface of a small body. Due to the limited availability of labeled image-mask pairs, the developed methodology is supported by two artificial environments designed in Blender specifically for this work. These are used to generate a large amount of synthetic image-label sets, which are made publicly available to the image processing community. The methodology presented addresses the challenges of varying illumination conditions, irregular shapes, fast training time, extensive exploration of the architecture design space, and domain gap between synthetic and real images from previously flown missions. The performance of the developed image processing pipeline is tested on both synthetic and real images, exhibiting good performance and high generalization capabilities.

DOORS: Dataset fOr bOuldeRs Segmentation. Statistical properties and Blender setup

Oct 28, 2022

Abstract: The capability to detect boulders on the surface of small bodies is beneficial for vision-based applications such as hazard detection during critical operations and navigation. This task is challenging due to the wide assortment of irregular shapes, the characteristics of the boulder population, and the rapid variability in the illumination conditions. Moreover, the lack of publicly available labeled datasets for these applications hampers research on data-driven algorithms. In this work, the authors provide a statistical characterization of two datasets of boulders on small bodies, together with the setup used to generate them. Both datasets are made publicly available.
