Fabio Ornati

RETINA: a hardware-in-the-loop optical facility with reduced optical aberrations

Jul 02, 2024

Abstract: The increasing interest in spacecraft autonomy and the complex tasks to be accomplished by the spacecraft raise the need for a trustworthy approach to perform Verification & Validation of Guidance, Navigation, and Control algorithms. In the context of autonomous operations, vision-based navigation algorithms have established themselves as effective solutions to determine the spacecraft state in orbit with low-cost and versatile sensors. Nevertheless, detailed testing must be performed on the ground to understand the algorithm's robustness and performance on flight hardware. Because these algorithms cannot be tested directly in orbit, a dedicated simulation framework must be developed to emulate the orbital environment in a laboratory setup. This paper presents the design of a low-aberration optical facility called RETINA to perform this task. RETINA is designed to accommodate cameras with different characteristics (e.g., sensor size and focal length) while ensuring the correct stimulation of the camera detector. A preliminary design is performed to identify the range of components that can be used in the facility according to the facility requirements. Then, a detailed optical design is performed in Zemax OpticStudio to optimize the number and characteristics of the lenses composing the facility's optical systems. The final design is compared against the preliminary design to show the superior optical performance achieved with this approach. This work also presents a calibration procedure to estimate the misalignment and centering errors in the facility. These estimated parameters are used in a dedicated compensation algorithm, enabling the stimulation of the camera with a precision of tens of arcseconds. Finally, two different applications are presented to show the versatility of RETINA in accommodating different cameras and in simulating different mission scenarios.

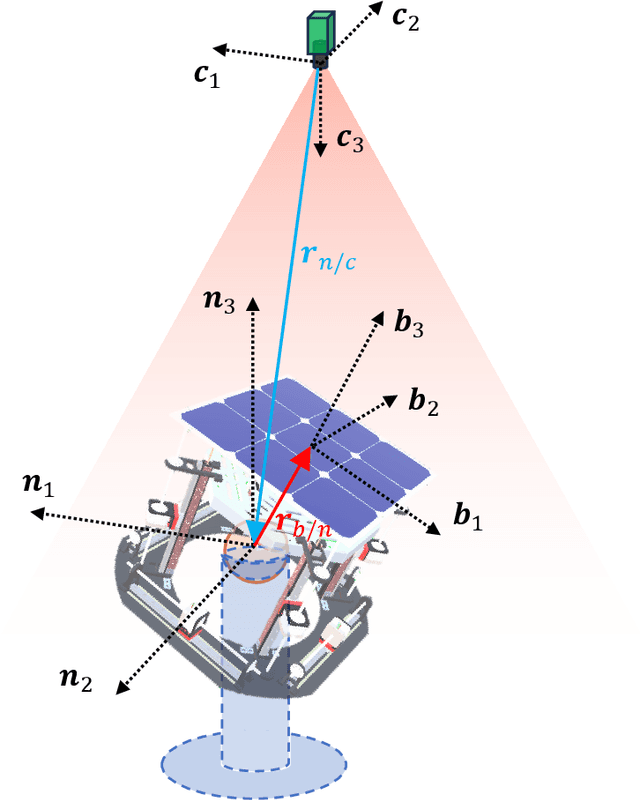

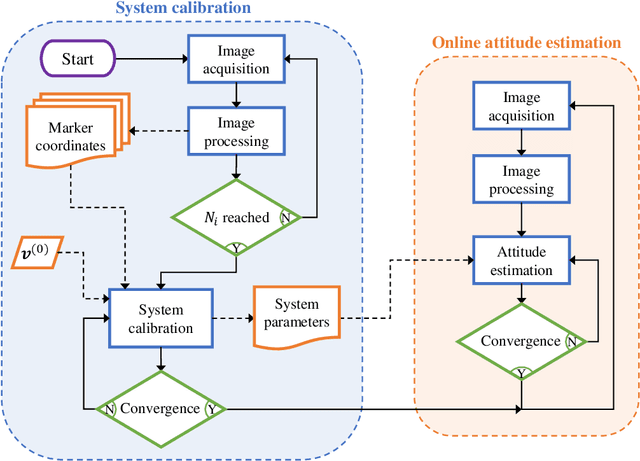

High-accuracy Vision-Based Attitude Estimation System for Air-Bearing Spacecraft Simulators

Dec 13, 2023

Abstract: Air-bearing platforms for simulating the rotational dynamics of satellites require highly precise ground truth systems. Unfortunately, commercial motion capture systems used for this purpose are complex and expensive. This paper shows a novel and versatile method to compute the attitude of rotational air-bearing platforms using a monocular camera and sets of fiducial markers. The work proposes a geometry-based iterative algorithm that is significantly more accurate than other methods in the literature that involve the solution of the Perspective-n-Point problem. Additionally, auto-calibration procedures to perform a preliminary estimation of the system parameters are shown. The developed methodology is deployed onto a Raspberry Pi 4 micro-computer and tested with a set of LED markers. Data obtained with this setup are compared against computer simulations of the same system to understand and validate the attitude estimation performance. Simulation results show expected 1-sigma accuracies on the order of $\sim$12 arcsec and $\sim$37 arcsec for about- and cross-boresight rotations of the platform, respectively, and an average latency of 6 ms.
