Francesco Semeraro

Towards Multi-User Activity Recognition through Facilitated Training Data and Deep Learning for Human-Robot Collaboration Applications

Feb 11, 2023

Abstract: Human-robot interaction (HRI) research is progressively addressing multi-party scenarios, where a robot interacts with more than one human user at the same time. Conversely, research on multi-party human-robot collaboration (HRC) is still at an early stage. Using machine learning techniques to handle this type of collaboration requires data that are harder to produce than in a typical HRC setup. This work outlines design concepts for concurrent tasks in non-dyadic HRC applications. Based on these concepts, the study also proposes an alternative way of gathering multi-user activity data: collecting data from single subjects and merging them in post-processing, which reduces the effort involved in recording pair settings. To validate this approach, 3D skeleton poses of single subjects' activity were collected and merged in pairs. The resulting datapoints were then used to separately train a long short-term memory (LSTM) network and a variational autoencoder (VAE) composed of spatio-temporal graph convolutional networks (STGCN) to recognise the joint activities of the pairs of people. The results showed that data collected in this way can be used for pair HRC settings and yield performance similar to that obtained with data from groups of users recorded under the same settings, avoiding the technical difficulties involved in producing such data.
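The merging step described in the abstract can be illustrated with a minimal sketch. This is not the authors' pipeline: the frame layout (a list of `(x, y, z)` joints per frame), the truncation to the shorter recording, the exhaustive pairing of recordings, and the names `merge_pair` and `build_pair_dataset` are all assumptions made for illustration.

```python
from itertools import product


def merge_pair(seq_a, seq_b):
    """Merge two single-subject skeleton sequences into one pair sequence.

    Each sequence is a list of frames; each frame is a list of (x, y, z)
    joint coordinates. The sequences are truncated to the shorter length
    (an assumption for this sketch, not a detail taken from the paper).
    """
    n_frames = min(len(seq_a), len(seq_b))
    # Concatenate the joints of both subjects frame by frame.
    return [seq_a[t] + seq_b[t] for t in range(n_frames)]


def build_pair_dataset(recordings_a, recordings_b):
    """Combine every recording of one subject with every recording of another.

    Each recording is a (sequence, activity_label) tuple. The joint label of
    a pair is the tuple of the two single-subject labels (hypothetical choice).
    """
    dataset = []
    for (seq_a, label_a), (seq_b, label_b) in product(recordings_a, recordings_b):
        dataset.append((merge_pair(seq_a, seq_b), (label_a, label_b)))
    return dataset
```

Under these assumptions, two subjects with `m` and `n` single recordings yield `m * n` pair samples without any joint recording session, which is the kind of effort reduction the abstract refers to.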

Human-Robot Collaboration and Machine Learning: A Systematic Review of Recent Research

Oct 14, 2021

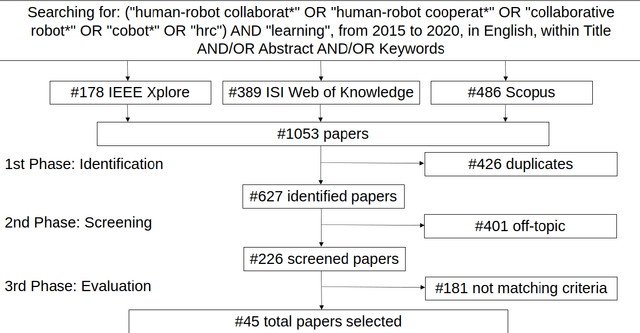

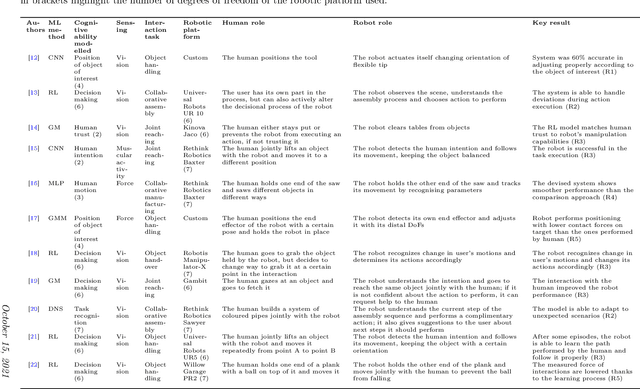

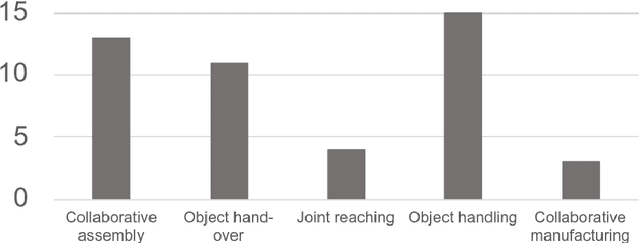

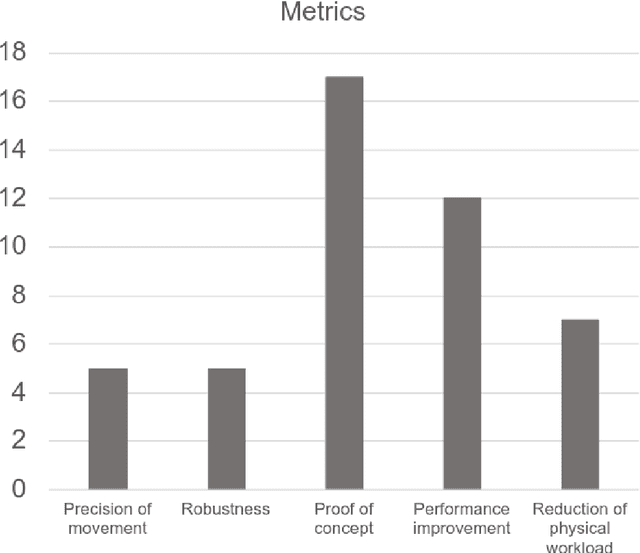

Abstract: Technological progress increasingly envisions the use of robots interacting with people in everyday life. Human-robot collaboration (HRC) is the approach that explores the interaction between a human and a robot during the completion of an actual physical task. Such interplay is explored at both the cognitive and the physical level, by analysing the mutual exchange of information and of mechanical power, respectively. In HRC works, a cognitive model is typically built, which collects inputs from the environment and from the user, then processes and translates them into information that the robot itself can use. HRC studies increasingly employ machine learning algorithms to build the cognitive models and behavioural blocks that process the acquired external inputs. This is a promising approach that is still in its early stages and could benefit significantly from the growing field of machine learning. Consequently, this paper presents a thorough literature review of the use of machine learning techniques in the context of human-robot collaboration. The collection, selection and analysis of a set of 45 key papers, selected from a wide review of the literature on robotics and machine learning, allowed the identification of current trends in HRC. In particular, a clustering of works based on the type of collaborative task, the evaluation metrics and the cognitive variables modelled is proposed. On these premises, an in-depth analysis of different families of machine learning algorithms and their properties, along with the sensing modalities used, was carried out. The salient aspects of the analysis are discussed to show trends and suggest possible challenges to tackle in future research.
