Francesco Ranzato

Fair Training of Decision Tree Classifiers

Jan 04, 2021

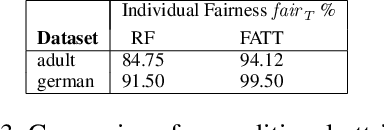

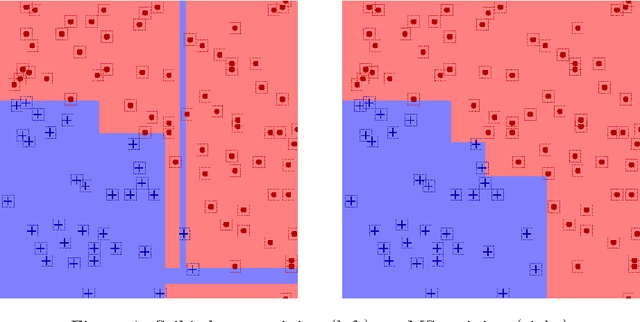

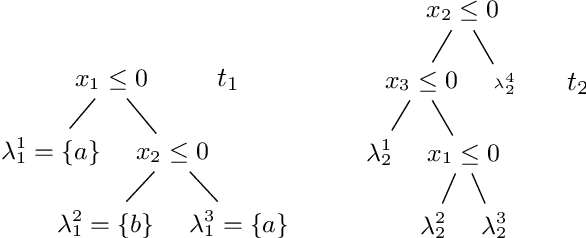

Abstract: We study the problem of formally verifying individual fairness of decision tree ensembles, as well as training tree models which maximize both accuracy and individual fairness. In our approach, fairness verification and fairness-aware training both rely on a notion of stability of a classification model, which is a variant of standard robustness under input perturbations used in adversarial machine learning. Our verification and training methods leverage abstract interpretation, a well-established technique for static program analysis which is able to automatically infer assertions about stability properties of decision trees. By relying on a tool for adversarial training of decision trees, our fairness-aware learning method has been implemented and experimentally evaluated on the reference datasets used to assess fairness properties. The experimental results show that our approach is able to train tree models exhibiting a high degree of individual fairness relative to the natural state-of-the-art CART trees and random forests. Moreover, as a by-product, these fair decision trees turn out to be significantly compact, thus enhancing the interpretability of their fairness properties.
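The stability notion mentioned in the abstract can be illustrated with a small interval analysis over a single decision tree. The following is a minimal sketch, not the authors' tool: the `Leaf`/`Node` classes, the `reachable_labels` and `is_stable` helpers, and the toy loan tree with `income` and `gender` features are all hypothetical names introduced for illustration. The idea is to propagate a box of possible inputs through the tree and collect every label it may reach; if only one label is reachable, the tree is stable on that box.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple, Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # branch taken when x[feature] <= threshold
    right: "Tree"  # branch taken when x[feature] > threshold


Tree = Union[Leaf, Node]
Box = Dict[str, Tuple[float, float]]  # feature name -> (lower, upper) bound


def reachable_labels(tree: Tree, box: Box) -> Set[str]:
    """Over-approximate the set of labels the tree can output on the box."""
    if isinstance(tree, Leaf):
        return {tree.label}
    lo, hi = box[tree.feature]
    labels: Set[str] = set()
    if lo <= tree.threshold:
        # Some point of the box may go left; narrow the interval accordingly.
        narrowed = {**box, tree.feature: (lo, min(hi, tree.threshold))}
        labels |= reachable_labels(tree.left, narrowed)
    if hi > tree.threshold:
        # The closed lower bound is a sound over-approximation of "> threshold".
        narrowed = {**box, tree.feature: (max(lo, tree.threshold), hi)}
        labels |= reachable_labels(tree.right, narrowed)
    return labels


def is_stable(tree: Tree, box: Box) -> bool:
    """True when every input in the box provably receives the same label."""
    return len(reachable_labels(tree, box)) == 1


# Hypothetical toy tree: the decision depends on gender only for higher incomes.
toy_tree = Node(
    "income", 50.0,
    Leaf("deny"),
    Node("gender", 0.5, Leaf("deny"), Leaf("grant")),
)

# Individual fairness check: fix income, let the sensitive feature vary freely.
high_income = {"income": (60.0, 60.0), "gender": (0.0, 1.0)}
low_income = {"income": (40.0, 40.0), "gender": (0.0, 1.0)}
```

Under these assumptions, `is_stable(toy_tree, low_income)` holds because the sensitive feature is never tested on that path, while `is_stable(toy_tree, high_income)` fails because changing `gender` alone can flip the outcome.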

Genetic Adversarial Training of Decision Trees

Dec 21, 2020

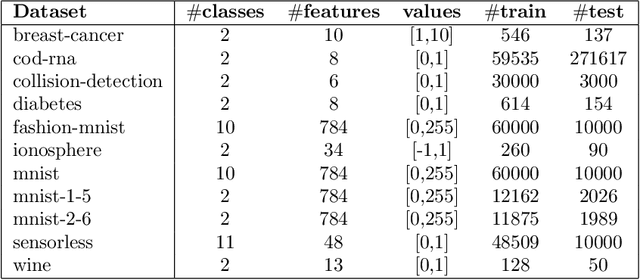

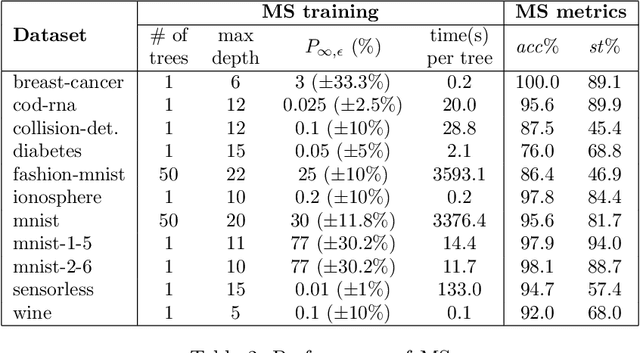

Abstract: We put forward a novel learning methodology for ensembles of decision trees based on a genetic algorithm which is able to train a decision tree for maximizing both its accuracy and its robustness to adversarial perturbations. This learning algorithm internally leverages a complete formal verification technique for robustness properties of decision trees based on abstract interpretation, a well-known static program analysis technique. We implemented this genetic adversarial training algorithm in a tool called Meta-Silvae (MS) and we experimentally evaluated it on some reference datasets used in adversarial training. The experimental results show that MS is able to train robust models that compete with and often improve on the current state of the art in adversarial training of decision trees, while being much more compact and therefore more interpretable and efficient tree models.

Robustness Verification of Support Vector Machines

Apr 26, 2019

Abstract: We study the problem of formally verifying the robustness to adversarial examples of support vector machines (SVMs), a major machine learning model for classification and regression tasks. Following a recent stream of works on formal robustness verification of (deep) neural networks, our approach relies on a sound abstract version of a given SVM classifier to be used for checking its robustness. This methodology is parametric on a given numerical abstraction of real values and, analogously to the case of neural networks, needs neither abstract least upper bounds nor widening operators on this abstraction. The standard interval domain provides a simple instantiation of our abstraction technique, which is enhanced with the domain of reduced affine forms, an efficient abstraction of the zonotope abstract domain. This robustness verification technique has been fully implemented and experimentally evaluated on SVMs based on linear and nonlinear (polynomial and radial basis function) kernels, which have been trained on the popular MNIST dataset of images and on the recent and more challenging Fashion-MNIST dataset. The experimental results of our prototype SVM robustness verifier appear to be encouraging: this automated verification is fast, scalable and shows significantly high percentages of provable robustness on the test set of MNIST, in particular compared to the analogous provable robustness of neural networks.
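The interval instantiation mentioned in the abstract can be sketched for the simplest case, a binary linear SVM under an L-infinity perturbation of radius `eps`. This is an illustrative sketch only, not the authors' verifier: the function names `interval_score` and `is_provably_robust`, and the example weights, are assumptions. The decision score is evaluated over intervals, and the input is provably robust when the resulting score interval does not contain zero. Nonlinear kernels are where plain intervals lose precision, which is the motivation the abstract gives for reduced affine forms; they are not covered here.

```python
from typing import Sequence, Tuple


def interval_score(
    weights: Sequence[float], bias: float, x: Sequence[float], eps: float
) -> Tuple[float, float]:
    """Bound w.x' + b over all x' with |x'_i - x_i| <= eps for every i."""
    lo = hi = bias
    for w, xi in zip(weights, x):
        # Multiplying the interval [xi - eps, xi + eps] by w may swap its ends.
        a, b = w * (xi - eps), w * (xi + eps)
        lo += min(a, b)
        hi += max(a, b)
    return lo, hi


def is_provably_robust(
    weights: Sequence[float], bias: float, x: Sequence[float], eps: float
) -> bool:
    """True when the sign of the score cannot change inside the eps-ball."""
    lo, hi = interval_score(weights, bias, x, eps)
    return lo > 0 or hi < 0


# Hypothetical classifier and input, chosen only to exercise both outcomes.
w, b, x = [1.0, -2.0], 0.5, [2.0, 0.5]
small = is_provably_robust(w, b, x, eps=0.1)  # score stays positive
large = is_provably_robust(w, b, x, eps=1.0)  # score interval straddles zero
```

A `False` result from an abstract check like this means only that robustness could not be proved. For this linear case each input coordinate appears once, so the interval bounds happen to be exact, but in general a sound abstraction may report "unknown" on inputs that are in fact robust.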
