Forrest McKee

Alpha Excel Benchmark

May 07, 2025

Abstract: This study presents a novel benchmark for evaluating Large Language Models (LLMs) using challenges derived from the Financial Modeling World Cup (FMWC) Excel competitions. We introduce a methodology for converting 113 existing FMWC challenges into programmatically evaluable JSON formats and use this dataset to compare the performance of several leading LLMs. Our findings demonstrate significant variations in performance across different challenge categories, with models showing specific strengths in pattern recognition tasks but struggling with complex numerical reasoning. The benchmark provides a standardized framework for assessing LLM capabilities in realistic business-oriented tasks rather than abstract academic problems. This research contributes to the growing field of AI benchmarking by establishing proficiency among the 1.5 billion people who use Microsoft Excel daily as a meaningful evaluation metric that bridges the gap between academic AI benchmarks and practical business applications.
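To make "programmatically evaluable JSON" concrete, here is a minimal sketch of what one challenge record and its scorer might look like. The field names (`id`, `category`, `question`, `answer`, `tolerance`), the sample question, and the `score` function are all hypothetical illustrations; the abstract does not describe the benchmark's actual schema or grading rules.

```python
import json
import math

# Hypothetical challenge record; the field names and the question are
# illustrative assumptions, not the benchmark's actual schema or data.
CHALLENGE_JSON = """
{
  "id": "example-001",
  "category": "numerical",
  "question": "A loan of 1000 accrues 5% simple interest per year. What is owed after 2 years?",
  "answer": 1100,
  "tolerance": 0.01
}
"""


def score(challenge, model_output):
    """Return True when the model's text output matches the expected answer.

    Numeric answers are compared within an absolute tolerance; every other
    answer is compared as a case-insensitive, whitespace-trimmed string.
    """
    expected = challenge["answer"]
    if isinstance(expected, (int, float)):
        try:
            # Accept thousands separators such as "1,100.00".
            value = float(model_output.replace(",", "").strip())
        except ValueError:
            return False  # output was not a bare number
        return math.isclose(value, expected, abs_tol=challenge.get("tolerance", 0.0))
    return model_output.strip().lower() == str(expected).strip().lower()


challenge = json.loads(CHALLENGE_JSON)
```

With a record in this shape, grading a model reduces to calling `score(challenge, output)` per challenge and averaging the results within each category.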

Forbidden Science: Dual-Use AI Challenge Benchmark and Scientific Refusal Tests

Feb 08, 2025

Abstract: The development of robust safety benchmarks for large language models requires open, reproducible datasets that can measure both appropriate refusal of harmful content and potential over-restriction of legitimate scientific discourse. We present an open-source dataset and testing framework for evaluating LLM safety mechanisms, primarily across controlled-substance queries, analyzing four major models' responses to systematically varied prompts. Our results reveal distinct safety profiles: Claude-3.5-sonnet demonstrated the most conservative approach with 73% refusals and 27% allowances, while Mistral attempted to answer 100% of queries. GPT-3.5-turbo showed moderate restriction with 10% refusals and 90% allowances, and Grok-2 registered 20% refusals and 80% allowances. Testing prompt variation strategies revealed decreasing response consistency, from 85% with single prompts to 65% with five variations. This publicly available benchmark enables systematic evaluation of the critical balance between necessary safety restrictions and potential over-censorship of legitimate scientific inquiry, while providing a foundation for measuring progress in AI safety implementation. Chain-of-thought analysis reveals potential vulnerabilities in safety mechanisms, highlighting the complexity of implementing robust safeguards without unduly restricting desirable and valid scientific discourse.
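The refusal and consistency percentages above are simple tallies over labeled responses. The sketch below shows one way such figures could be computed. The labels (`"refuse"`, `"allow"`), the function names, and in particular the definition of consistency (the share of queries whose prompt variants all received the same label) are assumptions for illustration; the abstract does not state how the authors defined or measured these quantities.

```python
from collections import Counter


def refusal_rate(labels):
    """Fraction of responses labeled 'refuse' (labels are assumed names)."""
    counts = Counter(labels)
    return counts["refuse"] / len(labels)


def consistency(labels_by_query):
    """Fraction of queries whose prompt variants all received the same label.

    This is one plausible definition of consistency, assumed here for
    illustration only.
    """
    same = sum(1 for labels in labels_by_query.values() if len(set(labels)) == 1)
    return same / len(labels_by_query)


# Made-up labels, purely to exercise the functions.
sample_labels = ["refuse", "allow", "allow", "refuse"]
sample_variants = {
    "query-a": ["refuse", "refuse", "refuse"],
    "query-b": ["allow", "refuse", "allow"],
}
```

Under this definition, adding more variants per query can only keep consistency the same or lower it, which is in line with the direction of the trend reported above.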

Novel AI Camera Camouflage: Face Cloaking Without Full Disguise

Dec 18, 2024

Abstract: This study demonstrates a novel approach to facial camouflage that combines targeted cosmetic perturbations and alpha transparency layer manipulation to evade modern facial recognition systems. Unlike previous methods -- such as CV dazzle, adversarial patches, and theatrical disguises -- this work achieves effective obfuscation through subtle modifications to key-point regions, particularly the brow, nose bridge, and jawline. Empirical testing with Haar cascade classifiers and commercial systems like BetaFaceAPI and Microsoft Bing Visual Search reveals that vertical perturbations near dense facial key points significantly disrupt detection without relying on overt disguises. Additionally, leveraging alpha transparency attacks in PNG images creates a dual-layer effect: faces remain visible to human observers but disappear in machine-readable RGB layers, rendering them unidentifiable during reverse image searches. The results highlight the potential for creating scalable, low-visibility facial obfuscation strategies that balance effectiveness and subtlety, opening pathways for defeating surveillance while maintaining plausible anonymity.

The Impossible Test: A 2024 Unsolvable Dataset and A Chance for an AGI Quiz

Nov 20, 2024

Abstract: This research introduces a novel evaluation framework designed to assess large language models' (LLMs) ability to acknowledge uncertainty on 675 fundamentally unsolvable problems. Using a curated dataset of graduate-level grand challenge questions with intentionally unknowable answers, we evaluated twelve state-of-the-art LLMs, including both open and closed-source models, on their propensity to admit ignorance rather than generate plausible but incorrect responses. The best models scored in the 62-68% accuracy range for admitting that the problem's solution was unknown, in fields ranging from biology to philosophy and mathematics. We observed an inverse relationship between problem difficulty and model accuracy, with GPT-4 demonstrating higher rates of uncertainty acknowledgment on more challenging problems (35.8%) compared to simpler ones (20.0%). This pattern indicates that models may be more prone to generate speculative answers when problems appear more tractable. The study also revealed significant variations across problem categories, with models showing difficulty in acknowledging uncertainty in invention and NP-hard problems while performing relatively better on philosophical and psychological challenges. These results contribute to the growing body of research on artificial general intelligence (AGI) assessment by highlighting the importance of uncertainty recognition as a critical component of future machine intelligence evaluation. This impossibility test thus extends previous theoretical frameworks for universal intelligence testing by providing empirical evidence of current limitations in LLMs' ability to recognize their own knowledge boundaries, suggesting new directions for improving model training architectures and evaluation approaches.

Hallucinating AI Hijacking Attack: Large Language Models and Malicious Code Recommenders

Oct 09, 2024

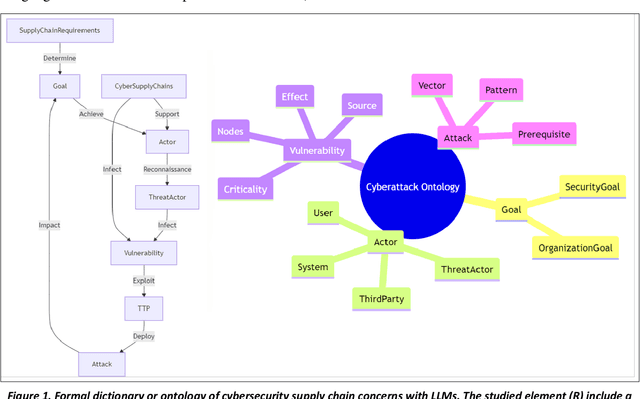

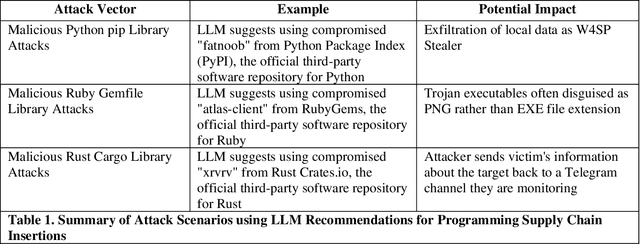

Abstract: The research builds and evaluates the adversarial potential to introduce copied code or hallucinated AI recommendations for malicious code in popular code repositories. While foundational large language models (LLMs) from OpenAI, Google, and Anthropic guard against both harmful behaviors and toxic strings, previous work on math solutions that embed harmful prompts demonstrates that the guardrails may differ between expert contexts. These loopholes would appear in mixture-of-experts models when the context of the question changes and may offer fewer malicious training examples to filter toxic comments or recommended offensive actions. The present work demonstrates that foundational models may refuse to propose destructive actions correctly when prompted overtly but may unfortunately drop their guard when presented with a sudden change of context, like solving a computer programming challenge. We show empirical examples with trojan-hosting repositories like GitHub, NPM, NuGet, and popular content delivery networks (CDNs) like jsDelivr, which amplify the attack surface. In the LLM's directives to be helpful, example recommendations propose application programming interface (API) endpoints which a determined domain-squatter could acquire and use to set up mobile attack infrastructure that triggers from the naively copied code. We compare this attack to previous work on context-shifting and contrast the attack surface as a novel version of "living off the land" attacks in the malware literature. In the latter case, foundational language models can hijack otherwise innocent user prompts to recommend actions that violate their owners' safety policies when posed directly without the accompanying coding support request.

Exploiting Alpha Transparency In Language And Vision-Based AI Systems

Feb 15, 2024

Abstract: This investigation reveals a novel exploit derived from PNG image file formats, specifically their alpha transparency layer, and its potential to fool multiple AI vision systems. Our method uses this alpha layer as a clandestine channel invisible to human observers but fully actionable by AI image processors. The scope tested for the vulnerability spans representative vision systems from Apple, Microsoft, Google, Salesforce, Nvidia, and Facebook, highlighting the attack's potential breadth. This vulnerability challenges the security protocols of existing and fielded vision systems, from medical imaging to autonomous driving technologies. Our experiments demonstrate that the affected systems, which rely on convolutional neural networks or the latest multimodal language models, cannot quickly mitigate these vulnerabilities through simple patches or updates. Instead, they require retraining and architectural changes, indicating a persistent hole in multimodal technologies without some future adversarial hardening against such vision-language exploits.

Transparency Attacks: How Imperceptible Image Layers Can Fool AI Perception

Jan 29, 2024

Abstract: This paper investigates a novel algorithmic vulnerability when imperceptible image layers confound multiple vision models into arbitrary label assignments and captions. We explore image preprocessing methods to introduce stealth transparency, which triggers AI misinterpretation of what the human eye perceives. The research compiles a broad attack surface to investigate consequences ranging from traditional watermarking and steganography to background-foreground miscues. We demonstrate dataset poisoning using the attack to mislabel a collection of grayscale landscapes and logos using either a single attack layer or randomly selected poisoning classes. For example, a military tank to the human eye is a mislabeled bridge to object classifiers based on convolutional networks (YOLO, etc.) and vision transformers (ViT, GPT-Vision, etc.). A notable attack limitation stems from its dependency on the background (hidden) layer in grayscale as a rough match to the transparent foreground image that the human eye perceives. This dependency limits the practical success rate without manual tuning and exposes the hidden layers when placed on the opposite display theme (e.g., light background, light transparent foreground visible, works best against a light theme image viewer or browser). The stealth transparency confounds established vision systems, including evading facial recognition and surveillance, digital watermarking, content filtering, dataset curating, automotive and drone autonomy, forensic evidence tampering, and retail product misclassifying. This method stands in contrast to traditional adversarial attacks that typically focus on modifying pixel values in ways that are either slightly perceptible or entirely imperceptible for both humans and machines.
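The dependence on display theme follows from ordinary alpha compositing, in which a viewer shows `alpha * pixel + (1 - alpha) * background` while a pipeline that discards the alpha channel sees only the stored pixel. The sketch below works this out for a single grayscale pixel. It is a minimal illustration of standard "over" compositing under assumed names (`composite`, `solve_alpha`) and values normalized to 0..1, not the authors' implementation.

```python
def composite(pixel, alpha, background):
    """Standard 'over' compositing for one grayscale pixel (values in 0..1)."""
    return alpha * pixel + (1.0 - alpha) * background


def solve_alpha(hidden, visible, background=1.0):
    """Alpha that makes stored value `hidden` render as `visible` over `background`.

    Returns None when no alpha in [0, 1] can produce the requested appearance,
    which is the case whenever `visible` does not lie between `hidden` and
    `background`.
    """
    if hidden == background:
        # The stored pixel already equals the background, so only that
        # one appearance is reachable.
        return 0.0 if visible == background else None
    alpha = (visible - background) / (hidden - background)
    return alpha if 0.0 <= alpha <= 1.0 else None


# Illustrative values: the stored (machine-read) pixel is dark, and the
# viewer should see a mid-gray on a white (light-theme) background.
alpha = solve_alpha(hidden=0.2, visible=0.6, background=1.0)
on_light = composite(0.2, alpha, 1.0)  # what a light-theme viewer shows
on_dark = composite(0.2, alpha, 0.0)   # what a dark-theme viewer shows
```

In this example the same pixel renders as the intended mid-gray on a light background but much darker on a dark background, and some hidden/visible pairs have no valid alpha at all. Both effects are consistent with the limitation described above: the hidden layer must roughly match the visible image, and the opposite display theme exposes it.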

Acoustic Cybersecurity: Exploiting Voice-Activated Systems

Nov 23, 2023

Abstract: In this study, we investigate the emerging threat of inaudible acoustic attacks targeting digital voice assistants, a critical concern given that their number is projected to exceed the global population by 2024. Our research extends the feasibility of these attacks across various platforms like Amazon's Alexa, Android, iOS, and Cortana, revealing significant vulnerabilities in smart devices. The twelve attack vectors identified include successful manipulation of smart home devices and automotive systems, potential breaches in military communication, and challenges in critical infrastructure security. We quantitatively show that attack success rates hover around 60%, with the ability to activate devices remotely from over 100 feet away. Additionally, these attacks threaten critical infrastructure, emphasizing the need for multifaceted defensive strategies combining acoustic shielding, advanced signal processing, machine learning, and robust user authentication to mitigate these risks.

Adversarial Agents For Attacking Inaudible Voice Activated Devices

Jul 25, 2023

Abstract: The paper applies reinforcement learning to novel Internet of Things configurations. Our analysis of inaudible attacks on voice-activated devices confirms the alarming risk factor of 7.6 out of 10, underlining significant security vulnerabilities scored independently by the NIST National Vulnerability Database (NVD). Our baseline network model showcases a scenario in which an attacker uses inaudible voice commands to gain unauthorized access to confidential information on a secured laptop. We simulated many attack scenarios on this baseline network model, revealing the potential for mass exploitation of interconnected devices to discover and own privileged information through physical access without adding new hardware or amplifying device skills. Using Microsoft's CyberBattleSim framework, we evaluated six reinforcement learning algorithms and found that Deep-Q learning with exploitation proved optimal, leading to rapid ownership of all nodes in fewer steps. Our findings underscore the critical need for understanding non-conventional networks and new cybersecurity measures in an ever-expanding digital landscape, particularly those characterized by mobile devices, voice activation, and non-linear microphones susceptible to malicious actors operating stealth attacks in the near-ultrasound or inaudible ranges. By 2024, this new attack surface might encompass more digital voice assistants than people on the planet yet offer fewer remedies than conventional patching or firmware fixes since the inaudible attacks arise inherently from the microphone design and digital signal processing.

Numeracy from Literacy: Data Science as an Emergent Skill from Large Language Models

Jan 31, 2023

Abstract: Large language models (LLMs) such as OpenAI's ChatGPT and GPT-3 offer unique testbeds for exploring the translation challenges of turning literacy into numeracy. Previous publicly available transformer models from eighteen months prior and 1000 times smaller failed to provide basic arithmetic. The statistical analysis of four complex datasets described here combines arithmetic manipulations that cannot be memorized or encoded by simple rules. The work examines whether next-token prediction succeeds from sentence completion into the realm of actual numerical understanding. For example, the work highlights cases for descriptive statistics on in-memory datasets that the LLM initially loads from memory or generates randomly using Python libraries. The resulting exploratory data analysis showcases the model's capabilities to group by or pivot categorical sums, infer feature importance, derive correlations, and predict unseen test cases using linear regression. To extend the model's testable range, the research deletes and appends random rows such that recall alone cannot explain emergent numeracy.
