Floris van Breugel

COSMOS: A Data-Driven Probabilistic Time Series simulator for Chemical Plumes across Spatial Scales

May 28, 2025

Abstract: The development of robust odor navigation strategies for automated environmental monitoring applications requires realistic simulations of odor time series for agents moving across large spatial scales. Traditional approaches that rely on computational fluid dynamics (CFD) methods can capture the spatiotemporal dynamics of odor plumes, but are impractical for large-scale simulations due to their computational expense. On the other hand, puff-based simulations, although computationally tractable for large scales and capable of capturing the stochastic nature of plumes, fail to reproduce naturalistic odor statistics. Here, we present COSMOS (Configurable Odor Simulation Model over Scalable Spaces), a data-driven probabilistic framework that synthesizes realistic odor time series from spatial and temporal features of real datasets. COSMOS generates similar distributions of key statistical features such as whiff frequency, duration, and concentration as observed in real data, while dramatically reducing computational overhead. By reproducing critical statistical properties across a variety of flow regimes and scales, COSMOS enables the development and evaluation of agent-based navigation strategies with naturalistic odor experiences. To demonstrate its utility, we compare odor-tracking agents exposed to CFD-generated plumes versus COSMOS simulations, showing that both their odor experiences and resulting behaviors are quite similar.
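The whiff statistics named in this abstract (frequency, duration, and concentration) can be made concrete with a small sketch. The code below is not COSMOS itself; it only shows one plausible way to extract those statistics from a uniformly sampled concentration trace. The threshold-based definition of a whiff, the names `Whiff`, `find_whiffs`, and `whiff_frequency`, and the toy trace are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Whiff:
    start: float      # time at which the run above threshold begins (s)
    duration: float   # length of the above-threshold run (s)
    mean_conc: float  # mean concentration during the run


def find_whiffs(conc: List[float], dt: float, threshold: float) -> List[Whiff]:
    """Split a uniformly sampled trace into whiffs.

    Assumption of this sketch: a whiff is a maximal run of consecutive
    samples strictly above `threshold`.
    """
    whiffs = []
    run_start = None
    # The trailing sentinel closes a run that reaches the end of the trace.
    for i, c in enumerate(list(conc) + [float("-inf")]):
        if c > threshold and run_start is None:
            run_start = i
        elif c <= threshold and run_start is not None:
            samples = conc[run_start:i]
            whiffs.append(
                Whiff(run_start * dt, len(samples) * dt, sum(samples) / len(samples))
            )
            run_start = None
    return whiffs


def whiff_frequency(whiffs: List[Whiff], total_time: float) -> float:
    """Number of whiffs per unit time over the whole trace."""
    return len(whiffs) / total_time


# Toy trace (made-up values), sampled every 0.5 s.
trace = [0.0, 0.0, 2.0, 4.0, 0.0, 0.0, 0.0, 6.0, 0.0, 0.0]
whiffs = find_whiffs(trace, dt=0.5, threshold=1.0)
freq = whiff_frequency(whiffs, total_time=len(trace) * 0.5)
```

Comparing the distributions of `duration`, `mean_conc`, and `freq` between a simulated and a measured trace is one way to check whether a simulator reproduces the statistics the abstract refers to.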

A Method for Classifying Snow Using Ski-Mounted Strain Sensors

Apr 27, 2023

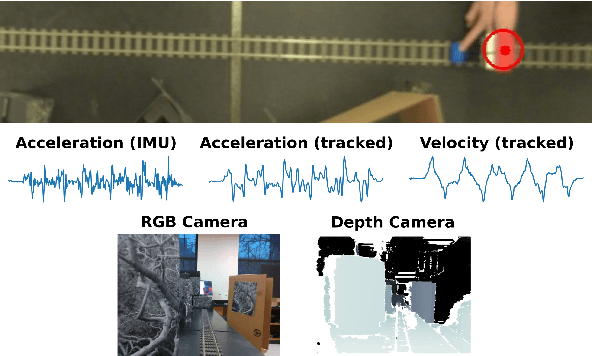

Abstract: Understanding the structure, quantity, and type of snow in mountain landscapes is crucial for assessing avalanche safety, interpreting satellite imagery, building accurate hydrology models, and choosing the right pair of skis for your weekend trip. Currently, such characteristics of snowpack are measured using a combination of remote satellite imagery, weather stations, and laborious point measurements and descriptions provided by local forecasters, guides, and backcountry users. Here, we explore how characteristics of the top layer of snowpack could be estimated while skiing using strain sensors mounted to the top surface of an alpine ski. We show that with two strain gauges and an inertial measurement unit it is feasible to correctly assign one of three qualitative labels (powder, slushy, or icy/groomed snow) to each 10-second segment of a trajectory with 97% accuracy, independent of skiing style. Our algorithm uses a combination of a data-driven linear model of the ski-snow interaction, dimensionality reduction, and a Naive Bayes classifier. Comparisons of classifier performance between strain gauges suggest that the optimal placement of strain gauges is halfway between the binding and the tip/tail of the ski, in the cambered section just before the point where the unweighted ski would touch the snow surface. The ability to classify snow using skis, potentially in real time, opens the door to applications that range from citizen science efforts to map snow surface characteristics in the backcountry to the development of skis with automated stiffness tuning based on the snow type.
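The final stage of the pipeline described above, a Naive Bayes classifier over reduced features, can be sketched as follows. This is a minimal illustration and not the authors' implementation: the Gaussian likelihood is an assumption (the abstract does not say which Naive Bayes variant is used), the two-dimensional feature vectors are made-up numbers standing in for the output of the linear model and dimensionality reduction, and the function names are hypothetical.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(features, labels):
    """Estimate a prior, per-feature means, and per-feature variances per class."""
    grouped = defaultdict(list)
    for x, y in zip(features, labels):
        grouped[y].append(x)
    model = {}
    for label, rows in grouped.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        # A small floor keeps every variance strictly positive.
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(zip(*rows), means)
        ]
        model[label] = (n / len(features), means, variances)
    return model


def predict(model, x):
    """Return the label with the highest log posterior for feature vector x."""
    def log_posterior(params):
        prior, means, variances = params
        log_lik = sum(
            -0.5 * math.log(2 * math.pi * var) - (xi - m) ** 2 / (2 * var)
            for xi, m, var in zip(x, means, variances)
        )
        return math.log(prior) + log_lik
    return max(model, key=lambda label: log_posterior(model[label]))


# Hypothetical reduced features, one row per 10-second segment (made-up values).
train_x = [
    [0.2, 1.1], [0.3, 0.9], [0.25, 1.0],   # powder
    [1.0, 0.5], [1.1, 0.6], [0.9, 0.4],    # slushy
    [2.0, 0.1], [2.2, 0.2], [2.1, 0.15],   # icy/groomed
]
train_y = ["powder"] * 3 + ["slushy"] * 3 + ["icy/groomed"] * 3

model = fit_gaussian_nb(train_x, train_y)
prediction = predict(model, [1.05, 0.55])
```

Naive Bayes treats the features as conditionally independent given the class, which keeps training and prediction cheap enough that per-segment classification in real time is plausible on modest hardware.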

Emergent behavior and neural dynamics in artificial agents tracking turbulent plumes

Sep 25, 2021

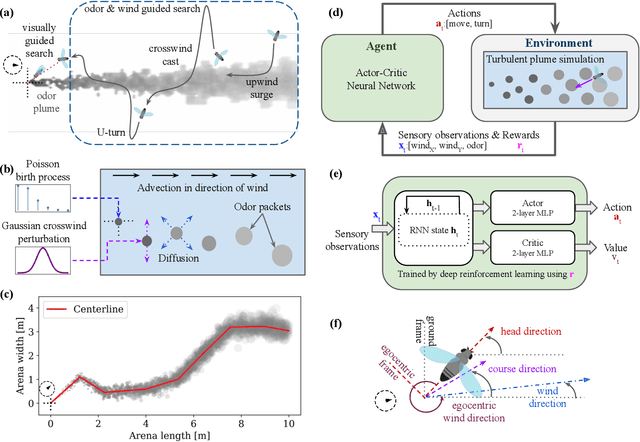

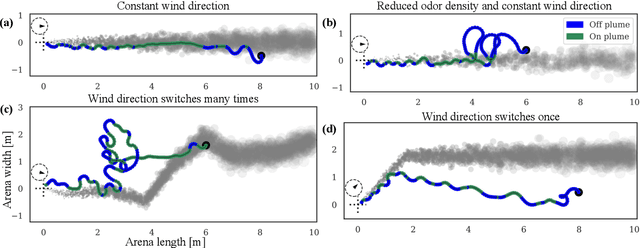

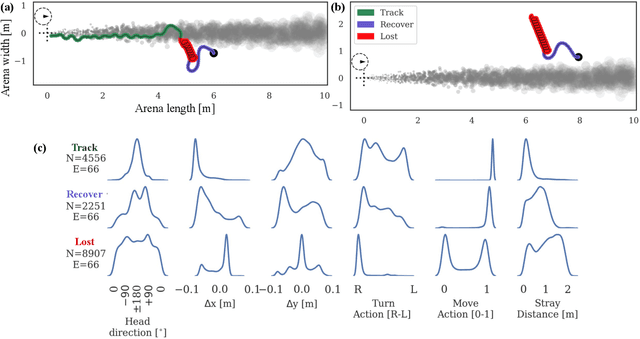

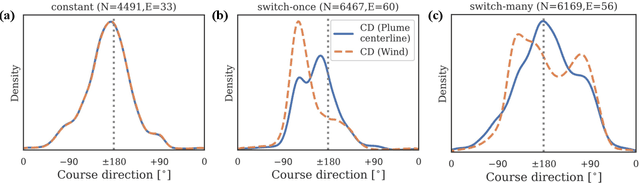

Abstract: Tracking a turbulent plume to locate its source is a complex control problem because it requires multi-sensory integration and must be robust to intermittent odors, changing wind direction, and variable plume statistics. This task is routinely performed by flying insects, often over long distances, in pursuit of food or mates. Many experimental studies have examined aspects of this remarkable behavior in detail. Here, we take a complementary in silico approach, using artificial agents trained with reinforcement learning to develop an integrated understanding of the behaviors and neural computations that support plume tracking. Specifically, we use deep reinforcement learning (DRL) to train recurrent neural network (RNN) agents to locate the source of simulated turbulent plumes. Interestingly, the agents' emergent behaviors resemble those of flying insects, and the RNNs learn to represent task-relevant variables, such as head direction and time since last odor encounter. Our analyses suggest an intriguing experimentally testable hypothesis for tracking plumes in changing wind direction: that agents follow local plume shape rather than the current wind direction. While reflexive short-memory behaviors are sufficient for tracking plumes in constant wind, longer timescales of memory are essential for tracking plumes that switch direction. At the level of neural dynamics, the RNNs' population activity is low-dimensional and organized into distinct dynamical structures, with some correspondence to behavioral modules. Our in silico approach provides key intuitions for turbulent plume tracking strategies and motivates future targeted experimental and theoretical developments.

FLIVVER: Fly Lobula Inspired Visual Velocity Estimation & Ranging

Apr 10, 2020

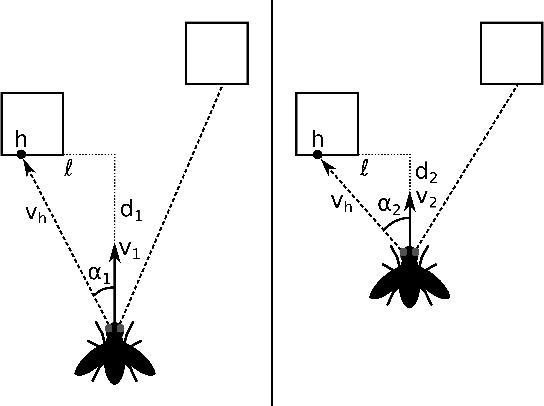

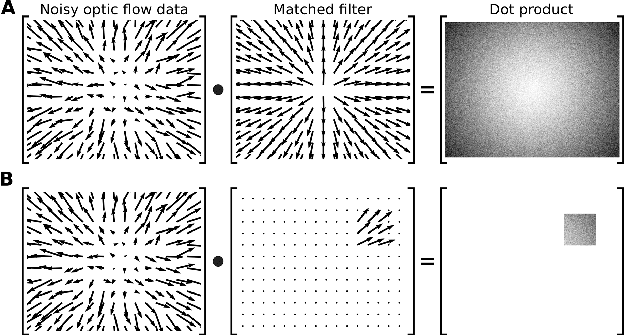

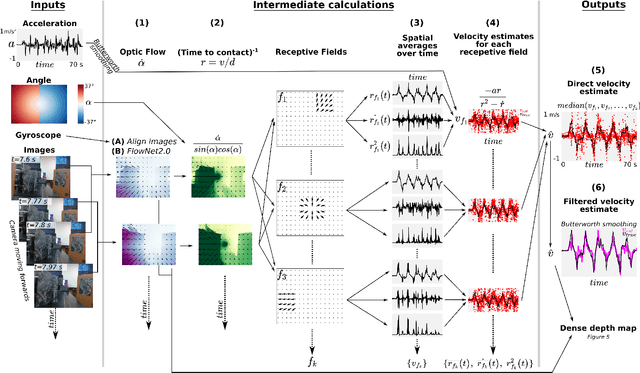

Abstract: The mechanism by which a tiny insect or insect-sized robot could estimate its absolute velocity and distance to nearby objects remains unknown. However, this ability is critical for behaviors that require estimating wind direction during flight, such as odor-plume tracking. Neuroscience and behavior studies with insects have shown that they rely on the perception of image motion, or optic flow, to estimate relative motion, equivalent to a ratio of their velocity and distance to objects in the world. The key open challenge is therefore to decouple these two states from a single measurement of their ratio. Although modern SLAM (Simultaneous Localization and Mapping) methods provide a solution to this problem for robotic systems, these methods typically rely on computations that insects likely cannot perform, such as simultaneously tracking multiple individual visual features, remembering a 3D map of the world, and solving nonlinear optimization problems using iterative algorithms. Here we present a novel algorithm, FLIVVER, which combines the geometry of dynamic forward motion with inspiration from insect visual processing to *directly* estimate absolute ground velocity from a combination of optic flow and acceleration information. Our algorithm provides a clear hypothesis for how insects might estimate absolute velocity, and also provides a theoretical framework for designing fast analog circuitry for efficient state estimation, which could be applied to insect-sized robots.
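To see why acceleration information can separate velocity from distance, consider a simplified one-dimensional case. This is an illustrative sketch under stated assumptions and not the FLIVVER algorithm: an agent approaches a surface head-on, so the distance d shrinks at the approach speed v, the visual measurement is r = v/d, and the acceleration a = dv/dt is known. Differentiating r gives dr/dt = a/d + r², so d = a / (dr/dt - r²) and v = r·d. The trajectory parameters and function names below are hypothetical.

```python
def true_state(t, d0=10.0, v0=1.0, a=0.5):
    """Ground truth for a head-on approach with constant acceleration a."""
    v = v0 + a * t
    d = d0 - v0 * t - 0.5 * a * t * t
    return d, v


def optic_flow_ratio(t):
    """The only visual measurement assumed available: r = v / d."""
    d, v = true_state(t)
    return v / d


def decouple(r, r_dot, a):
    """Recover distance and speed from r, its time derivative, and acceleration.

    Uses r_dot = a / d + r**2, which holds when d_dot = -v (head-on approach).
    The estimate is ill-conditioned when a is close to zero.
    """
    d = a / (r_dot - r * r)
    return d, r * d


t, h, a = 1.0, 1e-4, 0.5
r = optic_flow_ratio(t)
# Central finite difference stands in for a measured rate of change of r.
r_dot = (optic_flow_ratio(t + h) - optic_flow_ratio(t - h)) / (2 * h)
d_est, v_est = decouple(r, r_dot, a)
```

The sketch also shows the limitation of this idea: with zero acceleration the denominator vanishes and the ratio alone cannot be decoupled, which is why the motion has to be dynamic.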
