Floris Bex

The Explabox: Model-Agnostic Machine Learning Transparency & Analysis

Nov 22, 2024

Abstract: We present the Explabox: an open-source toolkit for transparent and responsible machine learning (ML) model development and usage. Explabox aids in achieving explainable, fair and robust models by employing a four-step strategy: explore, examine, explain and expose. These steps offer model-agnostic analyses that transform complex 'ingestibles' (models and data) into interpretable 'digestibles'. The toolkit encompasses digestibles for descriptive statistics, performance metrics, model behavior explanations (local and global), and robustness, security, and fairness assessments. Implemented in Python, Explabox supports multiple interaction modes and builds on open-source packages. It empowers model developers and testers to operationalize explainability, fairness, auditability, and security. The initial release focuses on text data and models, with plans for expansion. Explabox's code and documentation are available open-source at https://explabox.readthedocs.io/.

Contrastive Explanations for Argumentation-Based Conclusions

Jul 07, 2021

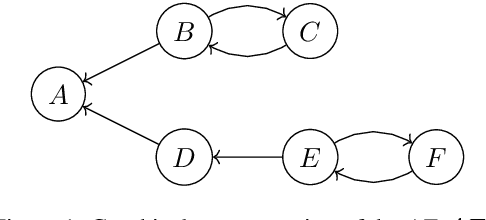

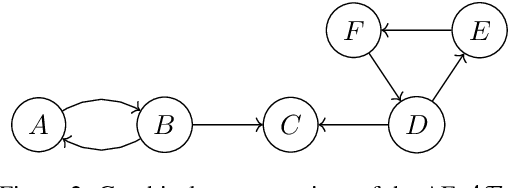

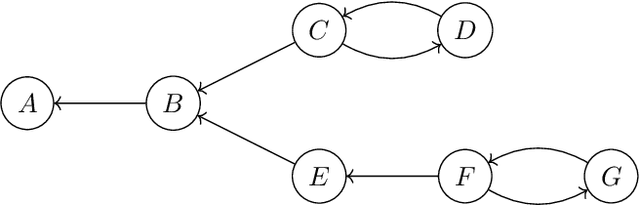

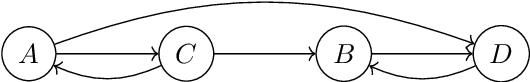

Abstract: In this paper we discuss contrastive explanations for formal argumentation - the question why a certain argument (the fact) can be accepted, whilst another argument (the foil) cannot be accepted under various extension-based semantics. The recent work on explanations for argumentation-based conclusions has mostly focused on providing minimal explanations for the (non-)acceptance of arguments. What is still lacking, however, is a proper argumentation-based interpretation of contrastive explanations. We show under which conditions contrastive explanations in abstract and structured argumentation are meaningful, and how argumentation allows us to make implicit foils explicit.

Necessary and Sufficient Explanations in Abstract Argumentation

Nov 04, 2020

Abstract: In this paper, we discuss necessary and sufficient explanations for formal argumentation - the question whether and why a certain argument can be accepted (or not) under various extension-based semantics. Given a framework with which explanations for argumentation-based conclusions can be derived, we study necessity and sufficiency: what (sets of) arguments are necessary or sufficient for the (non-)acceptance of an argument?

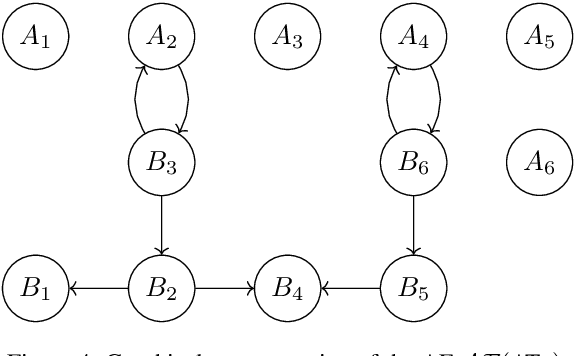

Deep Learning for Abstract Argumentation Semantics

Jul 16, 2020

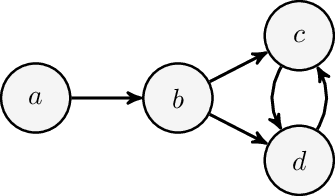

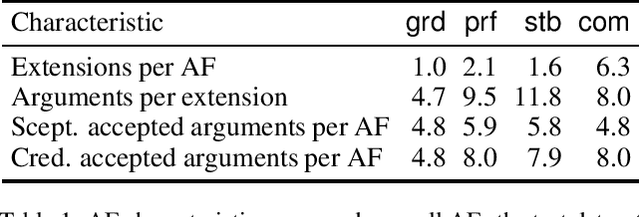

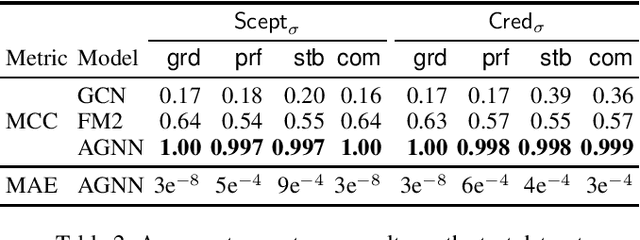

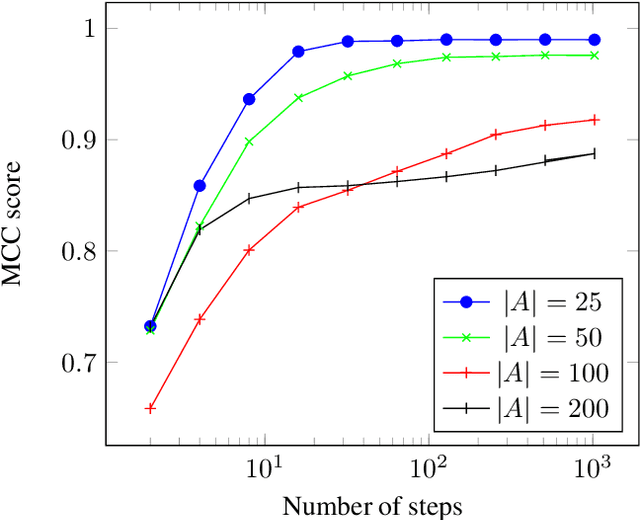

Abstract: In this paper, we present a learning-based approach to determining acceptance of arguments under several abstract argumentation semantics. More specifically, we propose an argumentation graph neural network (AGNN) that learns a message-passing algorithm to predict the likelihood of an argument being accepted. The experimental results demonstrate that the AGNN can almost perfectly predict the acceptability under different semantics and scales well for larger argumentation frameworks. Furthermore, analysing the behaviour of the message-passing algorithm shows that the AGNN learns to adhere to basic principles of argument semantics as identified in the literature, and can thus be trained to predict extensions under the different semantics - we show how the latter can be done for multi-extension semantics by using AGNNs to guide a basic search. We publish our code at https://github.com/DennisCraandijk/DL-Abstract-Argumentation
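To make "acceptance under an abstract argumentation semantics" concrete, the sketch below computes the grounded extension of a small framework exactly, by iterating the standard characteristic function from the empty set. It is not the AGNN or any code from the linked repository; the function name `grounded_extension` and the example framework are hypothetical, chosen only to illustrate the kind of target a learned predictor would approximate.

```python
from typing import Iterable, Set, Tuple


def grounded_extension(
    arguments: Iterable[str], attacks: Iterable[Tuple[str, str]]
) -> Set[str]:
    """Return the grounded extension of the framework (arguments, attacks).

    Illustrative helper (an assumption, not from the paper): an argument is
    added once every one of its attackers is attacked by an accepted argument.
    """
    arguments = set(arguments)
    attacks = set(attacks)

    # Map each argument to the set of arguments attacking it.
    attackers = {a: set() for a in arguments}
    for source, target in attacks:
        attackers[target].add(source)

    accepted: Set[str] = set()
    while True:
        # Arguments attacked by something currently accepted.
        defeated = {target for source, target in attacks if source in accepted}
        # Characteristic function: keep arguments whose attackers are all defeated.
        defended = {a for a in arguments if attackers[a] <= defeated}
        if defended == accepted:
            return accepted
        accepted = defended


# Hypothetical framework: a -> b -> c -> d, plus a mutual attack e <-> f.
example_arguments = {"a", "b", "c", "d", "e", "f"}
example_attacks = {("a", "b"), ("b", "c"), ("c", "d"), ("e", "f"), ("f", "e")}
result = grounded_extension(example_arguments, example_attacks)
```

Here `a` is unattacked and therefore accepted, which defeats `b` and so defends `c`; `d` stays out because its attacker `c` is accepted, and neither `e` nor `f` is accepted because nothing outside the cycle resolves their mutual attack. Multi-extension semantics such as preferred or stable need a search over candidate sets rather than a single fixed-point iteration.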
