Flavio Di Martino

Challenges and Limitations in the Synthetic Generation of mHealth Sensor Data

May 20, 2025

Abstract: The widespread adoption of mobile sensors has the potential to provide massive and heterogeneous time series data, driving Artificial Intelligence applications in mHealth. However, data collection remains limited due to stringent ethical regulations, privacy concerns, and other constraints, hindering progress in the field. Synthetic data generation, particularly through Generative Adversarial Networks and Diffusion Models, has emerged as a promising solution to address both data scarcity and privacy issues. Yet, these models are often limited to short-term, unimodal signal patterns. This paper presents a systematic evaluation of state-of-the-art generative models for time series synthesis, with a focus on their ability to jointly handle multi-modality, long-range dependencies, and conditional generation, all key challenges in the mHealth domain. To ensure a fair comparison, we introduce a novel evaluation framework designed to measure both the intrinsic quality of synthetic data and its utility in downstream predictive tasks. Our findings reveal critical limitations in the existing approaches, particularly in maintaining cross-modal consistency, preserving temporal coherence, and ensuring robust performance in train-on-synthetic, test-on-real, and data augmentation scenarios. Finally, we present our future research directions to enhance synthetic time series generation and improve the applicability of generative models in mHealth.

Explainable AI for Malnutrition Risk Prediction from m-Health and Clinical Data

May 31, 2023

Abstract: Malnutrition is a serious and prevalent health problem in the older population, especially in hospitalised or institutionalised subjects. Accurate and early risk detection is essential for malnutrition management and prevention. M-health services empowered with Artificial Intelligence (AI) may lead to important improvements in terms of more automatic, objective, and continuous monitoring and assessment. Moreover, the latest Explainable AI (XAI) methodologies may make AI decisions interpretable and trustworthy for end users. This paper presents a novel AI framework for early and explainable malnutrition risk detection based on heterogeneous m-health data. We performed an extensive model evaluation including both subject-independent and personalised predictions, and the obtained results indicate Random Forest (RF) and Gradient Boosting as the best performing classifiers, especially when incorporating body composition assessment data. We also investigated several benchmark XAI methods to extract global model explanations. Model-specific explanation consistency assessment indicates that each selected model privileges similar subsets of the most relevant predictors, with the highest agreement shown between SHapley Additive ExPlanations (SHAP) and the feature permutation method. Furthermore, we performed a preliminary clinical validation to verify that the learned feature-output trends are compliant with the current evidence-based assessment.

Explainable AI for clinical and remote health applications: a survey on tabular and time series data

Sep 14, 2022

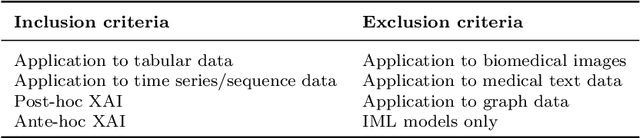

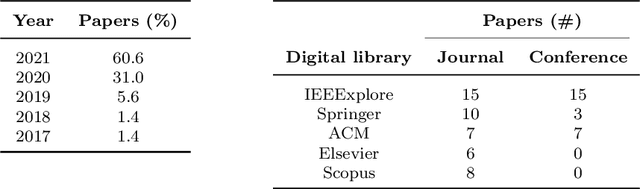

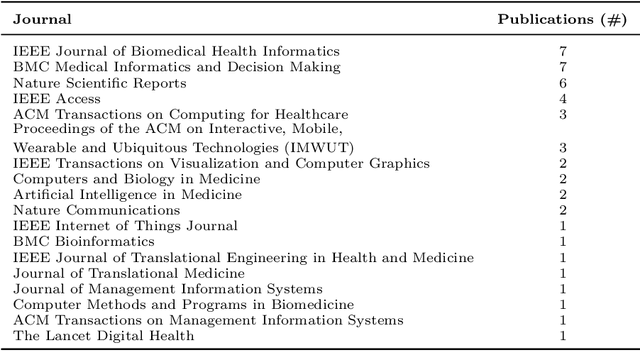

Abstract: Nowadays Artificial Intelligence (AI) has become a fundamental component of healthcare applications, both clinical and remote, but the best performing AI systems are often too complex to be self-explaining. Explainable AI (XAI) techniques are defined to unveil the reasoning behind the system's predictions and decisions, and they become even more critical when dealing with sensitive and personal health data. It is worth noting that XAI has not gathered the same attention across different research areas and data types, especially in healthcare. In particular, many clinical and remote health applications are based on tabular and time series data, respectively, and XAI is not commonly analysed on these data types, while computer vision and Natural Language Processing (NLP) are the reference applications. To provide an overview of XAI methods that are most suitable for tabular and time series data in the healthcare domain, this paper provides a review of the literature in the last 5 years, illustrating the type of generated explanations and the efforts provided to evaluate their relevance and quality. Specifically, we identify clinical validation, consistency assessment, objective and standardised quality evaluation, and human-centered quality assessment as key features to ensure effective explanations for the end users. Finally, we highlight the main research challenges in the field as well as the limitations of existing XAI methods.
