Felix Schwock

Complex Clipping for Improved Generalization in Machine Learning

Feb 27, 2023

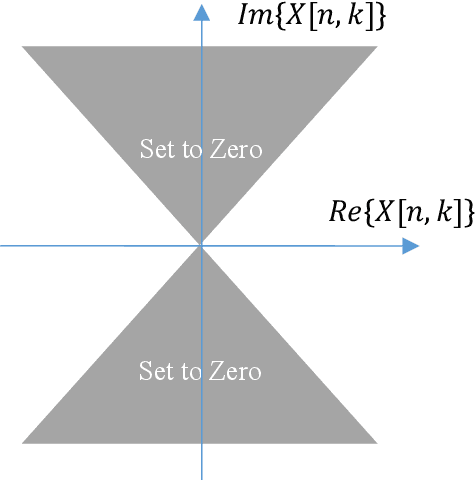

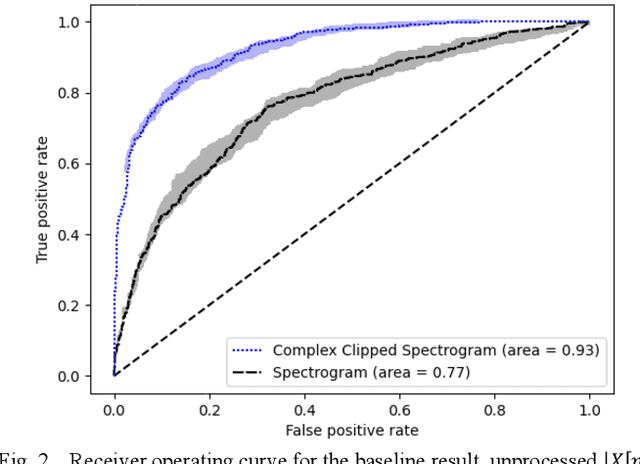

Abstract: For many machine learning applications, a common input representation is a spectrogram. The underlying representation for a spectrogram is a short-time Fourier transform (STFT), which gives complex values. The spectrogram uses the magnitude of these complex values, a commonly used detector. Modern machine learning systems are commonly overparameterized, where possible ill-conditioning problems are ameliorated by regularization. The common use of rectified linear unit (ReLU) activation functions between layers of a deep net has been shown to help this regularization, improving system performance. We extend this idea of ReLU activation to detection for the complex STFT, providing a simple-to-compute modified and regularized spectrogram, which potentially results in better-behaved training. We then confirm the benefit of this approach on a noisy acoustic data set used for a real-world application. Generalization performance improved substantially. This approach might benefit other applications that use time-frequency mappings, in acoustics, audio, and other domains.
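The abstract does not spell out the clipping rule, so the following is only a rough sketch of the general idea under a stated assumption: that "ReLU-style detection" means clipping the real and imaginary parts of each STFT coefficient at zero before taking the magnitude. That rule, the helper names (`stft`, `clipped_spectrogram`), the frame parameters, and the synthetic test signal are all hypothetical and may differ from what the paper actually does.

```python
import numpy as np


def stft(x, frame_len=256, hop=128):
    """Naive STFT: Hann-windowed frames followed by a one-sided FFT.

    Returns a complex array of shape (n_frames, frame_len // 2 + 1).
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack(
        [x[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.fft.rfft(frames, axis=1)


def magnitude_spectrogram(X):
    """Conventional detector: magnitude of each complex STFT coefficient."""
    return np.abs(X)


def clipped_spectrogram(X):
    """ASSUMED clipping rule (not confirmed by the abstract):
    apply ReLU to the real and imaginary parts separately,
    then take the magnitude of the clipped coefficient.
    """
    re = np.maximum(X.real, 0.0)
    im = np.maximum(X.imag, 0.0)
    return np.hypot(re, im)


# Synthetic noisy tone, purely for illustration (not the paper's data).
rng = np.random.default_rng(0)
t = np.arange(4096) / 8000.0
x = np.sin(2 * np.pi * 440.0 * t) + 0.5 * rng.standard_normal(t.size)

X = stft(x)
S_mag = magnitude_spectrogram(X)
S_clip = clipped_spectrogram(X)
```

Under this assumed rule, `S_clip` is never larger than `S_mag` and zeroes out coefficients whose real and imaginary parts are both negative, which is one way a ReLU-like detector could act as a regularizer on the input features.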

Estimating and Analyzing Neural Flow Using Signal Processing on Graphs

May 27, 2022

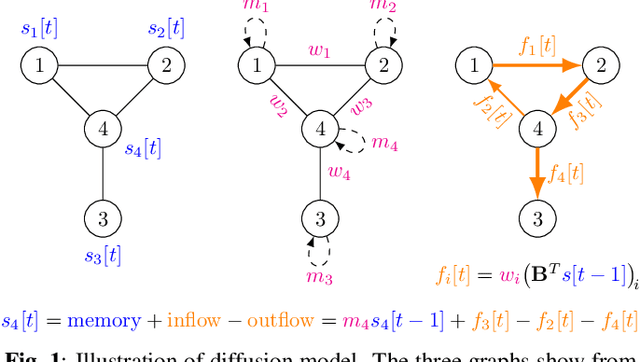

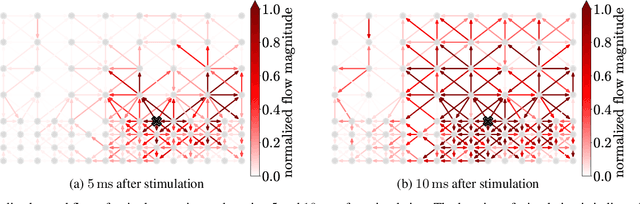

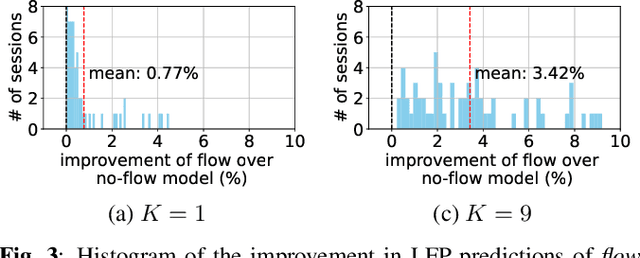

Abstract: Neural communication is fundamentally linked to the brain's overall state and health status. We demonstrate how communication in the brain can be estimated from recorded neural activity using concepts from graph signal processing. The communication is modeled as a flow signal on the edges of a graph and naturally arises from a graph diffusion process. We apply the diffusion model to local field potential (LFP) measurements of brain activity of two non-human primates to estimate the communication flow during a stimulation experiment. Comparisons with a baseline model demonstrate that adding the neural flow can improve LFP predictions. Finally, we demonstrate how the neural flow can be decomposed into a gradient and rotational component and show that the gradient component depends on the location of stimulation.
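As a minimal sketch of the decomposition mentioned at the end of the abstract, an edge flow can be split by projecting it onto the gradient space of the graph (the range of the transposed incidence matrix) and treating the remainder as the rotational part. The toy graph, the flow values, and the function name `decompose_flow` are hypothetical, and the paper may define the rotational component differently (for example, via explicit cycles or triangles); here it is simply the divergence-free remainder.

```python
import numpy as np

# Hypothetical toy graph: 4 nodes, 5 directed edges given as (tail, head).
edges = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 0)]
n_nodes = 4

# Node-to-edge incidence matrix B: -1 at the tail, +1 at the head.
B = np.zeros((n_nodes, len(edges)))
for j, (tail, head) in enumerate(edges):
    B[tail, j] = -1.0
    B[head, j] = 1.0


def decompose_flow(B, f):
    """Split edge flow f into a gradient part and a divergence-free remainder.

    The gradient part is the least-squares projection of f onto the range
    of B.T, i.e. flows that are differences of a node potential.
    """
    potentials = np.linalg.lstsq(B.T, f, rcond=None)[0]
    grad = B.T @ potentials
    rot = f - grad  # assumed "rotational" part: everything not explained by potentials
    return grad, rot


# Arbitrary example flow values on the 5 edges.
f = np.array([1.0, 2.0, -0.5, 0.3, 1.2])
grad, rot = decompose_flow(B, f)
```

By construction the two parts sum to the original flow, are orthogonal to each other, and the remainder has zero net flow at every node (`B @ rot` is numerically zero).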
