Farzana Yasmin Ahmad

A Comprehensive Evaluation of Generative Models in Calorimeter Shower Simulation

Jun 08, 2024

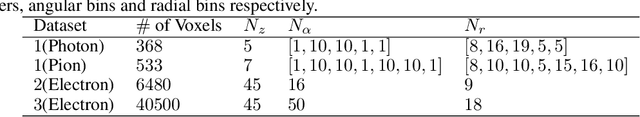

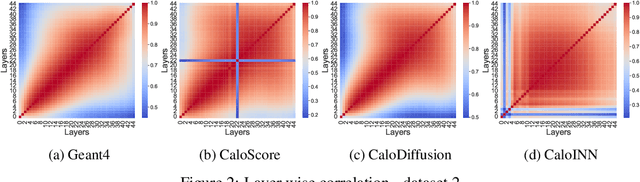

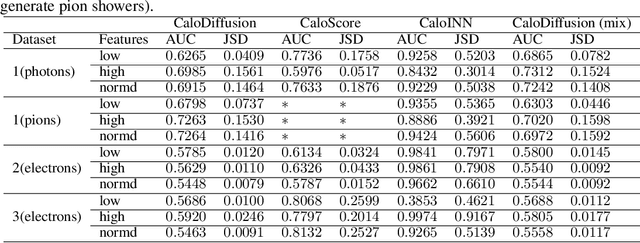

Abstract: The pursuit of understanding fundamental particle interactions has reached unparalleled levels of precision. Particle physics detectors play a crucial role in generating low-level object signatures that encode collision physics. However, simulating these particle collisions is demanding in terms of memory and computation, a cost that will be exacerbated by larger data volumes, more complex detectors, and a higher pileup environment in the High-Luminosity LHC. The introduction of "Fast Simulation" has been pivotal in overcoming computational bottlenecks. The use of deep generative models has sparked a surge of interest in surrogate modeling for detector simulations, generating particle showers that closely resemble the observed data. Nonetheless, there is a pressing need for a comprehensive evaluation of their performance using a standardized set of metrics. In this study, we conducted a rigorous evaluation of three generative models using standard datasets and a diverse set of metrics derived from physics, computer vision, and statistics. Furthermore, we explored the impact of using full versus mixed precision modes during inference. Our evaluation revealed that the CaloDiffusion and CaloScore generative models demonstrate the most accurate simulation of particle showers, yet there remains substantial room for improvement. Our findings identified areas where the evaluated models fell short in accurately replicating Geant4 data.

PCV: A Point Cloud-Based Network Verifier

Jan 30, 2023

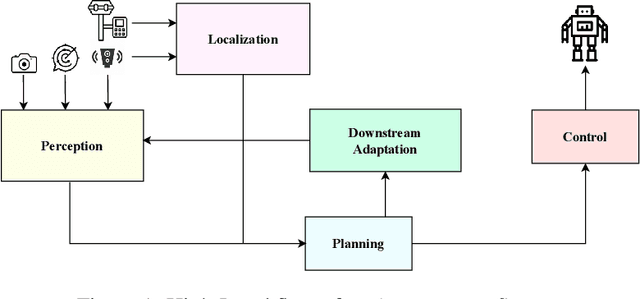

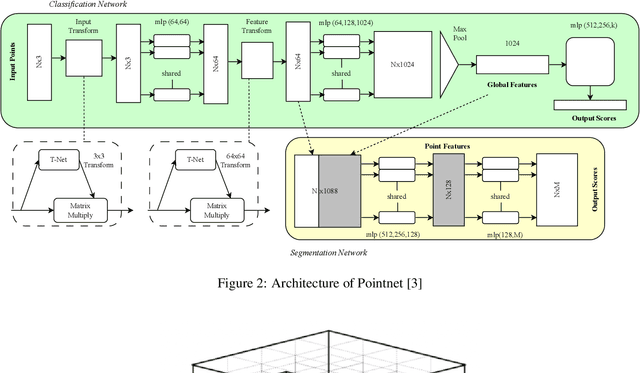

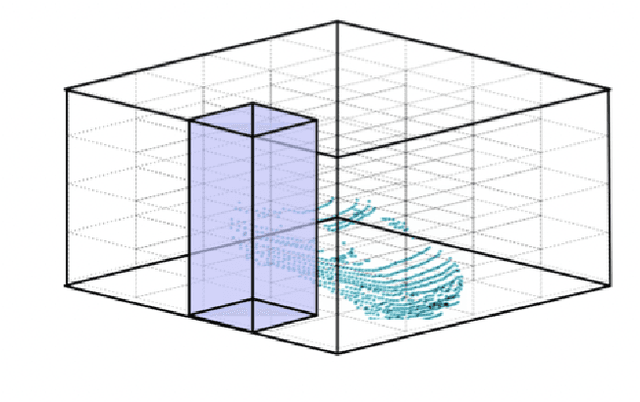

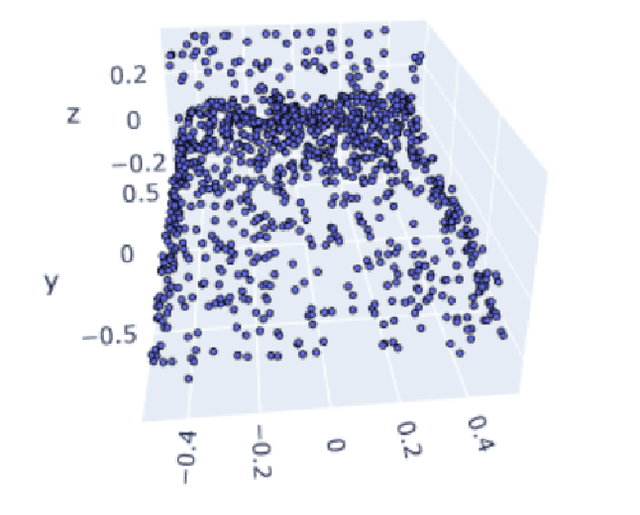

Abstract: 3D vision with real-time LiDAR-based point cloud data has become a vital part of autonomous system research, especially in the perception and prediction modules used for object classification, segmentation, and detection. Despite their success, point cloud-based network models are vulnerable to multiple adversarial attacks, in which changing certain factors in the validation set causes a significant performance drop in well-trained networks. Most existing verifiers work perfectly on 2D convolution. Owing to the complex architecture, the dimension of the hyper-parameters, and 3D convolution, no existing verifier can perform basic layer-wise verification. It is difficult to draw conclusions about the robustness of a 3D vision model without performing verification, because there will always be corner cases and adversarial inputs that can compromise the model's effectiveness. In this project, we describe a point cloud-based network verifier that successfully handles the state-of-the-art 3D classifier PointNet and verifies its robustness by generating adversarial inputs. We use properties extracted from the trained PointNet and change certain factors to produce perturbed inputs. We calculate the impact on model accuracy versus property factor and can test the PointNet network's robustness against a small collection of perturbed input states resulting from adversarial attacks such as the proposed hybrid reverse signed attack. The experimental results reveal that the resilience property of PointNet is affected by our hybrid reverse signed perturbation strategy.
