Farhan Rahman Wasee

Predicting Surface Reflectance Properties of Outdoor Scenes Under Unknown Natural Illumination

May 14, 2021

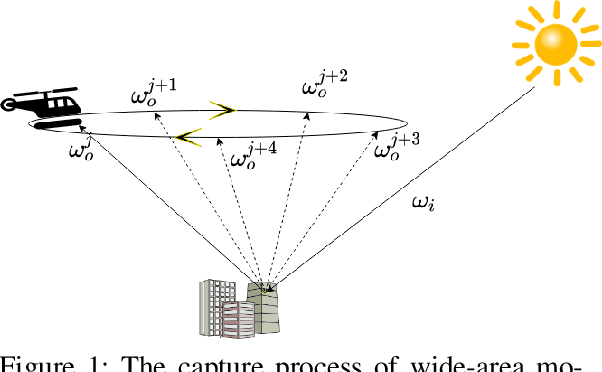

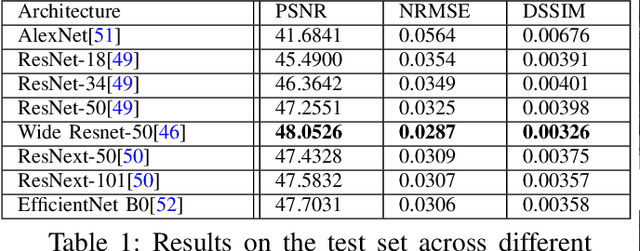

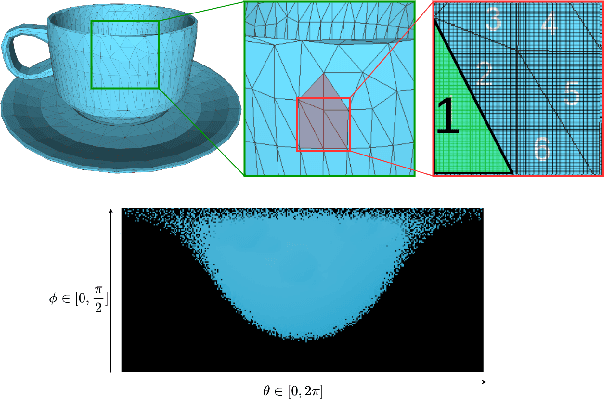

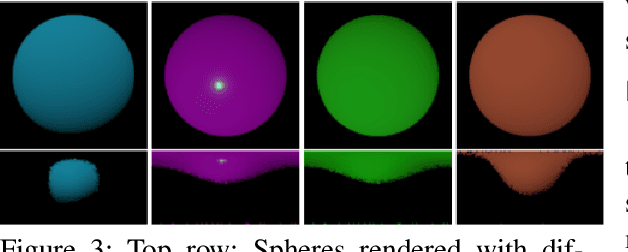

Abstract: Estimating and modelling the appearance of an object under outdoor illumination is a complex process. Although several studies address illumination estimation and relighting, very few focus on estimating the reflectance properties of outdoor objects and scenes. This paper addresses that problem and proposes a complete framework for predicting the surface reflectance properties of outdoor scenes under unknown natural illumination. Uniquely, we recast the problem into two constituent components built around the incoming light and outgoing view directions of the BRDF: (i) the radiance of surface points captured in the images and the corresponding outgoing view directions are aggregated and encoded into reflectance maps, and (ii) a neural network, trained on reflectance maps rendered from a unit sphere under arbitrary light directions, infers a low-parameter reflection model representing the reflectance properties at each surface in the scene. Our model combines phenomenological and physics-based scattering models and can relight scenes from novel viewpoints. Our experiments show that rendering with the predicted reflectance properties produces an appearance visually similar to rendering with textures that cannot otherwise be disentangled from the reflectance properties.
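As a rough illustration of step (i), the following minimal sketch shows one way radiance samples and view directions could be aggregated into a reflectance map. The abstract does not specify the encoding, so everything here is an assumption: the function name `build_reflectance_map`, the local frame with the surface normal along +z, the orthographic projection of the hemisphere onto a square grid, and the per-cell averaging are all hypothetical choices, not the paper's actual implementation.

```python
import numpy as np

def build_reflectance_map(view_dirs, radiance, size=32):
    """Average RGB radiance samples into a size x size grid indexed by view direction.

    Hypothetical helper, not taken from the paper.
    view_dirs: (N, 3) unit vectors in a local frame where +z is the surface normal.
    radiance:  (N, 3) RGB radiance observed along each direction.
    Returns (refl_map, counts): refl_map is (size, size, 3), counts is (size, size).
    """
    view_dirs = np.asarray(view_dirs, dtype=np.float64)
    radiance = np.asarray(radiance, dtype=np.float64)

    # Keep only directions on the visible hemisphere (z > 0).
    keep = view_dirs[:, 2] > 0.0
    d, r = view_dirs[keep], radiance[keep]

    # Assumed encoding: orthographic projection, mapping x and y from [-1, 1] to grid cells.
    cols = np.clip(((d[:, 0] + 1.0) * 0.5 * size).astype(int), 0, size - 1)
    rows = np.clip(((d[:, 1] + 1.0) * 0.5 * size).astype(int), 0, size - 1)

    # Accumulate sums and sample counts per cell; np.add.at handles repeated indices.
    sums = np.zeros((size, size, 3))
    counts = np.zeros((size, size))
    np.add.at(sums, (rows, cols), r)
    np.add.at(counts, (rows, cols), 1.0)

    # Average where at least one sample landed; empty cells stay zero.
    refl_map = np.zeros_like(sums)
    filled = counts > 0
    refl_map[filled] = sums[filled] / counts[filled][:, None]
    return refl_map, counts
```

In this sketch, cells with no observations are left at zero and reported through `counts`, so a downstream model could distinguish missing data from genuinely dark radiance. How the paper handles sparse or missing directions is not stated in the abstract.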
