Fantin Girard

End-to-End Deep Learning Model for Cardiac Cycle Synchronization from Multi-View Angiographic Sequences

Sep 04, 2020

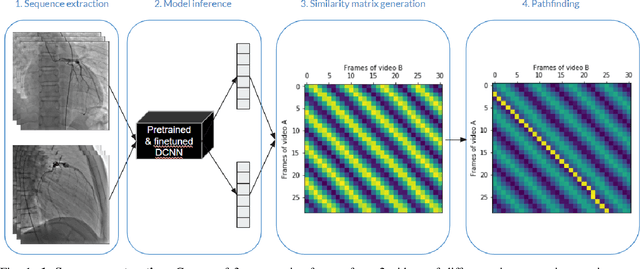

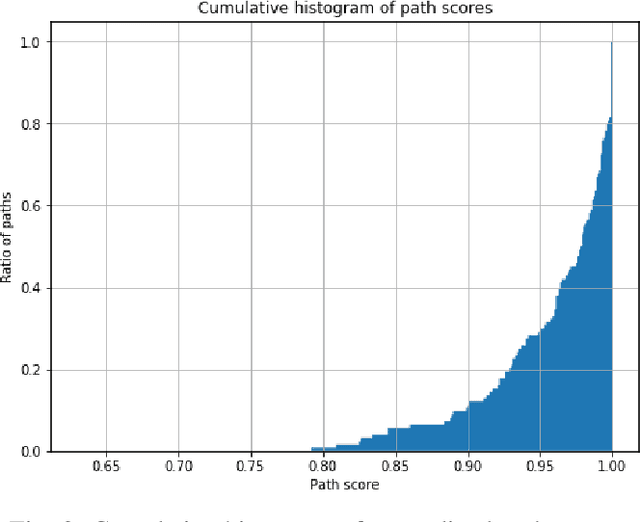

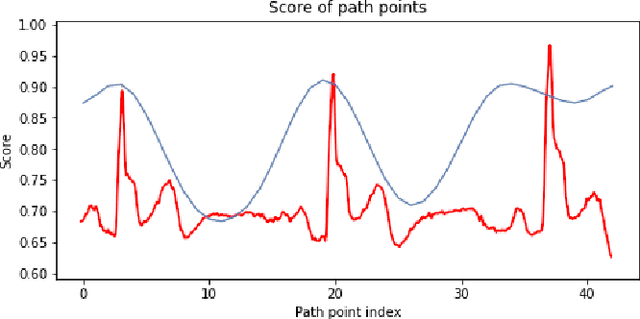

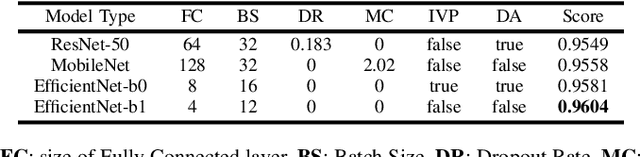

Abstract: Dynamic reconstructions (3D+T) of coronary arteries could give important perfusion details to clinicians. Temporal matching of the different views, which may not be acquired simultaneously, is a prerequisite for accurate stereo-matching of the coronary segments. In this paper, we show how a neural network can be trained from angiographic sequences to synchronize different views during the cardiac cycle using raw X-ray angiography videos exclusively. First, we train a neural network model with angiographic sequences to extract features describing the progression of the cardiac cycle. Then, we compute the distance between the feature vectors of every frame from the first view and those from the second view to generate distance maps that display stripe patterns. Using pathfinding, we extract the best temporally coherent associations between each frame of both videos. Finally, we compare the synchronized frames of an evaluation set with the ECG signals to show an alignment with 96.04% accuracy.
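The distance-map and pathfinding steps described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the Euclidean distance, the dynamic-programming path search (in the style of dynamic time warping), the function names `distance_map` and `monotone_path`, and the synthetic sine/cosine features standing in for network outputs are all assumptions made for illustration. The sketch also forces the path to start and end at the first and last frames of both sequences, which real, unsynchronized acquisitions would not guarantee.

```python
import numpy as np


def distance_map(feats_a, feats_b):
    """Pairwise Euclidean distances between per-frame feature vectors.

    feats_a has shape (n, d), feats_b has shape (m, d); the result is (n, m).
    """
    diff = feats_a[:, None, :] - feats_b[None, :, :]
    return np.linalg.norm(diff, axis=2)


def monotone_path(dist):
    """Minimum-cost monotone path from (0, 0) to (n-1, m-1).

    Allowed moves are one step down, right, or diagonally, so the frame
    associations stay temporally coherent (an assumed stand-in for the
    pathfinding step mentioned in the abstract).
    """
    n, m = dist.shape
    cost = np.full((n, m), np.inf)
    cost[0, 0] = dist[0, 0]
    for i in range(n):
        for j in range(m):
            if i == 0 and j == 0:
                continue
            best = min(
                cost[i - 1, j] if i > 0 else np.inf,
                cost[i, j - 1] if j > 0 else np.inf,
                cost[i - 1, j - 1] if i > 0 and j > 0 else np.inf,
            )
            cost[i, j] = dist[i, j] + best

    # Backtrack from the end, always stepping to the cheapest predecessor.
    i, j = n - 1, m - 1
    path = [(i, j)]
    while (i, j) != (0, 0):
        candidates = []
        if i > 0 and j > 0:
            candidates.append((cost[i - 1, j - 1], i - 1, j - 1))
        if i > 0:
            candidates.append((cost[i - 1, j], i - 1, j))
        if j > 0:
            candidates.append((cost[i, j - 1], i, j - 1))
        _, i, j = min(candidates)
        path.append((i, j))
    return path[::-1]


# Synthetic features: each frame is encoded by its (hypothetical) cardiac phase.
phase = np.linspace(0.0, 1.0, 8, endpoint=False)
feats = np.stack([np.sin(2 * np.pi * phase), np.cos(2 * np.pi * phase)], axis=1)

dist = distance_map(feats, feats)
path = monotone_path(dist)
```

With two identical sequences the recovered path is the main diagonal, meaning each frame is associated with the frame at the same index in the other view.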

Joint segmentation and classification of retinal arteries/veins from fundus images

Mar 04, 2019

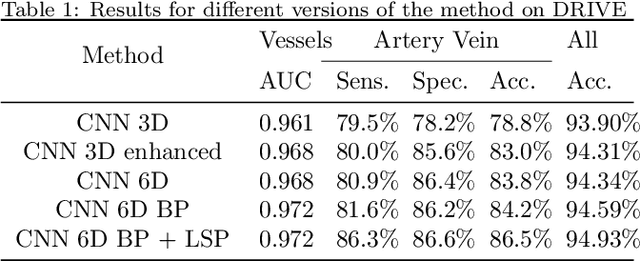

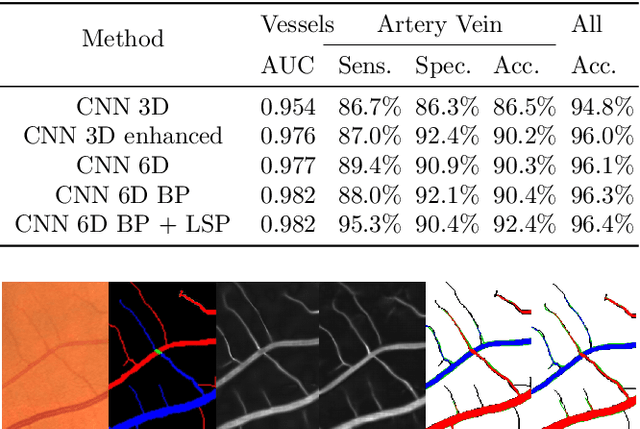

Abstract: Objective: Automatic artery/vein (A/V) segmentation from fundus images is required to track blood vessel changes occurring with many pathologies, including retinopathy and cardiovascular pathologies. One of the clinical measures that quantifies vessel changes is the arterio-venous ratio (AVR), which represents the ratio between artery and vein diameters. This measure depends significantly on the accuracy of vessel segmentation and classification into arteries and veins. This paper proposes a fast, novel method for semantic A/V segmentation combining deep learning and graph propagation. Methods: A convolutional neural network (CNN) is proposed to jointly segment and classify vessels into arteries and veins. The initial CNN labeling is propagated through a graph representation of the retinal vasculature, whose nodes are defined as the vessel branches and whose edges are weighted by the cost of linking pairs of branches. To efficiently propagate the labels, the graph is simplified into its minimum spanning tree. Results: The method achieves an accuracy of 94.8% for vessel segmentation. The A/V classification achieves a specificity of 92.9% with a sensitivity of 93.7% on the CT-DRIVE database, compared with the state-of-the-art specificity and sensitivity, both 91.7%. Conclusion: The results show that our method outperforms the leading previous works on a public dataset for A/V classification and is by far the fastest. Significance: The proposed global AVR, calculated on the whole fundus image using our automatic A/V segmentation method, can better track vessel changes associated with diabetic retinopathy than the standard local AVR calculated only around the optic disc.

* Preprint accepted in Artificial Intelligence in Medicine
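
The graph simplification and label propagation outlined in the Methods part of the abstract can be sketched as follows. The abstract does not state the propagation rule, so this example swaps in a simple assumption: labels from a few confidently classified branches (the `seeds`) spread along minimum-spanning-tree edges, cheapest edge first. The function names, the toy graph, and its edge costs are hypothetical and not taken from the paper.

```python
import heapq


def minimum_spanning_tree(n_nodes, edges):
    """Kruskal's algorithm; edges are (cost, u, v) tuples."""
    parent = list(range(n_nodes))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree = []
    for cost, u, v in sorted(edges):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:
            parent[root_u] = root_v
            tree.append((cost, u, v))
    return tree


def propagate_labels(n_nodes, tree, seeds):
    """Spread seed labels along tree edges, cheapest edge first.

    This rule is an illustrative assumption, not the paper's method.
    """
    adjacency = {i: [] for i in range(n_nodes)}
    for cost, u, v in tree:
        adjacency[u].append((cost, v))
        adjacency[v].append((cost, u))

    labels = dict(seeds)
    heap = [(cost, v, label)
            for u, label in seeds.items()
            for cost, v in adjacency[u]]
    heapq.heapify(heap)
    while heap:
        cost, v, label = heapq.heappop(heap)
        if v in labels:
            continue  # already labeled through a cheaper link
        labels[v] = label
        for next_cost, w in adjacency[v]:
            if w not in labels:
                heapq.heappush(heap, (next_cost, w, label))
    return labels


# Toy graph: 5 vessel branches; a low cost means two branches likely connect.
edges = [(0.1, 0, 1), (0.2, 1, 2), (0.9, 2, 3),
         (0.1, 3, 4), (0.8, 0, 2), (0.95, 1, 3)]
tree = minimum_spanning_tree(5, edges)
labels = propagate_labels(5, tree, seeds={0: "artery", 4: "vein"})
```

In this toy graph, branches 1 and 2 inherit the artery label from branch 0 and branch 3 inherits the vein label from branch 4, because the expensive edge between branches 2 and 3 is reached last.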
