Faisal Mohammad

Semiconductor Wafer Map Defect Classification with Tiny Vision Transformers

Apr 03, 2025

Abstract: Semiconductor wafer defect classification is critical for ensuring high precision and yield in manufacturing. Traditional CNN-based models often struggle with class imbalance and with recognizing multiple overlapping defect types in wafer maps. To address these challenges, we propose ViT-Tiny, a lightweight Vision Transformer (ViT) framework optimized for wafer defect classification. Trained on the WM-38k dataset, ViT-Tiny outperforms its ViT-Base counterpart and state-of-the-art (SOTA) models, such as MSF-Trans and CNN-based architectures. Through extensive ablation studies, we determine that a patch size of 16 provides optimal performance. ViT-Tiny achieves an F1-score of 98.4%, surpassing MSF-Trans by 2.94% in four-defect classification, improving recall by 2.86% in two-defect classification, and increasing precision by 3.13% in three-defect classification. Additionally, it demonstrates enhanced robustness under limited labeled data conditions, making it a computationally efficient and reliable solution for real-world semiconductor defect detection.
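To make the patch-size setting concrete, the sketch below shows how patch size determines the number of tokens a ViT processes. It is a minimal illustration, not the paper's code: the function name `vit_sequence_length`, the square 224-pixel input, and the single class token are assumptions, since the abstract states neither the input resolution nor the token layout.

```python
def vit_sequence_length(image_size, patch_size, use_class_token=True):
    """Return the token count for a square image split into square patches.

    Hypothetical helper: image_size and use_class_token are assumptions,
    not values taken from the abstract.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    num_patches = patches_per_side * patches_per_side
    # A standard ViT prepends one learnable class token to the patch tokens.
    return num_patches + (1 if use_class_token else 0)


# Assumed 224x224 input with the patch size of 16 reported in the abstract.
tokens = vit_sequence_length(224, 16)
```

Under these assumptions, halving the patch size quadruples the number of patch tokens, which is one reason patch size is a natural ablation target for a lightweight model.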

Multimodal Learning for Just-In-Time Software Defect Prediction in Autonomous Driving Systems

Feb 28, 2025

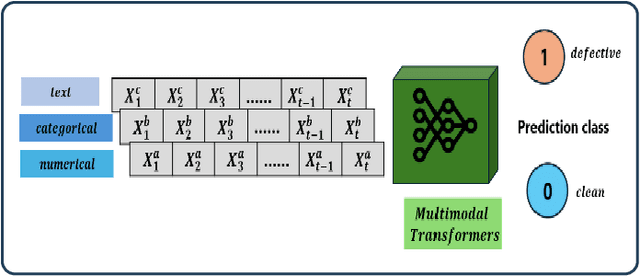

Abstract: In recent years, the rise of autonomous driving technologies has highlighted the critical importance of reliable software for ensuring safety and performance. This paper proposes a novel approach for just-in-time software defect prediction (JIT-SDP) in autonomous driving software systems using multimodal learning. The proposed model uses multimodal transformers, in which pre-trained transformers and a combining module handle the multiple data modalities of software system datasets, such as code features, change metrics, and contextual information. The key to adapting multimodal learning is to apply the attention mechanism across the different data modalities: text, numerical, and categorical. In the combining module, the output of a transformer model on text data is combined with tabular features containing categorical and numerical data, and fully connected layers produce the predictions. Experiments conducted on three open-source autonomous driving software projects collected from GitHub (Apollo, Carla, and Donkeycar) demonstrate that the proposed approach significantly outperforms state-of-the-art deep learning and machine learning models on the evaluation metrics. Our findings highlight the potential of multimodal learning to enhance the reliability and safety of autonomous driving software through improved defect prediction.
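The combining step described in the abstract can be sketched roughly as follows. This is a simplified illustration, not the authors' model: it assumes the text embedding and tabular features are merged by plain concatenation and passed through a single fully connected layer with a sigmoid, whereas the abstract also mentions attention between modalities. The name `combine_and_predict` and all parameters are hypothetical.

```python
import math


def combine_and_predict(text_embedding, tabular_features, weights, bias):
    """Concatenate two modality vectors, then apply one dense layer + sigmoid.

    Hypothetical sketch: concatenation and a single layer are assumptions;
    the paper's combining module may differ.
    """
    combined = list(text_embedding) + list(tabular_features)
    if len(combined) != len(weights):
        raise ValueError("weights must match the combined feature length")
    logit = sum(w * x for w, x in zip(weights, combined)) + bias
    # Sigmoid maps the logit to a defect probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-logit))


# Made-up values: a 2-dimensional text embedding and 1 tabular feature.
probability = combine_and_predict([0.2, -0.1], [3.0], [0.5, 0.5, 0.1], -0.2)
```

In practice the text embedding would come from a pre-trained transformer and the weights would be learned; here they are fixed numbers chosen only to show the data flow.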

Short Term Load Forecasting Using Deep Neural Networks

Nov 08, 2018

Abstract: Electricity load forecasting plays an important role in energy planning tasks such as generation and distribution. However, the nonlinearity and dynamic uncertainties of the smart grid environment are the main obstacles to forecasting accuracy. A Deep Neural Network (DNN) is a set of intelligent computational algorithms that provides a comprehensive solution for modelling a complicated nonlinear relationship between input and output through multiple hidden layers. In this paper, we propose a DNN-based electricity load forecasting system to manage energy consumption efficiently. We investigate the applicability of two deep neural network architectures, Feed-forward Deep Neural Network (Deep-FNN) and Recurrent Deep Neural Network (Deep-RNN), to the New York Independent System Operator (NYISO) electricity load forecasting task. We test our algorithm with various activation functions such as Sigmoid, Hyperbolic Tangent (tanh), and Rectified Linear Unit (ReLU). The performance of the two network architectures is compared in terms of the Mean Absolute Percentage Error (MAPE) metric.
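For reference, MAPE is the mean of the absolute errors expressed as a percentage of the actual values. The sketch below uses the standard definition; the function name `mape` and the sample load values are illustrative assumptions, not data from the paper.

```python
def mape(actual, forecast):
    """Mean Absolute Percentage Error, in percent.

    Standard definition: 100 * mean(|actual - forecast| / |actual|).
    Actual values must be nonzero, which holds for typical grid load data.
    """
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast))
    return 100.0 * total / len(actual)


# Made-up hourly loads (MW) and forecasts, for illustration only.
error_percent = mape([100.0, 200.0], [110.0, 180.0])
```

Each forecast in this made-up example is off by 10% of the actual load, so the result is approximately 10%. A lower MAPE indicates a more accurate forecast.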
