Faisal Mehmood

AI in Oncology: Transforming Cancer Detection through Machine Learning and Deep Learning Applications

Jan 26, 2025

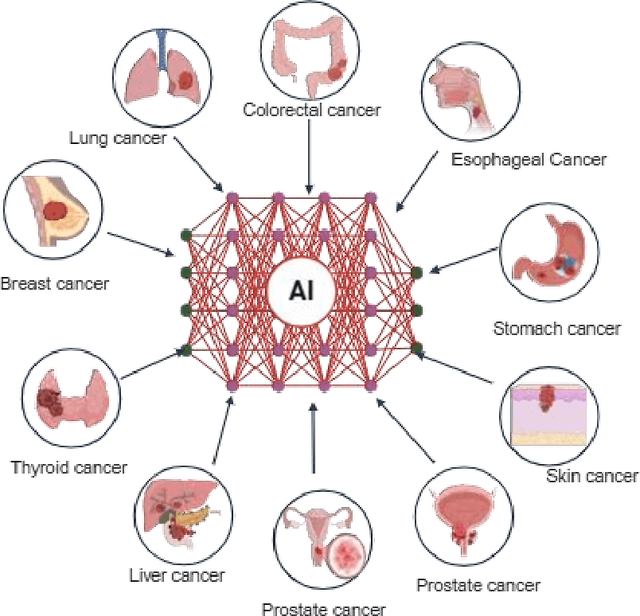

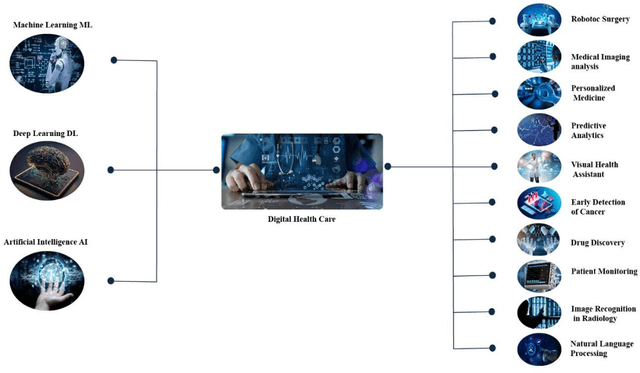

Abstract: Artificial intelligence (AI) has the potential to revolutionize oncology by enhancing the precision of cancer diagnosis, optimizing treatment strategies, and personalizing therapies for a variety of cancers. This review examines the limitations of conventional diagnostic techniques and explores the transformative role of AI in diagnosing and treating lung, breast, colorectal, liver, stomach, esophageal, cervical, thyroid, prostate, and skin cancers. The primary objective of this paper is to highlight the significant advancements that AI algorithms have brought to oncology. By enabling early cancer detection, improving diagnostic accuracy, and facilitating targeted treatment delivery, AI contributes to substantial improvements in patient outcomes. The integration of AI in medical imaging, genomic analysis, and pathology enhances diagnostic precision and introduces a novel, less invasive approach to cancer screening. This not only boosts the effectiveness of medical facilities but also reduces operational costs. The study covers the application of AI in radiomics for detailed cancer characterization, predictive analytics for identifying associated risks, and the development of algorithm-driven robots for immediate diagnosis. It also investigates the impact of AI on healthcare challenges, particularly in underserved and remote regions. The overarching goal of this work is to support the development of expert recommendations and to provide universal, efficient diagnostic procedures. By reviewing existing research and clinical studies, this paper underscores the pivotal role of AI in improving the overall cancer care system. It emphasizes how AI-enabled systems can enhance clinical decision-making and expand treatment options, and thus the importance of AI in advancing precision oncology.

Extended multi-stream temporal-attention module for skeleton-based human action recognition (HAR)

Nov 10, 2024

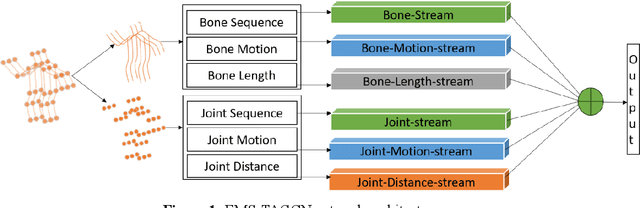

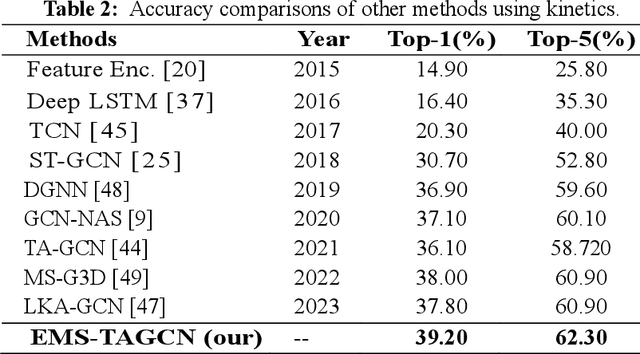

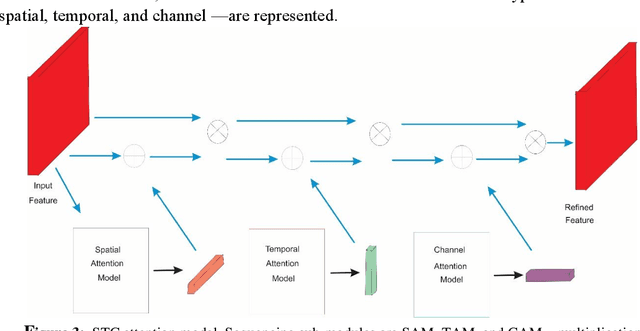

Abstract: Graph convolutional networks (GCNs) are an effective technique for skeleton-based human action recognition (HAR). GCNs generalize CNNs to more flexible non-Euclidean structures. Previous GCN-based models still have several issues: (I) The graph structure is the same for all model layers and input data.
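To make limitation (I) concrete, the following is a minimal sketch of a generic graph-convolution layer, not the authors' model. It assumes a toy 5-joint chain skeleton, hand-picked weight matrices, and the common symmetric normalization D^(-1/2) (A + I) D^(-1/2); the helper names `normalized_adjacency`, `matmul`, and `graph_conv` are hypothetical. The point is that one fixed adjacency matrix is reused by every layer and every input.

```python
import math

def normalized_adjacency(edges, num_joints):
    """Return D^-1/2 (A + I) D^-1/2 as a list of lists (self-loops included)."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)]
         for i in range(num_joints)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)]
            for i in range(num_joints)]

def matmul(p, q):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*q)]
            for row in p]

def graph_conv(x, a_norm, w):
    """One layer: ReLU(A_norm @ X @ W)."""
    return [[max(v, 0.0) for v in row]
            for row in matmul(matmul(a_norm, x), w)]

# Toy skeleton (assumption): 5 joints connected in a chain 0-1-2-3-4.
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
a_norm = normalized_adjacency(edges, 5)

# Per-joint 2-D coordinates as input features (illustrative values).
x = [[0.0, 0.0], [0.0, 1.0], [0.0, 2.0], [1.0, 2.0], [2.0, 2.0]]
w1 = [[0.5, -0.5, 1.0], [1.0, 0.5, -1.0]]    # 2 -> 3 channels
w2 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]    # 3 -> 2 channels

# Both layers reuse the same a_norm: the graph never adapts to layer or input.
h1 = graph_conv(x, a_norm, w1)
h2 = graph_conv(h1, a_norm, w2)
```

Because `a_norm[0][4]` is zero, a single layer cannot mix information between joints 0 and 4; the fixed topology decides which joints interact regardless of the action being performed.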

Human Action Recognition (HAR) Using Skeleton-based Quantum Spatial Temporal Relative Transformer Network: ST-RTR

Oct 31, 2024

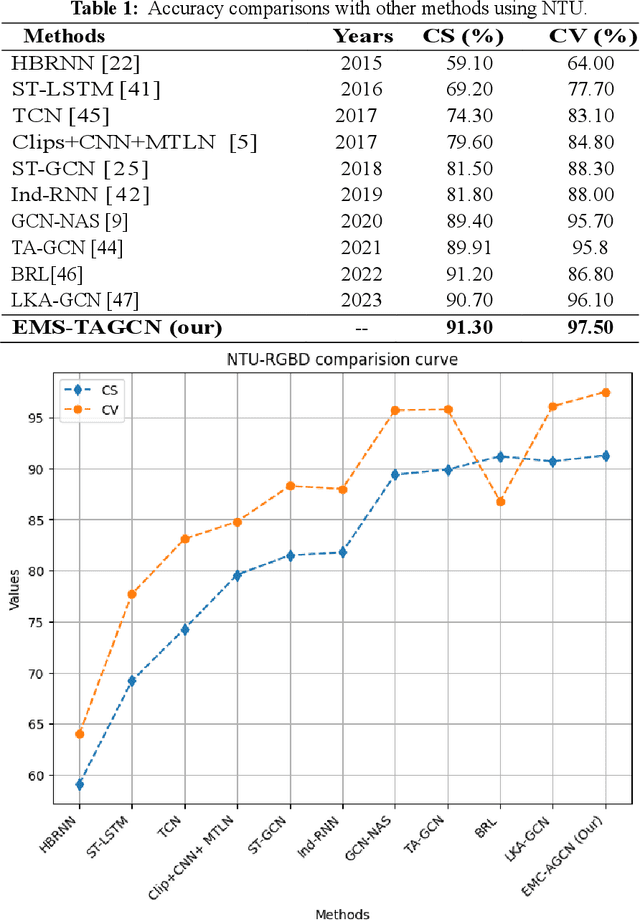

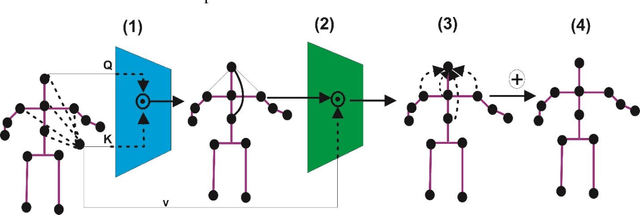

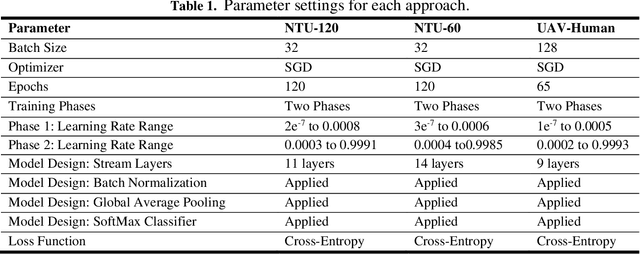

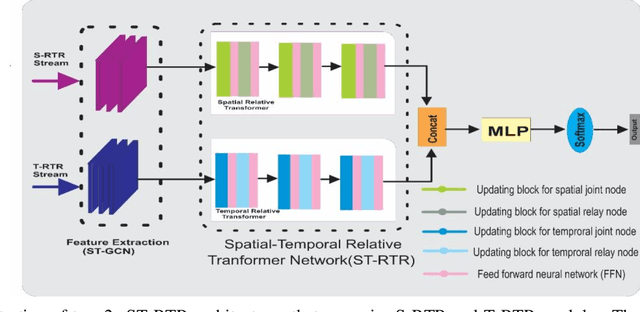

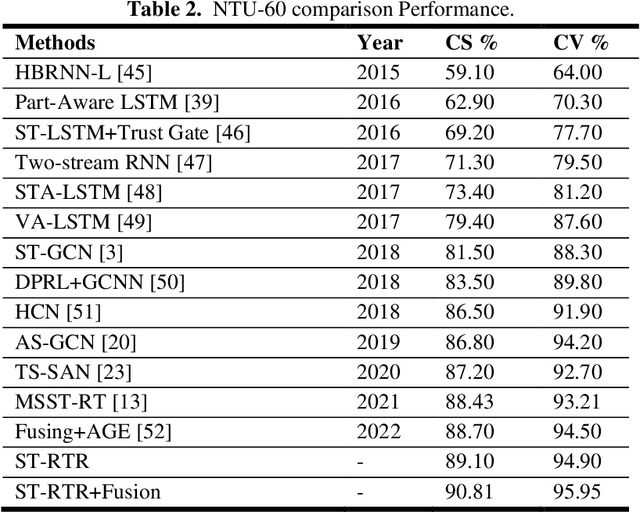

Abstract: Quantum Human Action Recognition (HAR) is an interesting research area in human-computer interaction, used to monitor the activities of elderly and disabled individuals affected by physical and mental health conditions. Skeleton-based HAR has recently received much attention because skeleton data is robust to changes in lighting, body size, camera views, and complex backgrounds. A key characteristic of ST-GCN is that it automatically learns spatial and temporal patterns from skeleton sequences. However, it captures only short-range correlations because of its limited receptive field, whereas understanding human action requires long-range dependencies. To address this issue, we developed a quantum spatial-temporal relative transformer (ST-RTR) model. The ST-RTR includes joint and relay nodes, which allow efficient communication and data transmission within the network. These nodes help to break the inherent spatial and temporal skeleton topologies, which enables the model to better capture long-range human action. Furthermore, we combine quantum ST-RTR with a fusion model for further performance improvements. To assess the performance of the quantum ST-RTR method, we conducted experiments on three skeleton-based HAR benchmarks: NTU RGB+D 60, NTU RGB+D 120, and UAV-Human. It improved CS and CV accuracy by 2.11% and 1.45% on NTU RGB+D 60 and by 1.25% and 1.05% on NTU RGB+D 120. On the UAV-Human dataset, accuracy improved by 2.54%. The experimental results show that the proposed ST-RTR model significantly improves action recognition compared with the standard ST-GCN method.
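The intuition behind relay nodes can be illustrated with a small graph exercise. This is only a sketch under stated assumptions, not the ST-RTR implementation: it treats the skeleton as a 7-joint chain, models the relay as one extra node linked to every joint, and counts hops with breadth-first search. The names `build_chain`, `add_relay_node`, and `hop_distance` are hypothetical.

```python
from collections import deque

def build_chain(num_joints):
    """Toy skeleton (assumption): joints 0..n-1 connected in a chain."""
    adjacency = {i: set() for i in range(num_joints)}
    for i in range(num_joints - 1):
        adjacency[i].add(i + 1)
        adjacency[i + 1].add(i)
    return adjacency

def add_relay_node(adjacency, relay="relay"):
    """Return a copy of the graph with one extra node linked to every joint."""
    augmented = {node: set(neigh) for node, neigh in adjacency.items()}
    augmented[relay] = set(adjacency)      # relay sees all original joints
    for node in adjacency:
        augmented[node].add(relay)         # every joint sees the relay
    return augmented

def hop_distance(adjacency, source, target):
    """Shortest number of edges between two nodes, via breadth-first search."""
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for nxt in adjacency[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # target unreachable

skeleton = build_chain(7)
with_relay = add_relay_node(skeleton)

print(hop_distance(skeleton, 0, 6))    # 6 hops along the chain
print(hop_distance(with_relay, 0, 6))  # 2 hops: joint 0 -> relay -> joint 6
```

On the plain chain, information from one end needs six neighbor-to-neighbor steps to reach the other; with the relay it needs two, which is one way to read the abstract's claim that relay nodes break the inherent skeleton topology.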
