Fabrício Breve

Model-agnostic interpretation by visualization of feature perturbations

Jan 26, 2021

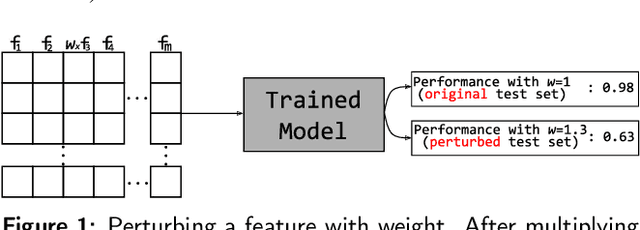

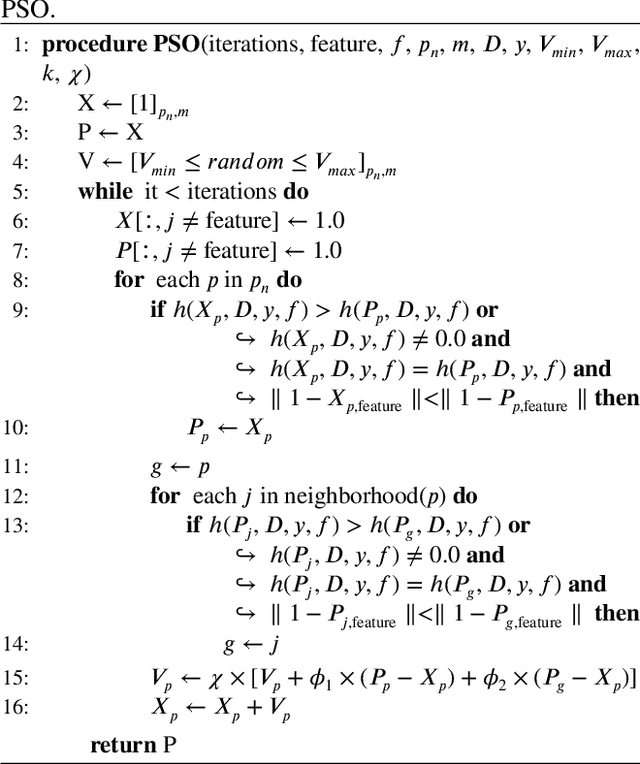

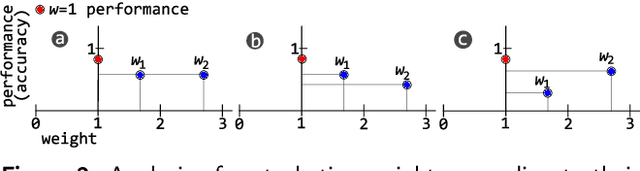

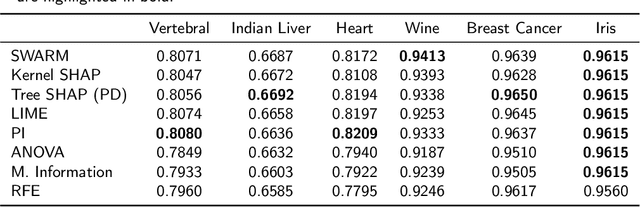

Abstract: Interpretation of machine learning models has become one of the most important research topics because of the need to maintain control over these algorithms and to avoid bias in them. Since many machine learning algorithms are published every day, there is a need for novel model-agnostic interpretation approaches that can be applied to a wide variety of algorithms. One particularly useful way to interpret a machine learning model is to feed it different input data and observe how the prediction changes. Using such an approach, practitioners can relate patterns in the data to a model's decisions. In this work, we propose a model-agnostic interpretation approach that uses visualization of feature perturbations induced by the particle swarm optimization algorithm. We validate our approach both qualitatively and quantitatively on publicly available datasets, showing that it can enhance the interpretation of different classifiers while yielding very stable results when compared with state-of-the-art algorithms.
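The general idea of probing a model by perturbing its inputs can be illustrated with a minimal sketch. This is not the method proposed in the paper: it swaps in plain Gaussian noise where the paper uses particle swarm optimization, and `toy_model`, `perturbation_sensitivity`, and all parameter values are hypothetical names and choices made only for this illustration.

```python
import numpy as np

def toy_model(X):
    """Hypothetical stand-in classifier (assumption, not from the paper).

    Returns a logistic score that depends only on feature 0;
    feature 1 has weight zero, so the model ignores it by design.
    """
    w = np.array([2.0, 0.0])
    return 1.0 / (1.0 + np.exp(-(X @ w)))

def perturbation_sensitivity(predict, x, scale=0.5, n_samples=200, seed=0):
    """Mean absolute change in the prediction when one feature is perturbed.

    Each feature is perturbed on its own with Gaussian noise while the
    others stay fixed. Only `predict` is called, so any model exposing a
    batch prediction function can be plugged in (model-agnostic).
    """
    rng = np.random.default_rng(seed)
    base = predict(x[None, :])[0]          # prediction for the unperturbed input
    sens = np.zeros(x.shape[0])
    for j in range(x.shape[0]):
        X = np.tile(x, (n_samples, 1))     # n_samples copies of the input
        X[:, j] += rng.normal(0.0, scale, size=n_samples)
        sens[j] = np.mean(np.abs(predict(X) - base))
    return sens

x = np.array([0.1, -0.3])
sensitivity = perturbation_sensitivity(toy_model, x)
print(sensitivity)  # one score per feature; larger means more influence
```

In this toy setting the score for feature 1 stays at zero because the stand-in model never uses it, while feature 0 receives a clearly positive score. Plotting such scores per feature is one simple way to visualize which inputs drive a prediction.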
