F. Paredes-Vallés

Back to Event Basics: Self-Supervised Learning of Image Reconstruction for Event Cameras via Photometric Constancy

Sep 17, 2020

Abstract: Event cameras are novel vision sensors that sample, in an asynchronous fashion, brightness increments with low latency and high temporal resolution. The resulting streams of events are of high value by themselves, especially for high speed motion estimation. However, a growing body of work has also focused on the reconstruction of intensity frames from the events, as this allows bridging the gap with the existing literature on appearance- and frame-based computer vision. Recent work has mostly approached this intensity reconstruction problem using neural networks trained with synthetic, ground-truth data. Nevertheless, since accurate ground truth is only available in simulation, these methods are subject to the reality gap and, to ensure generalizability, their training datasets need to be carefully designed. In this work, we approach, for the first time, the reconstruction problem from a self-supervised learning perspective. Our framework combines estimated optical flow and the event-based photometric constancy to train neural networks without the need for any ground-truth or synthetic data. Results across multiple datasets show that the performance of the proposed approach is in line with the state-of-the-art.

How Do Neural Networks Estimate Optical Flow? A Neuropsychology-Inspired Study

Apr 20, 2020

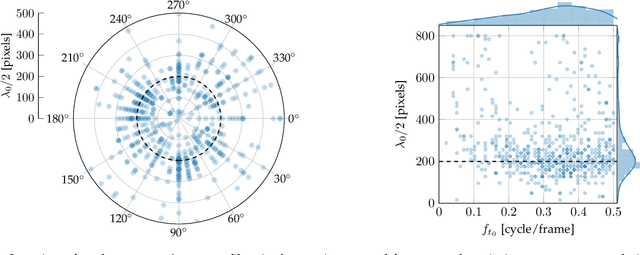

Abstract: End-to-end trained convolutional neural networks have led to a breakthrough in optical flow estimation. The most recent advances focus on improving the optical flow estimation by improving the architecture and setting a new benchmark on the publicly available MPI-Sintel dataset. Instead, in this article, we investigate how deep neural networks estimate optical flow. A better understanding of how these networks function is important for (i) assessing their generalization capabilities to unseen inputs, and (ii) suggesting changes to improve their performance. For our investigation, we focus on FlowNetS, as it is the prototype of an encoder-decoder neural network for optical flow estimation. Furthermore, we use a filter identification method that has played a major role in uncovering the motion filters present in animal brains in neuropsychological research. The method shows that the filters in the deepest layer of FlowNetS are sensitive to a variety of motion patterns. Not only do we find translation filters, as demonstrated in animal brains, but thanks to the easier measurements in artificial neural networks, we even unveil dilation, rotation, and occlusion filters. Furthermore, we find similarities in the refinement part of the network and the perceptual filling-in process which occurs in the mammal primary visual cortex.

Evolved Neuromorphic Control for High Speed Divergence-based Landings of MAVs

Mar 06, 2020

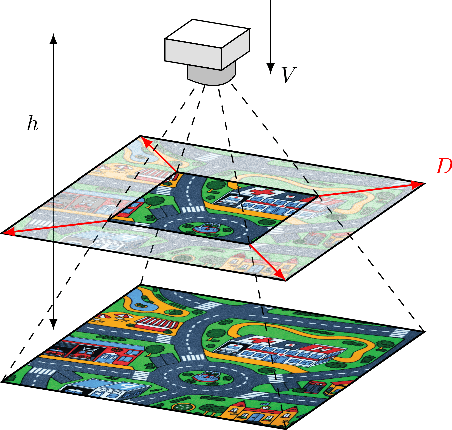

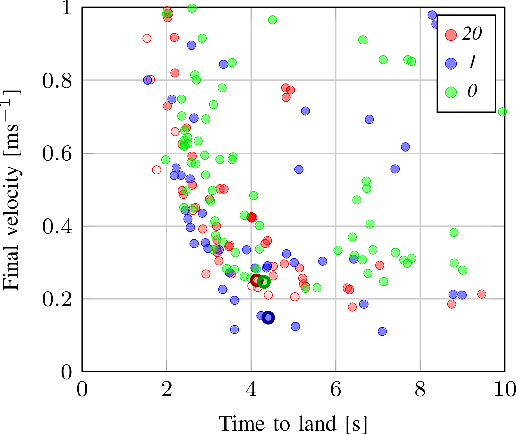

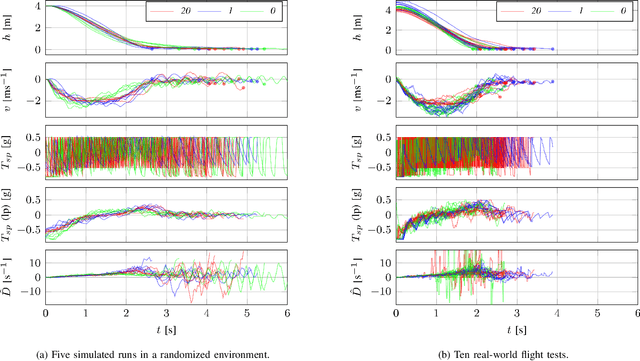

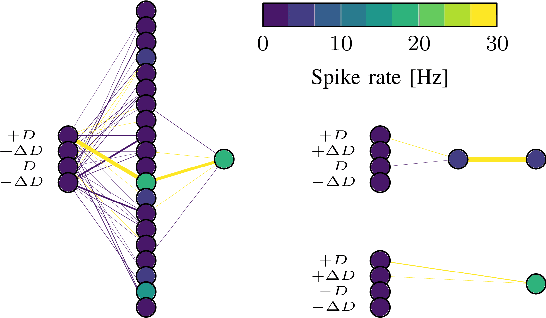

Abstract: Flying insects are capable of vision-based navigation in cluttered environments, reliably avoiding obstacles through fast and agile maneuvers, while being very efficient in the processing of visual stimuli. Meanwhile, autonomous micro air vehicles still lag far behind their biological counterparts, displaying inferior performance with a much higher energy consumption. In light of this, we want to mimic flying insects in terms of their processing capabilities, and consequently apply gained knowledge to a maneuver of relevance. This letter does so through evolving spiking neural networks for controlling landings of micro air vehicles using the divergence of the optical flow field of a downward-looking camera. We demonstrate that the resulting neuromorphic controllers transfer robustly from a highly abstracted simulation to the real world, performing fast and safe landings while keeping network spike rate minimal. Furthermore, we provide insight into the resources required for successfully solving the problem of divergence-based landing, showing that high-resolution control can potentially be learned with only a single spiking neuron. To the best of our knowledge, this is the first work integrating spiking neural networks in the control loop of a real-world flying robot. Videos of the experiments can be found at http://bit.ly/neuro-controller.
