Evridiki Tsoureli-Nikita

COGNET-MD, an evaluation framework and dataset for Large Language Model benchmarks in the medical domain

May 17, 2024

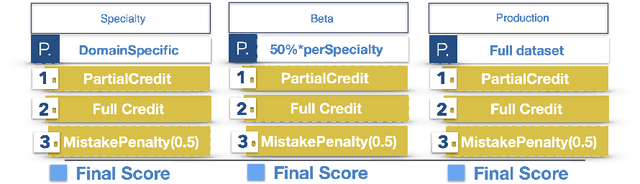

Abstract: Large Language Models (LLMs) are a rapidly evolving, state-of-the-art Artificial Intelligence (AI) technology that promises to aid medical diagnosis, either by assisting doctors or by simulating a doctor's workflow in more advanced and complex implementations. In this technical paper, we outline the Cognitive Network Evaluation Toolkit for Medical Domains (COGNET-MD), a novel benchmark for LLM evaluation in the medical domain. Specifically, we propose a scoring framework of increased difficulty to assess the ability of LLMs to interpret medical text. The proposed framework is accompanied by a database of Multiple Choice Quizzes (MCQs). To ensure alignment with current medical trends and to enhance safety, usefulness, and applicability, these MCQs have been constructed in collaboration with medical experts from various medical domains and are characterized by varying degrees of difficulty. The current (first) version of the database covers the medical domains of Psychiatry, Dentistry, Pulmonology, Dermatology and Endocrinology, and it will be continuously extended to include additional medical domains.

Dermacen Analytica: A Novel Methodology Integrating Multi-Modal Large Language Models with Machine Learning in tele-dermatology

Mar 21, 2024

Abstract: The rise of Artificial Intelligence creates great promise in the fields of medical discovery, diagnostics and patient management. However, the vast complexity of all medical domains requires a more complex approach that combines machine learning algorithms, classifiers, segmentation algorithms and, lately, large language models. In this paper, we describe, implement and assess an Artificial Intelligence-empowered system and methodology that assists the diagnosis of skin lesions and other skin conditions and aims to address the diagnostic process in dermatology holistically. The workflow integrates large language models, transformer-based vision models and sophisticated machine learning tools. This holistic approach achieves a nuanced interpretation of dermatological conditions that simulates and facilitates a dermatologist's workflow. We assess our proposed methodology through a thorough cross-model validation technique embedded in an evaluation pipeline that uses publicly available medical case studies of skin conditions and relevant images. To score system performance quantitatively, we employ advanced machine learning and natural language processing tools that focus on similarity comparison and natural language inference. Additionally, we incorporate a human expert evaluation process based on a structured checklist to further validate our results. We implemented the proposed methodology in a system that achieved approximate (weighted) scores of 0.87 for both contextual understanding and diagnostic accuracy, demonstrating the efficacy of our approach in enhancing dermatological analysis. The proposed methodology is expected to prove useful in the development of next-generation tele-dermatology applications, enhancing remote consultation capabilities and access to care, especially in underserved areas.
