Evan Piermont

Subjective Causality

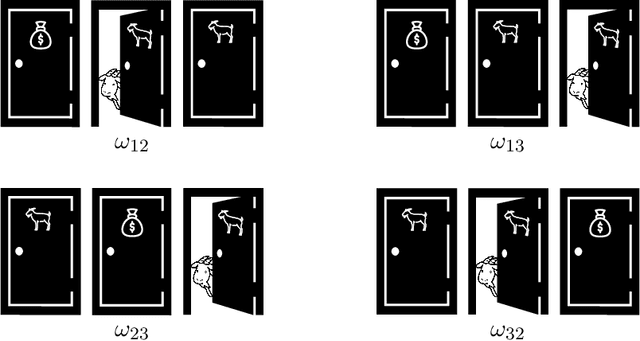

Jan 17, 2024

Abstract: We show that it is possible to understand and identify a decision maker's subjective causal judgements by observing her preferences over interventions. Following Pearl [2000], we represent causality using causal models (also called structural equation models), where the world is described by a collection of variables, related by equations. We show that if a preference relation over interventions satisfies certain axioms (related to standard axioms regarding counterfactuals), then we can define (i) a causal model, (ii) a probability capturing the decision-maker's uncertainty regarding the external factors in the world and (iii) a utility on outcomes such that each intervention is associated with an expected utility and such that intervention $A$ is preferred to $B$ iff the expected utility of $A$ is greater than that of $B$. In addition, we characterize when the causal model is unique. Thus, our results allow a modeler to test the hypothesis that a decision maker's preferences are consistent with some causal model and to identify causal judgements from observed behavior.
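To make the representation in this abstract concrete, here is a minimal sketch of ranking interventions by expected utility in a toy causal model. Everything in it is an illustrative assumption and not taken from the paper: the variables `x` and `y`, the structural equations, the probabilities in `prob_u`, and the `utility` function are all hypothetical.

```python
# Toy illustration only: the model, probabilities, and utility are assumptions.

# Subjective probability over the exogenous (external) factor u.
prob_u = {0: 0.7, 1: 0.3}


def solve(u, intervention):
    """Solve the structural equations given u.

    An intervention is a dict fixing some endogenous variables; a fixed
    variable ignores its own equation and keeps the assigned value.
    """
    x = intervention.get("x", u)      # equation: x := u
    y = intervention.get("y", 1 - x)  # equation: y := 1 - x
    return {"x": x, "y": y}


def utility(outcome):
    """Hypothetical utility over outcomes."""
    return 2.0 * outcome["y"] + 1.0 * outcome["x"]


def expected_utility(intervention):
    """Average the utility of the induced outcome over the external factor."""
    return sum(p * utility(solve(u, intervention)) for u, p in prob_u.items())


def weakly_prefers(a, b):
    """Intervention a is weakly preferred to b iff its expected utility is at least as large."""
    return expected_utility(a) >= expected_utility(b)
```

In this sketch, setting `x` to 0 forces `y` to 1 in every state, so `weakly_prefers({"x": 0}, {"x": 1})` holds. Setting `y` directly leaves `x` governed by its own equation, which is what distinguishes an intervention from conditioning on an observation.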

Hypothetical Expected Utility

Jul 20, 2021

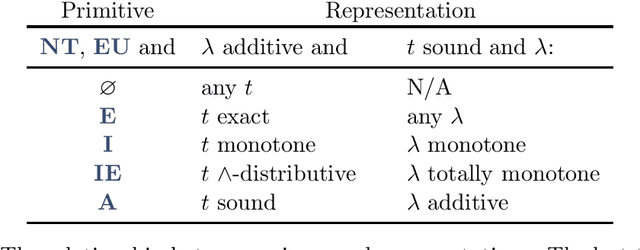

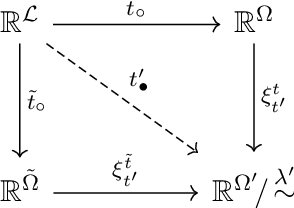

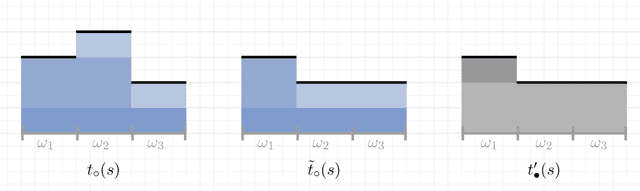

Abstract: This paper provides a model to analyze and identify a decision maker's (DM's) hypothetical reasoning. Using this model, I show that a DM's propensity to engage in hypothetical thinking is captured exactly by her ability to recognize implications (i.e., to identify that one hypothesis implies another) and that this latter relation is encoded by a DM's observable behavior. Thus, this characterization both provides a concrete definition of (flawed) hypothetical reasoning and, importantly, yields a methodology to identify these judgments from standard economic data.

Failures of Contingent Thinking

Jul 15, 2020

Abstract: In this paper, we provide a theoretical framework to analyze an agent who misinterprets or misperceives the true decision problem she faces. Within this framework, we show that a wide range of behavior observed in experimental settings manifests as failures to perceive implications, in other words, to properly account for the logical relationships between various payoff-relevant contingencies. We present behavioral characterizations corresponding to several benchmarks of logical sophistication and show how it is possible to identify which implications the agent fails to perceive. Thus, our framework delivers both a methodology for assessing an agent's level of contingent thinking and a strategy for identifying her beliefs in the absence of full rationality.

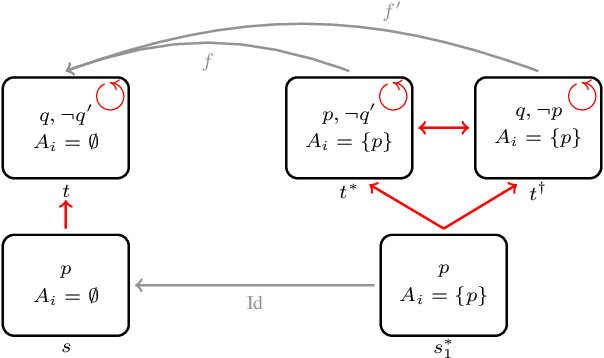

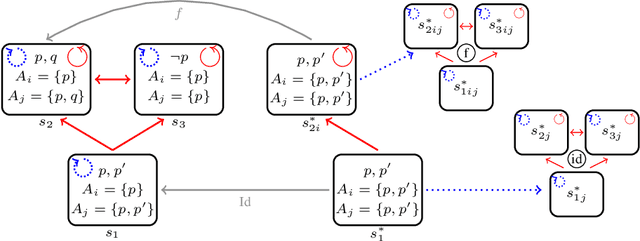

Dynamic Awareness

Jul 06, 2020

Abstract: We investigate how to model the beliefs of an agent who becomes more aware. We extend the framework of Halpern and Rego (2013) by adding probability, and define a notion of a model transition that describes constraints on how an agent who becomes aware of a new formula $\phi$ in state $s$ of a model $M$ transitions to state $s^*$ in a model $M^*$. We then discuss how such a model can be applied to information disclosure.

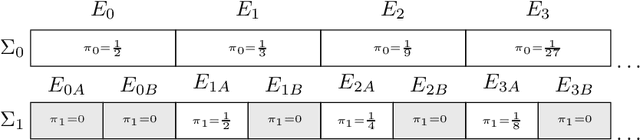

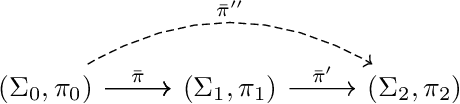

Unforeseen Evidence

Jul 17, 2019

Abstract: I propose a normative updating rule, extended Bayesianism, for the incorporation of probabilistic information arising from the process of becoming more aware. Extended Bayesianism generalizes standard Bayesian updating to allow the posterior to reside on a richer probability space than the prior. I then provide an observable criterion on prior and posterior beliefs under which they are consistent with extended Bayesianism.
