Eric Heitz

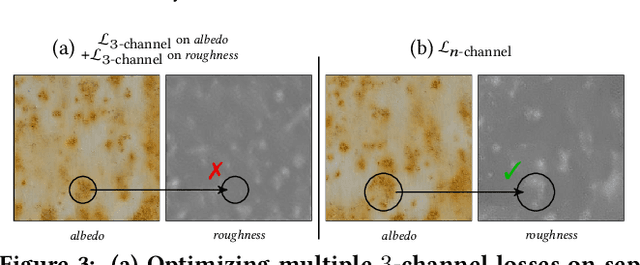

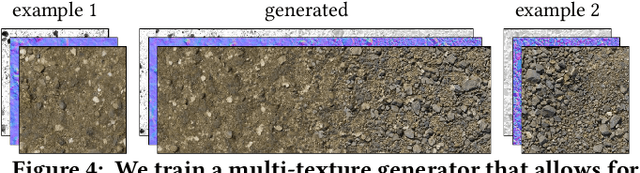

Passing Multi-Channel Material Textures to a 3-Channel Loss

May 27, 2021

Abstract: Our objective is to compute a textural loss that can be used to train texture generators with multiple material channels typically used for physically based rendering, such as albedo, normal, roughness, metalness, ambient occlusion, etc. Neural textural losses often build on top of the feature spaces of pretrained convolutional neural networks. Unfortunately, these pretrained models are only available for 3-channel RGB data and hence limit neural textural losses to this format. To overcome this limitation, we show that passing random triplets to a 3-channel loss provides a multi-channel loss that can be used to generate high-quality material textures.
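The random-triplet idea in this abstract can be illustrated with a minimal NumPy sketch. Everything named here is hypothetical: `placeholder_rgb_loss` is a simple statistics-based stand-in for a neural loss built on pretrained CNN features, and sampling the three channels without replacement and averaging over `num_triplets` draws are assumptions, since the abstract does not specify those details.

```python
import numpy as np

def placeholder_rgb_loss(a, b):
    """Stand-in for any 3-channel textural loss (assumption, not a neural loss).

    Compares per-channel means and the 3x3 channel covariances of two
    images of shape (3, H, W).
    """
    assert a.shape[0] == 3 and b.shape[0] == 3
    fa = a.reshape(3, -1)
    fb = b.reshape(3, -1)
    mean_term = np.sum((fa.mean(axis=1) - fb.mean(axis=1)) ** 2)
    cov_term = np.sum((np.cov(fa) - np.cov(fb)) ** 2)
    return float(mean_term + cov_term)

def random_triplet_loss(generated, target, rgb_loss, num_triplets=8, rng=None):
    """Average a 3-channel loss over randomly drawn channel triplets.

    generated, target: arrays of shape (C, H, W) with C >= 3, where the C
    channels stack the material maps (albedo, normal, roughness, ...).
    """
    rng = np.random.default_rng() if rng is None else rng
    num_channels = generated.shape[0]
    total = 0.0
    for _ in range(num_triplets):
        # Assumption: three distinct channels per draw.
        idx = rng.choice(num_channels, size=3, replace=False)
        # The same triplet is extracted from both textures.
        total += rgb_loss(generated[idx], target[idx])
    return total / num_triplets
```

In a training setup, the placeholder would be swapped for a differentiable 3-channel loss in an autodiff framework; the channel-sampling logic stays the same.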

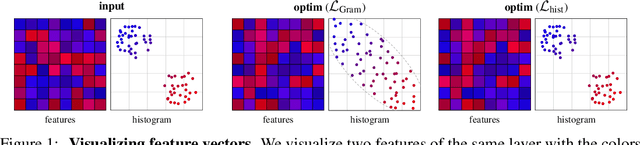

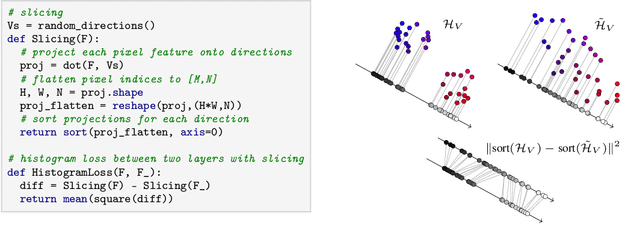

Pitfalls of the Gram Loss for Neural Texture Synthesis in Light of Deep Feature Histograms

Jun 16, 2020

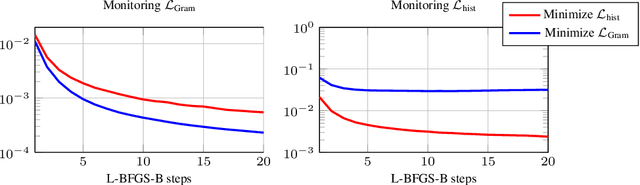

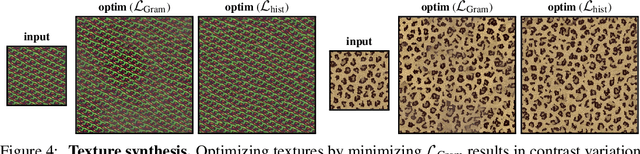

Abstract: Neural texture synthesis and style transfer are both powered by the Gram matrix as a means to measure deep feature statistics. Despite its ubiquity, this second-order feature descriptor has several shortcomings resulting in visual artifacts, ill-defined interpolation, or inability to capture spatial constraints. Many previous works acknowledge these shortcomings but do not really explain why they occur. Fixing them is thus usually approached by adding new losses, which require parameter tuning and make the problem even more ill-defined, or by designing complex and/or adversarial networks. In this paper, we propose a comprehensive study of these problems in the light of the multi-dimensional histograms of deep features. With the insights gained from our analysis, we show how to compute a well-defined and efficient textural loss based on histogram transformations. Our textural loss outperforms the Gram matrix in terms of quality, robustness, spatial control, and interpolation. It does not require additional learning or parameter tuning, and can be implemented in a few lines of code.
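To make the contrast between second-order statistics and full feature histograms concrete, here is a small NumPy sketch. It is an illustration under assumptions, not the paper's method: the abstract does not spell out its histogram transformation, so `sliced_histogram_distance` (sorting projections along random directions) is just one common way to compare multi-dimensional distributions, and both function names are hypothetical.

```python
import numpy as np

def gram_matrix(features):
    """Second-order statistics of features with shape (C, N)."""
    n = features.shape[1]
    return features @ features.T / n

def sliced_histogram_distance(fa, fb, num_directions=16, rng=None):
    """Compare two feature sets of shape (C, N) through 1D projections.

    Assumption: both sets hold the same number of samples N, so the sorted
    projections can be compared element by element.
    """
    rng = np.random.default_rng() if rng is None else rng
    c = fa.shape[0]
    directions = rng.normal(size=(num_directions, c))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    # Sorting each projection matches the two 1D histograms.
    pa = np.sort(directions @ fa, axis=1)
    pb = np.sort(directions @ fb, axis=1)
    return float(np.mean((pa - pb) ** 2))

# Two toy 2-channel feature sets with identical Gram matrices
# but different distributions (a square and the same square rotated).
feats_a = np.array([[1.0, -1.0, 1.0, -1.0],
                    [1.0, 1.0, -1.0, -1.0]])
feats_b = np.sqrt(2.0) * np.array([[1.0, -1.0, 0.0, 0.0],
                                   [0.0, 0.0, 1.0, -1.0]])
```

Here `gram_matrix(feats_a)` and `gram_matrix(feats_b)` are both the identity, so a Gram-based loss cannot tell the two sets apart, whereas the projection-based distance between them is nonzero for generic random directions.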
