Emily Hadley

Investigating Algorithm Review Boards for Organizational Responsible Artificial Intelligence Governance

Jan 23, 2024

Abstract: Organizations including companies, nonprofits, governments, and academic institutions are increasingly developing, deploying, and utilizing artificial intelligence (AI) tools. Responsible AI (RAI) governance approaches at organizations have emerged as important mechanisms to address potential AI risks and harms. In this work, we interviewed 17 technical contributors across organization types (Academic, Government, Industry, Nonprofit) and sectors (Finance, Health, Tech, Other) about their experiences with internal RAI governance. Our findings illuminated the variety of organizational definitions of RAI and accompanying internal governance approaches. We summarized the first detailed findings on algorithm review boards (ARBs) and similar review committees in practice, including their membership, scope, and measures of success. We confirmed known robust model governance in finance sectors and revealed extensive algorithm and AI governance with ARB-like review boards in health sectors. Our findings contradict the idea that Institutional Review Boards alone are sufficient for algorithm governance and posit that ARBs are among the more impactful internal RAI governance approaches. Our results suggest that integration with existing internal regulatory approaches and leadership buy-in are among the most important attributes for success and that financial tensions are the greatest challenge to effective organizational RAI. We make a variety of suggestions for how organizational partners can learn from these findings when building their own internal RAI frameworks. We outline future directions for developing and measuring effectiveness of ARBs and other internal RAI governance approaches.

Prioritizing Policies for Furthering Responsible Artificial Intelligence in the United States

Nov 30, 2022

Abstract: Several policy options exist, or have been proposed, to further responsible artificial intelligence (AI) development and deployment. Institutions, including U.S. government agencies, states, professional societies, and private and public sector businesses, are well positioned to implement these policies. However, given limited resources, not all policies can or should be equally prioritized. We define and review nine suggested policies for furthering responsible AI, rank each policy on potential use and impact, and recommend prioritization relative to each institution type. We find that pre-deployment audits and assessments and post-deployment accountability are likely to have the highest impact but also the highest barriers to adoption. We recommend that U.S. government agencies and companies highly prioritize development of pre-deployment audits and assessments, while the U.S. national legislature should highly prioritize post-deployment accountability. We suggest that U.S. government agencies and professional societies should highly prioritize policies that support responsible AI research and that states should highly prioritize support of responsible AI education. We propose that companies can highly prioritize involving community stakeholders in development efforts and supporting diversity in AI development. We advise lower levels of prioritization across institutions for AI ethics statements and databases of AI technologies or incidents. We recognize that no one policy will lead to responsible AI and instead advocate for strategic policy implementation across institutions.

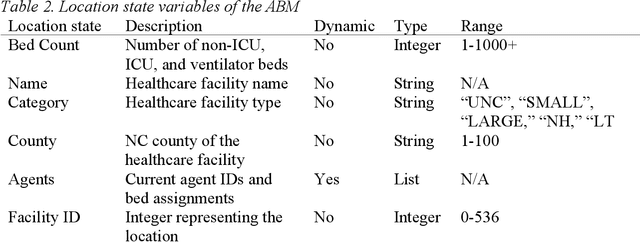

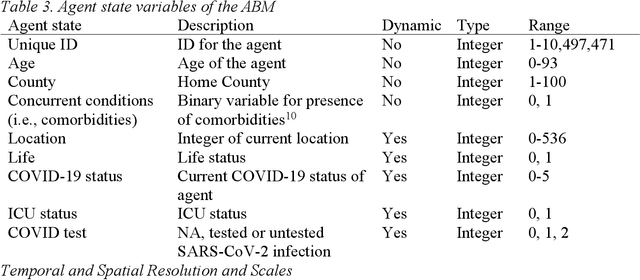

North Carolina COVID-19 Agent-Based Model Framework for Hospitalization Forecasting Overview, Design Concepts, and Details Protocol

Jun 08, 2021

Abstract: This Overview, Design Concepts, and Details Protocol (ODD) provides a detailed description of an agent-based model (ABM) that was developed to simulate hospitalizations during the COVID-19 pandemic. Using the descriptions of submodels, provided parameters, and the links to data sources, modelers will be able to replicate the creation and results of this model.

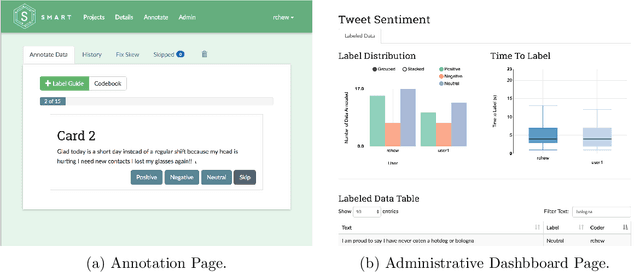

SMART: An Open Source Data Labeling Platform for Supervised Learning

Dec 11, 2018

Abstract: SMART is an open source web application designed to help data scientists and research teams efficiently build labeled training data sets for supervised machine learning tasks. SMART provides users with an intuitive interface for creating labeled data sets, supports active learning to help reduce the required amount of labeled data, and incorporates inter-rater reliability statistics to provide insight into label quality. SMART is designed to be platform agnostic and easily deployable to meet the needs of as many different research teams as possible. The project website contains links to the code repository and extensive user documentation.
