Elvira Fleig

Edge-Aligned Initialization of Kernels for Steered Mixture-of-Experts

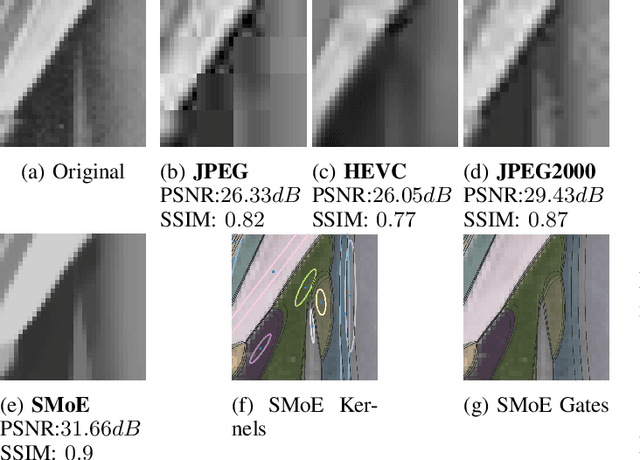

Feb 02, 2026

Abstract:Steered Mixture-of-Experts (SMoE) has recently emerged as a powerful framework for spatial-domain image modeling, enabling high-fidelity image representation using a remarkably small number of parameters. Its ability to steer kernel-based experts toward structural image features has led to successful applications in image compression, denoising, super-resolution, and light field processing. However, practical adoption is hindered by the reliance on gradient-based optimization to estimate model parameters on a per-image basis, a process that is computationally intensive and difficult to scale. Initialization strategies for SMoE are an essential component that directly affects convergence and reconstruction quality. In this paper, we propose a novel, edge-based initialization scheme that achieves good reconstruction quality while significantly reducing the need for stochastic optimization. Through a method that leverages Canny edge detection to extract a sparse set of image contours, kernel positions and orientations are deterministically inferred. A separate approach enables the direct estimation of initial expert coefficients. This initialization reduces both memory consumption and computational cost.

Enclosing Prototypical Variational Autoencoder for Explainable Out-of-Distribution Detection

Jun 17, 2025

Abstract:Understanding the decision-making and trusting the reliability of Deep Machine Learning Models is crucial for adopting such methods in safety-relevant applications. We extend self-explainable Prototypical Variational models with autoencoder-based out-of-distribution (OOD) detection: a Variational Autoencoder is applied to learn a meaningful latent space which can be used for distance-based classification, likelihood estimation for OOD detection, and reconstruction. The In-Distribution (ID) region is defined by a Gaussian mixture distribution with learned prototypes representing the center of each mode. Furthermore, a novel restriction loss is introduced that promotes a compact ID region in the latent space without collapsing it into single points. The reconstructive capabilities of the Autoencoder ensure the explainability of the prototypes and the ID region of the classifier, further aiding the discrimination of OOD samples. Extensive evaluations on common OOD detection benchmarks as well as a large-scale dataset from a real-world railway application demonstrate the usefulness of the approach, outperforming previous methods.

Steered Mixture-of-Experts Autoencoder Design for Real-Time Image Modelling and Denoising

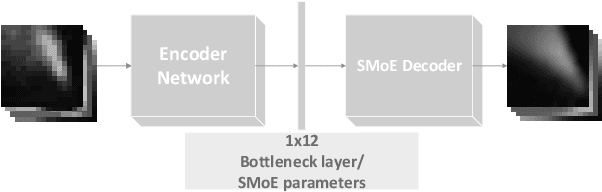

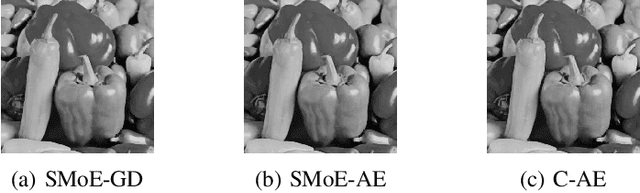

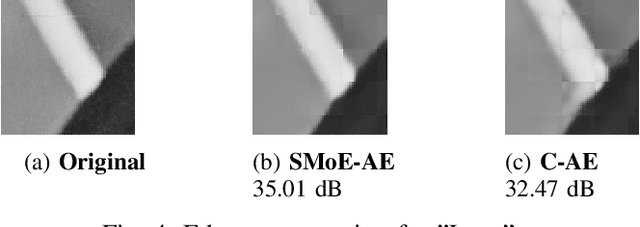

May 05, 2023

Abstract:Research in the past years introduced Steered Mixture-of-Experts (SMoE) as a framework to form sparse, edge-aware models for 2D and higher-dimensional pixel data, applicable to compression, denoising, and beyond, and capable of competing with state-of-the-art compression methods. To circumvent the computationally demanding, iterative optimization method used in prior works, an autoencoder design is introduced that reduces the run-time drastically while simultaneously improving reconstruction quality for block-based SMoE approaches. Coupling a deep encoder network with a shallow, parameter-free SMoE decoder enforces an efficient and explainable latent representation. Our initial work on the autoencoder design presented a simple model, with limited applicability to compression and beyond. In this paper, we build on the foundation of the first autoencoder design and improve the reconstruction quality by expanding it to models of higher complexity and different block sizes. Furthermore, we improve the noise robustness of the autoencoder for SMoE denoising applications. Our results reveal that the newly adapted autoencoders allow ultra-fast estimation of parameters for complex SMoE models with excellent reconstruction quality, both for noise-free input and under severe noise. This enables the SMoE image model framework for a wide range of image processing applications, including compression, noise reduction, and super-resolution.

Edge-Aware Autoencoder Design for Real-Time Mixture-of-Experts Image Compression

Jul 25, 2022

Abstract:Steered-Mixtures-of-Experts (SMoE) models provide sparse, edge-aware representations, applicable to many use cases in image processing. These include denoising, super-resolution, and compression of 2D and higher-dimensional pixel data. Recent works on image compression indicate that compression of images based on SMoE models can provide performance competitive with the state of the art. Unfortunately, the iterative model-building process at the encoder comes with excessive computational demands. In this paper we introduce a novel edge-aware Autoencoder (AE) strategy designed to avoid the time-consuming iterative optimization of SMoE models. This is done by directly mapping pixel blocks to model parameters for compression, similar in spirit to recent works on "unfolding" of algorithms, while maintaining full compatibility with the established SMoE framework. With our plug-in AE encoder, we achieve a quantum leap in performance, with encoder run-time savings by a factor of 500 to 1000 and even improved image reconstruction quality. For image compression, the plug-in AE encoder has real-time properties and improves RD-performance compared to our previous works.
