Elmira Mousa Rezabeyk

Saliency Assisted Quantization for Neural Networks

Nov 07, 2024

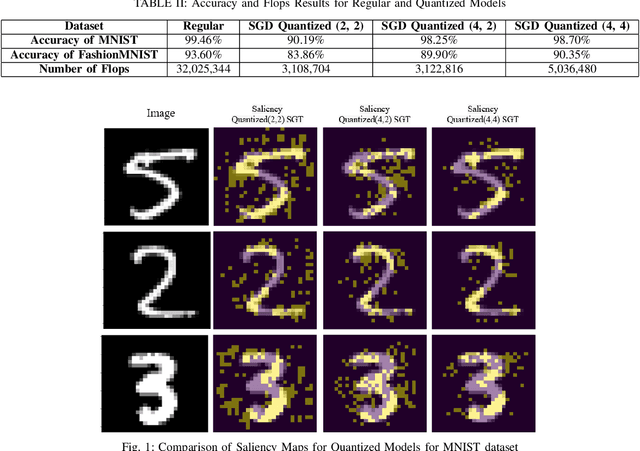

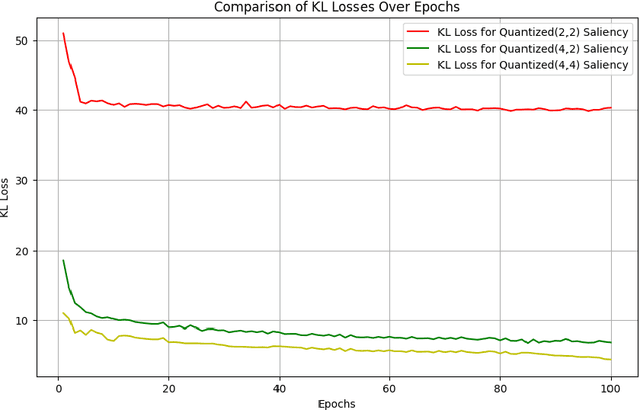

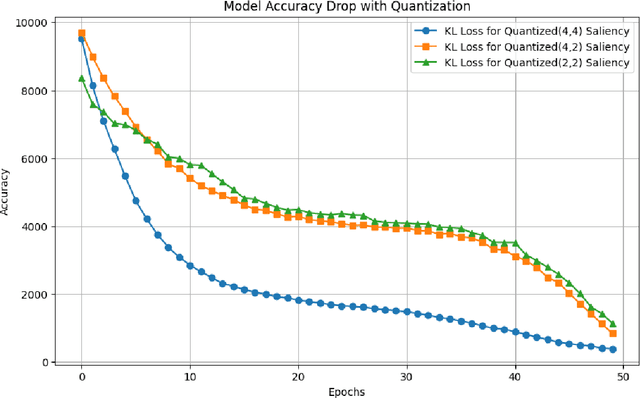

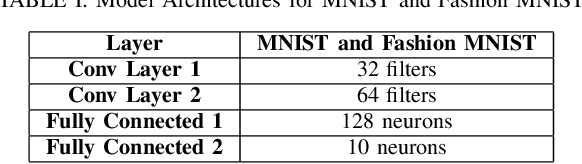

Abstract: Deep learning methods have established a significant place in image classification. While prior research has focused on improving final accuracy, the opaque decision-making process of these models remains a concern for experts. Deploying these methods can also be problematic in resource-limited environments. This paper tackles the black-box nature of these models by providing real-time explanations during the training phase, compelling the model to concentrate on the most distinctive and crucial aspects of the input. Furthermore, we employ established quantization techniques to address resource constraints. To assess the effectiveness of our approach, we explore how quantization influences the interpretability and accuracy of Convolutional Neural Networks through a comparative analysis of saliency maps from standard and quantized models. Quantization is implemented during the training phase using the Parameterized Clipping Activation (PACT) method, with a focus on the MNIST and FashionMNIST benchmark datasets. We evaluate three bit-width configurations (2-bit, 4-bit, and mixed 4/2-bit) to explore the trade-off between efficiency and interpretability, with each configuration designed to highlight varying impacts on saliency map clarity and model accuracy. The results indicate that while quantization is crucial for implementing models on resource-limited devices, it necessitates a trade-off between accuracy and interpretability. Lower bit-widths result in more pronounced reductions in both metrics, highlighting the need for careful quantization parameter selection in applications where model transparency is paramount. The study underscores the importance of balancing efficiency and interpretability in the deployment of neural networks.
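To make the quantization step concrete, the following is a minimal sketch of the forward pass of a PACT-style activation quantizer: the activation is clipped to the range [0, alpha] and then rounded to one of 2^k uniformly spaced levels. The function name `pact_quantize` and the sample values are illustrative assumptions, not taken from the paper. In PACT training the clipping level alpha is a learned parameter and gradients are typically passed through the rounding step with a straight-through estimator; neither is modeled here.

```python
def pact_quantize(x, alpha, bits):
    """Forward pass of a PACT-style activation quantizer (illustrative sketch).

    x     -- a single activation value
    alpha -- clipping level (learned during training in PACT; fixed here)
    bits  -- bit-width k of the quantized activation
    """
    if alpha <= 0 or bits < 1:
        raise ValueError("alpha must be positive and bits must be >= 1")
    levels = 2 ** bits - 1                 # number of quantization steps
    clipped = min(max(x, 0.0), alpha)      # clip to [0, alpha]
    scale = levels / alpha                 # steps per unit of activation
    return round(clipped * scale) / scale  # snap to the nearest level


# Hypothetical activations, quantized at the two bit-widths named in the abstract.
activations = [-1.0, 0.4, 2.9, 5.2, 7.0]
two_bit = [pact_quantize(a, alpha=6.0, bits=2) for a in activations]
four_bit = [pact_quantize(a, alpha=6.0, bits=4) for a in activations]
```

With 2 bits only four output levels are available, so distinct activations collapse onto the same value far more often than with 4 bits. This coarsening is one plausible reason lower bit-widths would affect both accuracy and saliency map clarity.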
