Elliot H. Young

Clustered random forests with correlated data for optimal estimation and inference under potential covariate shift

Mar 16, 2025

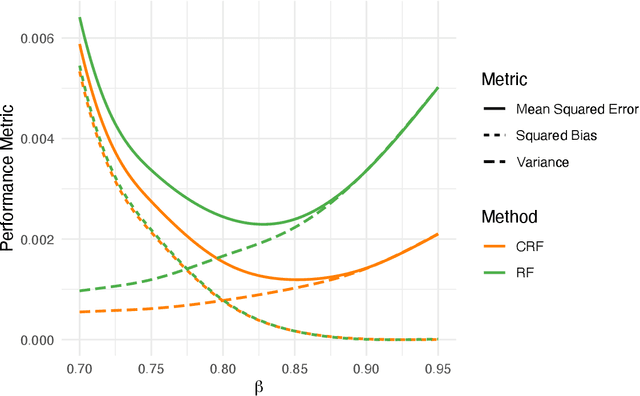

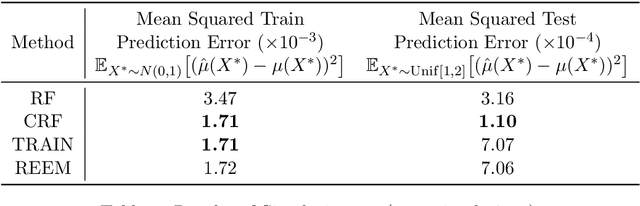

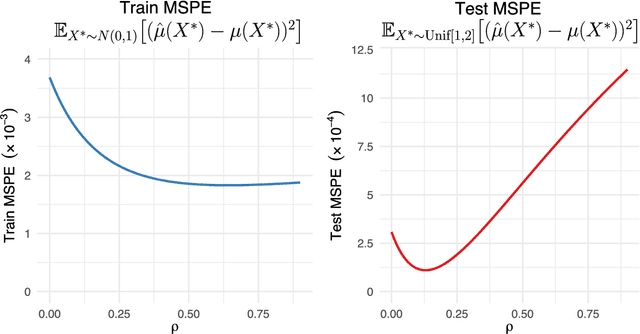

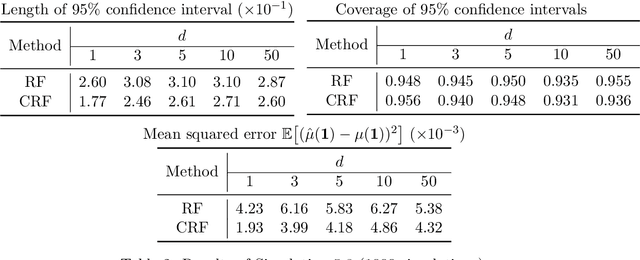

Abstract: We develop Clustered Random Forests, a random forests algorithm for clustered data, arising from independent groups that exhibit within-cluster dependence. The leaf-wise predictions for each decision tree making up a clustered random forest take the form of a weighted least squares estimator, which leverages correlations between observations for improved prediction accuracy. Clustered random forests are shown, for certain tree splitting criteria, to be minimax rate optimal for pointwise conditional mean estimation, while being computationally competitive with standard random forests. Further, we observe that the optimality of a clustered random forest, with regard to how the (population-level) optimal weights are chosen within this framework, i.e. those that minimise mean squared prediction error, varies under covariate distribution shift. In light of this, we advocate that weight estimation be determined by a user-chosen covariate distribution with respect to which optimal prediction or inference is desired. This highlights a key difference in behaviour between correlated and independent data with regard to nonparametric conditional mean estimation under covariate shift. We demonstrate our theoretical findings numerically in a number of simulated and real-world settings.
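To make the idea of a leaf-wise weighted least squares prediction concrete, the following is a minimal sketch and not the paper's implementation. It assumes an intercept-only fit within a single leaf and an equicorrelated working correlation with a hypothetical parameter `rho`; the function names `equicorrelated_weight` and `leaf_wls_mean` are illustrative and do not come from the paper. The abstract does not specify the weight structure, so this choice is an assumption.

```python
import numpy as np


def equicorrelated_weight(n, rho):
    """Inverse of an n x n working correlation matrix with off-diagonal rho.

    Assumed weight structure for illustration; requires -1/(n-1) < rho < 1.
    """
    corr = (1.0 - rho) * np.eye(n) + rho * np.ones((n, n))
    return np.linalg.inv(corr)


def leaf_wls_mean(y, cluster_ids, rho):
    """Intercept-only weighted least squares estimate for one leaf.

    y           : responses of the observations falling in the leaf
    cluster_ids : cluster label of each observation
    rho         : working within-cluster correlation (hypothetical parameter)
    """
    y = np.asarray(y, dtype=float)
    cluster_ids = np.asarray(cluster_ids)
    num, den = 0.0, 0.0
    # Clusters are independent, so the estimator sums per-cluster contributions.
    for c in np.unique(cluster_ids):
        y_c = y[cluster_ids == c]
        ones = np.ones(len(y_c))
        w = equicorrelated_weight(len(y_c), rho)
        num += ones @ w @ y_c
        den += ones @ w @ ones
    return num / den


# Three observations from cluster 0 and one from cluster 1, all in the same leaf.
y = [1.0, 2.0, 3.0, 10.0]
clusters = [0, 0, 0, 1]
print(leaf_wls_mean(y, clusters, rho=0.0))  # rho = 0 recovers the ordinary leaf average
print(leaf_wls_mean(y, clusters, rho=0.5))  # positive rho downweights the larger cluster
```

With `rho = 0` the estimate reduces to the ordinary leaf average used by a standard regression tree. A positive `rho` gives each observation in a large cluster less weight, since correlated observations carry less independent information.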
