Elizabeth Bonsignore

Examining the Values Reflected by Children during AI Problem Formulation

Sep 27, 2023

Abstract: Understanding how children design, and what they value in, AI interfaces that allow them to explicitly train their own models, such as teachable machines, could help increase the impact of such activities and guide the design of future technologies. In a co-design session using a modified storyboard, a team of 5 children (aged 7-13 years) and adult co-designers engaged in AI problem formulation activities in which they imagined their own teachable machines. Our findings, which draw on an established psychological value framework (the Rokeach Value Survey), illuminate how children conceptualize and embed their values in AI systems that they themselves devise to support their everyday activities. Specifically, we find that children's proposed ideas require advanced system intelligence, e.g., emotion detection and an understanding of a user's social relationships. In these ideas, the underlying models could be trained with multiple modalities, and any errors would be fixed by adding more data or by anticipating negative examples. Children's ideas showed that they cared about family and expected machines to understand their social context before making decisions.

Exploring Machine Teaching with Children

Sep 27, 2021

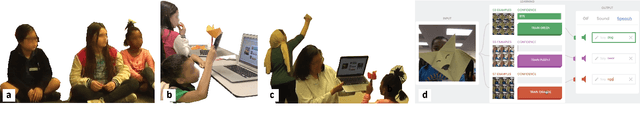

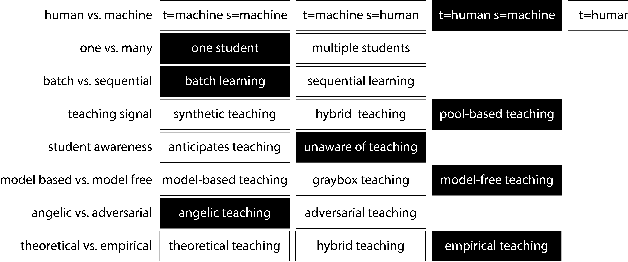

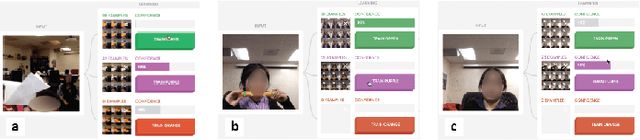

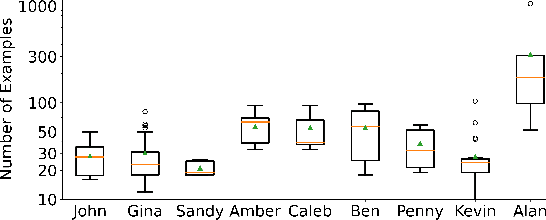

Abstract: Iteratively building and testing machine learning models can help children develop creativity, flexibility, and comfort with machine learning and artificial intelligence. We explore how children use machine teaching interfaces with a team of 14 children (aged 7-13 years) and adult co-designers. Children trained image classifiers and tested each other's models for robustness. Our study illuminates how children reason about ML concepts and offers these insights for designing machine teaching experiences for children: (i) ML metrics (e.g., confidence scores) should be visible for experimentation; (ii) ML activities should enable children to exchange models to promote reflection and pattern recognition; and (iii) the interface should allow quick data inspection (e.g., images vs. gestures).

* 11 pages, 8 images
