Elif Sertel

HRPlanes: High Resolution Airplane Dataset for Deep Learning

Apr 22, 2022

Abstract: Airplane detection from satellite imagery is a challenging task due to the complex backgrounds in the images and differences in data acquisition conditions caused by sensor geometry and atmospheric effects. Deep learning methods provide reliable and accurate solutions for automatic detection of airplanes; however, a huge amount of training data is required to obtain promising results. In this study, we create a novel airplane detection dataset called High Resolution Planes (HRPlanes) by using images from Google Earth (GE) and labeling the bounding box of each plane in the images. HRPlanes includes GE images of several different airports across the world, obtained from different satellites, to represent a variety of landscape, seasonal, and satellite geometry conditions. We evaluated our dataset with two widely used object detection methods, namely YOLOv4 and Faster R-CNN. Our preliminary results show that the proposed dataset can be a valuable data source and benchmark dataset for future applications. Moreover, the proposed architectures and results of this study could be used for transfer learning with different datasets and models for airplane detection.

A Multi-Task Deep Learning Framework for Building Footprint Segmentation

Apr 19, 2021

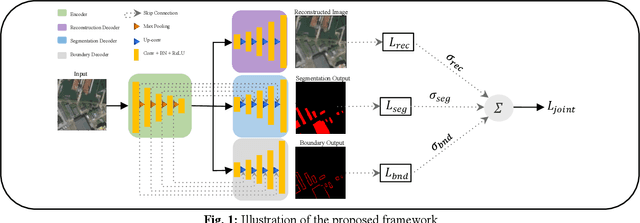

Abstract: Building footprint segmentation has been well studied in remote sensing (RS) because it provides valuable information for many applications. However, difficulties arising from the nature of RS images, such as variations in spatial arrangement and inconsistent construction patterns, require further study, since they often cause poorly classified segmentation maps. We address this need by designing a joint optimization scheme for building footprint delineation and introducing two auxiliary tasks, image reconstruction and building footprint boundary segmentation, with the intent of revealing the common underlying structure and thereby improving the classification accuracy of a single-task model with the help of the auxiliary tasks. In particular, we propose a unified, fully convolutional framework based on deep multi-task learning (MTL) that operates end to end, using a joint loss function with learnable loss weights that account for the homoscedastic uncertainty of each task loss. Experimental results on the SpaceNet6 dataset demonstrate the potential of the proposed MTL framework, as it improves the classification accuracy considerably compared to single-task models and models with fewer combined tasks.
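The joint loss with learnable weights can be illustrated with a small sketch. It assumes one common simplified form of homoscedastic uncertainty weighting, in which each task loss L_i is scaled by exp(-s_i) and regularized by s_i, where s_i is a learnable log-variance. The abstract does not give the exact formula, so the function names and this particular form are illustrative assumptions rather than the authors' implementation.

```python
import math


def uncertainty_weighted_loss(task_losses, log_vars):
    """Combine per-task losses as sum_i exp(-s_i) * L_i + s_i.

    Assumed form (not taken from the abstract): s_i is a learnable
    log-variance per task. A large s_i down-weights a noisy task,
    while the additive + s_i term keeps s_i from growing without bound.
    """
    if len(task_losses) != len(log_vars):
        raise ValueError("need exactly one log-variance per task loss")
    return sum(math.exp(-s) * loss + s for loss, s in zip(task_losses, log_vars))


def log_var_gradients(task_losses, log_vars):
    """Gradient of the combined loss with respect to each s_i.

    d/ds (exp(-s) * L + s) = -exp(-s) * L + 1, which is zero at s = log(L).
    """
    return [-math.exp(-s) * loss + 1.0 for loss, s in zip(task_losses, log_vars)]


# Hypothetical loss values for segmentation, boundary, and reconstruction tasks.
losses = [1.0, 2.0, 3.0]
combined = uncertainty_weighted_loss(losses, [0.0, 0.0, 0.0])
```

With all log-variances at zero the combined loss reduces to the plain sum of the task losses; during training the log-variances would be updated by gradient descent together with the network weights.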

Rethinking CNN-Based Pansharpening: Guided Colorization of Panchromatic Images via GANs

Jun 30, 2020

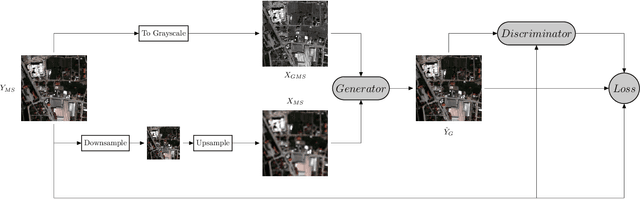

Abstract: Convolutional neural network (CNN)-based approaches have shown promising results in pansharpening of satellite images in recent years. However, they still exhibit limitations in producing high-quality pansharpening outputs. To that end, we propose a new self-supervised learning framework in which we treat pansharpening as a colorization problem. This brings an entirely novel perspective and solution to the problem compared to existing methods, which base their solution solely on producing a super-resolution version of the multispectral image. Whereas CNN-based methods provide a reduced-resolution panchromatic image as input to their model along with reduced-resolution multispectral images, and hence learn to increase their resolution together, we instead provide the grayscale-transformed multispectral image as input and train our model to learn the colorization of the grayscale input. We further address the fixed downscale ratio assumption during training, which does not generalize well to the full-resolution scenario, by introducing noise injection into the training through randomly varying downsampling ratios. These two critical changes, along with the addition of adversarial training in the proposed PanColorization Generative Adversarial Networks (PanColorGAN) framework, help overcome the spatial detail loss and blur problems that are observed in CNN-based pansharpening. The proposed approach outperforms previous CNN-based and traditional methods, as demonstrated in our experiments.
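The two training-time ideas in this abstract, a grayscale input derived from the multispectral image and a randomly varied downsampling ratio, can be sketched as follows. This is a minimal illustration under stated assumptions: the band-average grayscale transform, the nearest-neighbor decimation, the candidate ratios, and all function names are hypothetical choices, not details taken from the paper.

```python
import random


def to_grayscale(ms_image):
    """Average the bands of an H x W x B image (nested lists) into H x W."""
    return [[sum(pixel) / len(pixel) for pixel in row] for row in ms_image]


def downsample(image, ratio):
    """Nearest-neighbor decimation: keep every `ratio`-th row and column."""
    return [row[::ratio] for row in image[::ratio]]


def make_training_sample(ms_image, ratios=(2, 3, 4), rng=random):
    """Build one self-supervised sample from a multispectral image.

    Assumed setup (hypothetical): the model sees a grayscale version of
    the image plus a colour guide degraded by a randomly chosen ratio,
    and is trained to reproduce the original multispectral image.
    """
    ratio = rng.choice(ratios)              # random ratio instead of a fixed one
    gray_input = to_grayscale(ms_image)     # stands in for the panchromatic band
    color_guide = downsample(ms_image, ratio)
    return gray_input, color_guide, ms_image, ratio


# A tiny 4 x 4 image with 3 bands, filled with made-up values.
ms = [[[float(r), float(c), 0.0] for c in range(4)] for r in range(4)]
gray, guide, target, ratio = make_training_sample(ms, rng=random.Random(0))
```

Drawing a fresh ratio for every sample exposes the model to several degradation levels, which is the intuition behind replacing a single fixed downscale ratio.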
