Ehsan Zobeidi

Distributed Gaussian Process Mapping for Robot Teams with Time-varying Communication

Oct 12, 2021

Abstract: Multi-agent mapping is a fundamentally important capability for autonomous robot task coordination and execution in complex environments. While successful algorithms have been proposed for mapping using individual platforms, cooperative online mapping for teams of robots remains largely a challenge. We focus on probabilistic variants of mapping due to their potential utility in downstream tasks such as uncertainty-aware path-planning. A critical question in enabling this capability is how to process and aggregate incrementally observed local information among individual platforms, especially when their ability to communicate is intermittent. We put forth an Incremental Sparse Gaussian Process (GP) methodology for multi-robot mapping, where the regression is over a truncated signed-distance field (TSDF). Doing so permits each robot in the network to track a local estimate of a pseudo-point approximation of the GP posterior and perform weighted averaging of its parameters with those of its (possibly time-varying) set of neighbors. We establish conditions on the pseudo-point representation, as well as on the communication protocol, such that robots' local GPs converge to the one with globally aggregated information. We further provide experiments that corroborate our theoretical findings for probabilistic multi-robot mapping.
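
To make the idea of weighted averaging over a time-varying neighbor set concrete, here is a minimal sketch of plain average consensus. It is not the paper's algorithm: the parameter vectors are arbitrary stand-ins for GP posterior parameters, and the Metropolis weighting rule, the function names (`metropolis_weights`, `consensus_step`, `run_consensus`), and the alternating edge schedule are all assumptions chosen for illustration.

```python
def metropolis_weights(n, edges):
    """Build an n x n symmetric, doubly stochastic weight matrix
    for an undirected graph given as a list of (i, j) edges."""
    degree = [0] * n
    for i, j in edges:
        degree[i] += 1
        degree[j] += 1
    W = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        w = 1.0 / (1 + max(degree[i], degree[j]))
        W[i][j] = w
        W[j][i] = w
    for i in range(n):
        # Diagonal is still zero here, so sum(W[i]) is the off-diagonal mass.
        W[i][i] = 1.0 - sum(W[i])
    return W


def consensus_step(params, edges):
    """Each robot replaces its parameter vector with a weighted
    average of its own vector and those of its current neighbors."""
    n = len(params)
    dim = len(params[0])
    W = metropolis_weights(n, edges)
    return [
        [sum(W[i][j] * params[j][k] for j in range(n)) for k in range(dim)]
        for i in range(n)
    ]


def run_consensus(params, graph_schedule, rounds):
    """Cycle through a schedule of edge lists (hypothetical link pattern)."""
    for t in range(rounds):
        params = consensus_step(params, graph_schedule[t % len(graph_schedule)])
    return params


# Four robots, two-dimensional stand-in parameters (illustrative values).
initial = [[1.0, 0.0], [2.0, 4.0], [3.0, 0.0], [6.0, 0.0]]
# No single graph is connected, but the union over time is the path 0-1-2-3.
schedule = [[(0, 1), (2, 3)], [(1, 2)]]
final = run_consensus(initial, schedule, rounds=200)
```

In this toy setup every robot's vector approaches the network-wide average even though no single communication graph is connected, because the weights are doubly stochastic and the union of the graphs over time is connected.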

A Deep Signed Directional Distance Function for Object Shape Representation

Jul 23, 2021

Abstract: Neural networks that map 3D coordinates to signed distance function (SDF) or occupancy values have enabled high-fidelity implicit representations of object shape. This paper develops a new shape model that allows synthesizing novel distance views by optimizing a continuous signed directional distance function (SDDF). Similar to deep SDF models, our SDDF formulation can represent whole categories of shapes and complete or interpolate across shapes from partial input data. Unlike an SDF, which measures distance to the nearest surface in any direction, an SDDF measures distance in a given direction. This allows training an SDDF model without 3D shape supervision, using only distance measurements, which are readily available from depth cameras or Lidar sensors. Our model also removes post-processing steps like surface extraction or rendering by directly predicting distance at arbitrary locations and viewing directions. Unlike deep view-synthesis techniques, such as Neural Radiance Fields, which train high-capacity black-box models, our model encodes by construction the property that SDDF values decrease linearly along the viewing direction. This structural constraint not only results in dimensionality reduction but also provides analytical confidence about the accuracy of SDDF predictions, regardless of the distance to the object surface.
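
The linear-decrease property can be checked on a shape whose directional distance has a closed form. The sketch below uses a sphere and is not the paper's learned model: the function name `sphere_directional_distance` and the sign convention (distance to the point where the line first enters the sphere, which becomes negative once that point is behind the query location) are assumptions made for illustration.

```python
import math


def sphere_directional_distance(p, d, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from point p to the sphere surface along unit direction d.

    Solves |p + t*d - center| = radius for t and returns the smaller root,
    i.e. the entry point of the line into the sphere. Returns math.inf when
    the line misses the sphere. The sign convention is an assumption here.
    """
    oc = [pi - ci for pi, ci in zip(p, center)]
    b = sum(o * di for o, di in zip(oc, d))       # projection of oc onto d
    c = sum(o * o for o in oc) - radius ** 2
    disc = b * b - c
    if disc < 0:
        return math.inf
    return -b - math.sqrt(disc)


def shift(p, d, t):
    """Move point p by distance t along direction d."""
    return tuple(pi + t * di for pi, di in zip(p, d))


p0 = (0.0, 0.0, -3.0)
view = (0.0, 0.0, 1.0)            # unit viewing direction
h0 = sphere_directional_distance(p0, view)

# Moving t units along the viewing direction lowers the value by exactly t.
for t in (0.5, 1.0, 2.5):
    h_t = sphere_directional_distance(shift(p0, view, t), view)
    assert abs(h_t - (h0 - t)) < 1e-12
```

Because the value along a fixed ray is determined by its value at a single point on that ray, a model that builds in this structure only has to represent one value per ray, which is one way to read the dimensionality reduction mentioned in the abstract.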

Dense Incremental Metric-Semantic Mapping for Multi-Agent Systems via Sparse Gaussian Process Regression

Mar 30, 2021

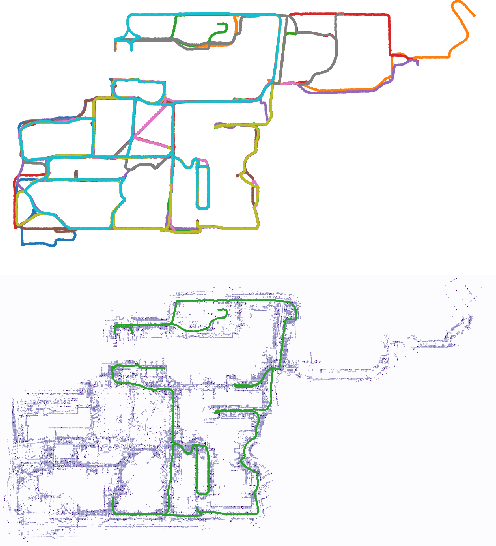

Abstract: We develop an online probabilistic metric-semantic mapping approach for mobile robot teams relying on streaming RGB-D observations. The generated maps contain full continuous distributional information about the geometric surfaces and semantic labels (e.g., chair, table, wall). Our approach is based on online Gaussian Process (GP) training and inference, and avoids the complexity of GP classification by regressing a truncated signed distance function (TSDF) of the regions occupied by different semantic classes. Online regression is enabled through a sparse pseudo-point approximation of the GP posterior. To scale to large environments, we further consider spatial domain partitioning via an octree data structure with overlapping leaves. An extension to the multi-robot setting is developed by having each robot execute its own online measurement update and then combine its posterior parameters via local weighted geometric averaging with those of its neighbors. This yields a distributed information processing architecture in which the GP map estimates of all robots converge to a common map of the environment while relying only on local one-hop communication. Our experiments demonstrate the effectiveness of the probabilistic metric-semantic mapping technique in 2-D and 3-D environments in both single and multi-robot settings.
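
For Gaussian densities, a weighted geometric average has a simple closed form: the result is again Gaussian, with precision equal to the weighted sum of the input precisions and precision-weighted mean equal to the weighted sum of the inputs' precision-weighted means. The sketch below shows this for scalar Gaussians only. It is an illustration under that assumption, not the paper's update for pseudo-point GP posteriors, and the function name `geometric_average` and the example numbers are hypothetical.

```python
def geometric_average(gaussians, weights):
    """Weighted geometric average of 1-D Gaussians.

    gaussians: list of (mean, variance) pairs with variance > 0
    weights:   nonnegative weights that sum to 1
    Returns the (mean, variance) of the normalized product of the
    densities raised to their weights.
    """
    # Work in information form: precision and precision-weighted mean.
    precision = sum(w / var for (_, var), w in zip(gaussians, weights))
    info_mean = sum(w * mean / var for (mean, var), w in zip(gaussians, weights))
    return info_mean / precision, 1.0 / precision


# A robot fusing its own estimate with one neighbor's (illustrative numbers).
own = (0.0, 1.0)         # mean 0, variance 1
neighbor = (3.0, 0.5)    # mean 3, variance 0.5 (more confident)
fused_mean, fused_var = geometric_average([own, neighbor], [0.5, 0.5])
```

With equal weights the fused mean lands closer to the more confident neighbor, since each input contributes in proportion to its precision.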
