Eduards Sidorovics

Differentiable Disentanglement Filter: an Application Agnostic Core Concept Discovery Probe

Jul 24, 2019

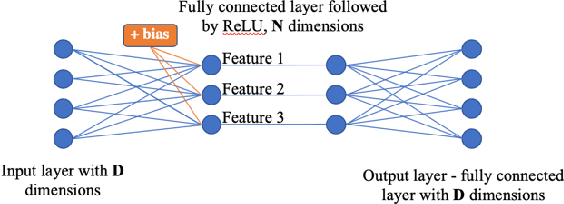

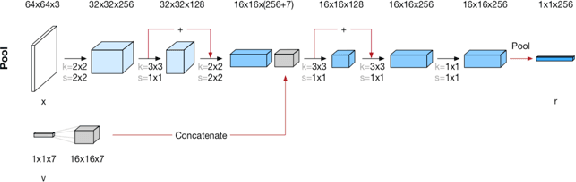

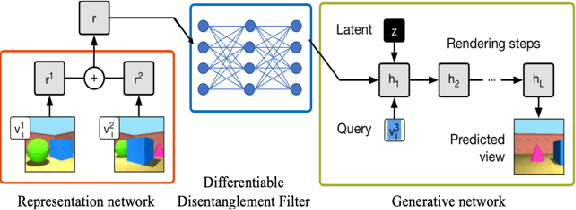

Abstract: It has long been speculated that deep neural networks function by discovering a hierarchical set of domain-specific core concepts or patterns, which are then combined to recognize more elaborate concepts for classification or other machine learning tasks. Meanwhile, disentangling the actual core concepts ingrained in word embeddings (like word2vec or BERT) or deep convolutional image recognition neural networks (like PG-GAN) is difficult, and some success has been achieved only recently. In this paper we propose a novel neural network nonlinearity named Differentiable Disentanglement Filter (DDF), which can be transparently inserted into any existing neural network layer to automatically disentangle the core concepts used by that layer. The DDF probe is inspired by the obscure properties of hyperdimensional computing theory. The DDF proof-of-concept implementation is shown to disentangle concepts within the neural 3D scene representation, a task vital for visual grounding of natural language narratives.
