Eduard Frankford

A Survey Study on the State of the Art of Programming Exercise Generation using Large Language Models

May 30, 2024Abstract:This paper analyzes Large Language Models (LLMs) with regard to their programming exercise generation capabilities. Through a survey study, we defined the state of the art, extracted their strengths and weaknesses and finally proposed an evaluation matrix, helping researchers and educators to decide which LLM is the best fitting for the programming exercise generation use case. We also found that multiple LLMs are capable of producing useful programming exercises. Nevertheless, there exist challenges like the ease with which LLMs might solve exercises generated by LLMs. This paper contributes to the ongoing discourse on the integration of LLMs in education.

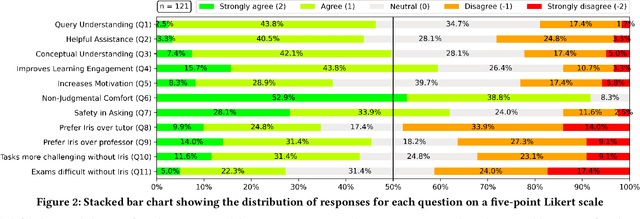

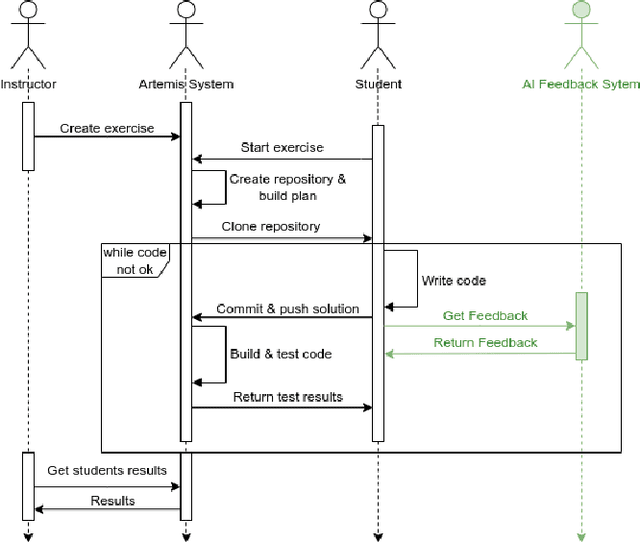

Iris: An AI-Driven Virtual Tutor For Computer Science Education

May 09, 2024

Abstract:Integrating AI-driven tools in higher education is an emerging area with transformative potential. This paper introduces Iris, a chat-based virtual tutor integrated into the interactive learning platform Artemis that offers personalized, context-aware assistance in large-scale educational settings. Iris supports computer science students by guiding them through programming exercises and is designed to act as a tutor in a didactically meaningful way. Its calibrated assistance avoids revealing complete solutions, offering subtle hints or counter-questions to foster independent problem-solving skills. For each question, it issues multiple prompts in a Chain-of-Thought to GPT-3.5-Turbo. The prompts include a tutor role description and examples of meaningful answers through few-shot learning. Iris employs contextual awareness by accessing the problem statement, student code, and automated feedback to provide tailored advice. An empirical evaluation shows that students perceive Iris as effective because it understands their questions, provides relevant support, and contributes to the learning process. While students consider Iris a valuable tool for programming exercises and homework, they also feel confident solving programming tasks in computer-based exams without Iris. The findings underscore students' appreciation for Iris' immediate and personalized support, though students predominantly view it as a complement to, rather than a replacement for, human tutors. Nevertheless, Iris creates a space for students to ask questions without being judged by others.

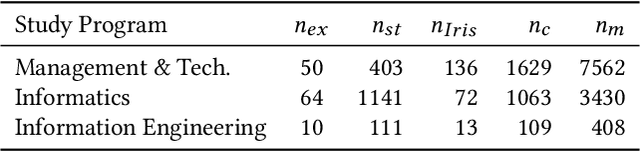

AI-Tutoring in Software Engineering Education

Apr 05, 2024

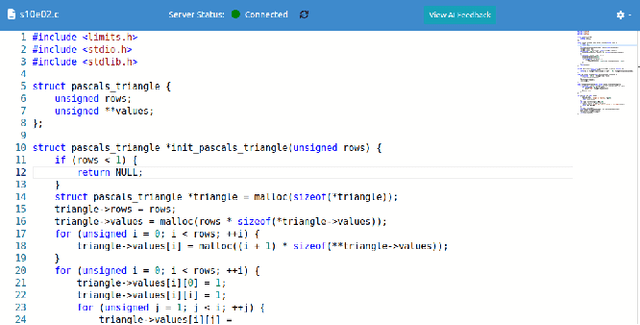

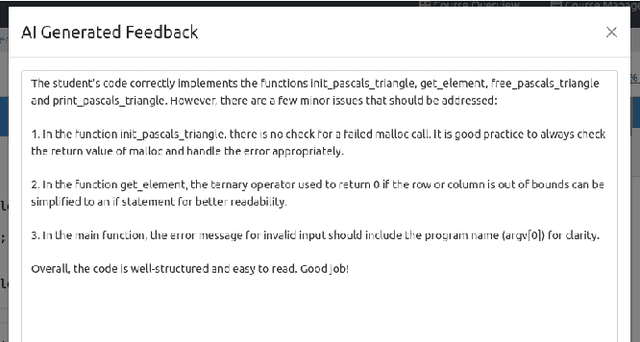

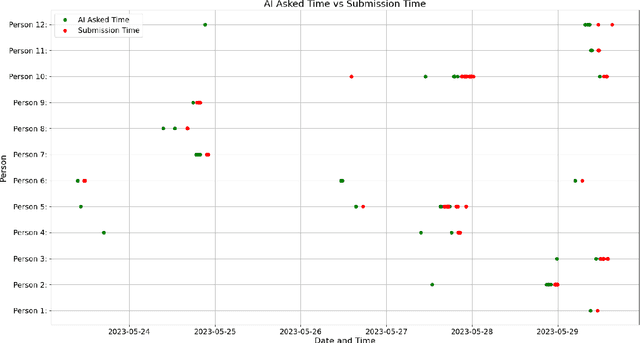

Abstract:With the rapid advancement of artificial intelligence (AI) in various domains, the education sector is set for transformation. The potential of AI-driven tools in enhancing the learning experience, especially in programming, is immense. However, the scientific evaluation of Large Language Models (LLMs) used in Automated Programming Assessment Systems (APASs) as an AI-Tutor remains largely unexplored. Therefore, there is a need to understand how students interact with such AI-Tutors and to analyze their experiences. In this paper, we conducted an exploratory case study by integrating the GPT-3.5-Turbo model as an AI-Tutor within the APAS Artemis. Through a combination of empirical data collection and an exploratory survey, we identified different user types based on their interaction patterns with the AI-Tutor. Additionally, the findings highlight advantages, such as timely feedback and scalability. However, challenges like generic responses and students' concerns about a learning progress inhibition when using the AI-Tutor were also evident. This research adds to the discourse on AI's role in education.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge