Edmundo Monteiro

Computational Complexity-Constrained Spectral Efficiency Analysis for 6G Waveforms

Jul 08, 2024

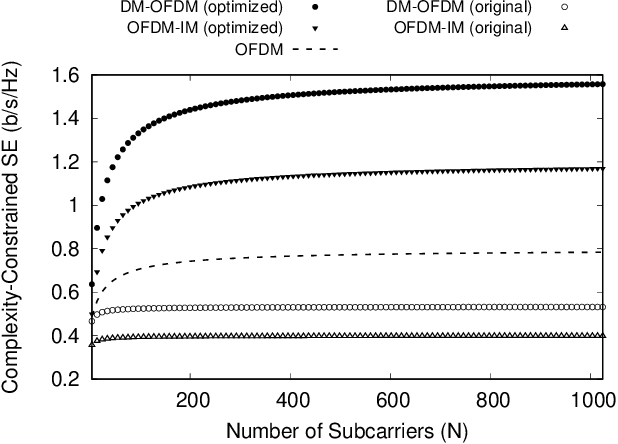

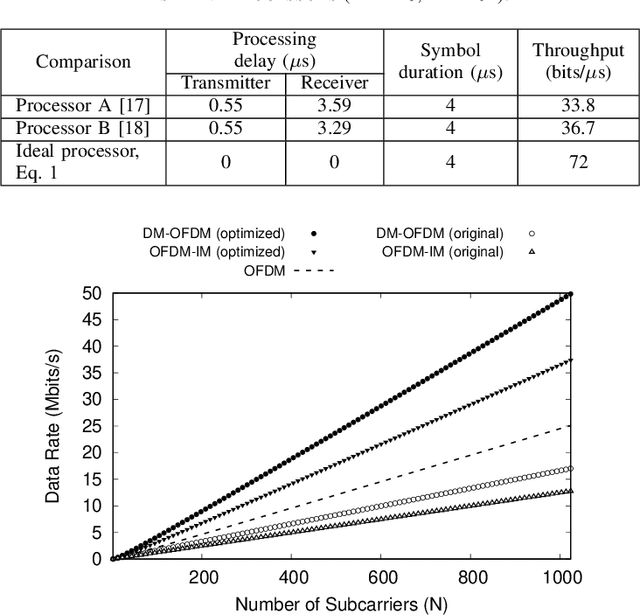

Abstract: In this work, we present a tutorial on how to account for the computational time complexity overhead of signal processing in the spectral efficiency (SE) analysis of wireless waveforms. Our methodology is particularly relevant in scenarios where achieving higher SE entails a penalty in complexity, a common trade-off in 6G candidate waveforms. We consider that SE derives from the data rate, which is impacted by time-dependent overheads. Thus, neglecting the computational complexity overhead in the SE analysis grants an unfair advantage to more computationally complex waveforms, as they require larger computational resources to keep the signal processing runtime below the symbol period. We demonstrate our points with two case studies. In the first, we refer to IEEE 802.11a-compliant baseband processors from the literature to show that their runtime significantly impacts the SE perceived by upper layers. In the second case study, we show that waveforms considered less efficient in terms of SE can outperform their more computationally expensive counterparts if provided with equivalent high-performance computational resources. Based on these cases, we believe our tutorial can address the comparative SE analysis of waveforms that operate under different computational resource constraints.

Fast Computation of the Discrete Fourier Transform Square Index Coefficients

Jun 28, 2024

Abstract: The $N$-point discrete Fourier transform (DFT) is a cornerstone for several signal processing applications. Many of these applications operate in real-time, making the computational complexity of the DFT a critical performance indicator to be optimized. Unfortunately, whether the $\mathcal{O}(N\log_2 N)$ time complexity of the fast Fourier transform (FFT) can be outperformed remains an unresolved question in the theory of computation. However, in many applications of the DFT -- such as compressive sensing, image processing, and wideband spectral analysis -- only a small fraction of the output signal needs to be computed because the signal is sparse. This motivates the development of algorithms that compute specific DFT coefficients more efficiently than the FFT algorithm. In this article, we show that the number of input points needed to compute some DFT coefficients can be dramatically reduced by means of elementary mathematical properties. We present an algorithm that compacts the square index coefficients (SICs) of the DFT (i.e., $X_{k\sqrt{N}}$, $k=0,1,\ldots,\sqrt{N}-1$, for a square number $N$) from $N$ to $\sqrt{N}$ points at the expense of $N-1$ complex sums and no multiplication. Based on this, any regular DFT algorithm can be straightforwardly applied to compute the SICs with a reduced number of complex multiplications. If $N$ is a power of two, one can combine our algorithm with the FFT to calculate all SICs in $\mathcal{O}(\sqrt{N}\log_2\sqrt{N})$ time complexity.
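The compaction idea can be illustrated with a minimal Python sketch. It is not the authors' implementation: it only assumes the elementary periodicity property that $e^{-j2\pi k n/\sqrt{N}}$ repeats every $\sqrt{N}$ samples in $n$, so the input can be folded into $\sqrt{N}$ partial sums before a $\sqrt{N}$-point DFT is applied. The function names (`dft`, `compact_for_sics`, `square_index_coefficients`) are hypothetical, and the naive DFT stands in for whatever DFT or FFT routine one would actually use.

```python
import cmath
import math


def dft(x):
    """Naive O(M^2) DFT; a stand-in for any regular DFT/FFT routine."""
    m = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / m) for n in range(m))
        for k in range(m)
    ]


def compact_for_sics(x):
    """Fold an N-point signal (N a perfect square) into sqrt(N) points.

    Uses additions only: y[m] = x[m] + x[m + r] + ... + x[m + (r - 1) * r],
    where r = sqrt(N). Assumed folding rule, derived from the periodicity
    of the twiddle factors at indices that are multiples of sqrt(N).
    """
    n = len(x)
    r = math.isqrt(n)
    if r * r != n:
        raise ValueError("signal length must be a perfect square")
    return [sum(x[m + l * r] for l in range(r)) for m in range(r)]


def square_index_coefficients(x):
    """Return X[k * sqrt(N)] for k = 0..sqrt(N)-1 via a sqrt(N)-point DFT."""
    return dft(compact_for_sics(x))
```

For a 16-point input, `square_index_coefficients(x)` should agree (up to floating-point error) with entries 0, 4, 8, and 12 of the full 16-point DFT of `x`.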

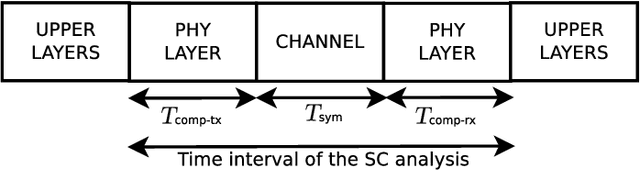

Computation-Limited Signals: A Channel Capacity Regime Constrained by Computational Complexity

Oct 09, 2023

Abstract: In this letter, we introduce computation-limited (comp-limited) signals, a communication capacity regime in which the computational time complexity overhead of the signal is the key constraint -- rather than power or bandwidth -- on the overall communication capacity. To relate capacity and time complexity, we propose a novel mathematical framework that builds on concepts of information theory and computational complexity. In particular, the algorithmic capacity stands for the ratio between the upper-bound number of bits modulated in a symbol and the lower-bound time complexity required to turn these bits into a communication symbol. By setting this ratio as a function of the channel resources, we classify a given signal design as comp-limited if its algorithmic capacity tends to zero as the channel resources grow. As a use case, we show that an uncoded OFDM transmitter is comp-limited unless the lower-bound computational complexity of the $N$-point DFT problem is shown to be $\Omega(N)$, which remains an open challenge in theoretical computer science.
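As a rough illustration of the OFDM use case, consider the following sketch. The notation is assumed here and is not taken from the letter: $B(N)$ is the number of bits per symbol, $T(N)$ is the time complexity of building the symbol, and each of the $N$ subcarriers carries one point of an $M$-ary constellation. If the FFT's $N\log_2 N$ cost is taken as the complexity of the transmitter, the ratio vanishes as $N$ grows, whereas a hypothetical linear-time DFT (with some constant $c > 0$) would keep it bounded away from zero:

$$
\frac{B(N)}{T(N)} = \frac{N\log_2 M}{N\log_2 N} = \frac{\log_2 M}{\log_2 N} \xrightarrow[N\to\infty]{} 0,
\qquad
\frac{B(N)}{T(N)} = \frac{N\log_2 M}{cN} = \frac{\log_2 M}{c}.
$$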
