Edgar Galvan

An Analysis on the Effects of Evolving the Monte Carlo Tree Search Upper Confidence for Trees Selection Policy on Unimodal, Multimodal and Deceptive Landscapes

Nov 21, 2023

Abstract: Monte Carlo Tree Search (MCTS) is a best-first sampling method employed in the search for optimal decisions. The effectiveness of MCTS relies on the construction of its statistical tree, with the selection policy playing a crucial role. A selection policy that works particularly well in MCTS is the Upper Confidence Bounds for Trees, referred to as UCT. The research community has also put forth more sophisticated bounds aimed at enhancing MCTS performance on specific problem domains. Thus, while MCTS UCT generally performs well, there may be variants that outperform it. This has led to various efforts to evolve selection policies for use in MCTS. While all of these previous works are inspiring, none have undertaken an in-depth analysis to shed light on the circumstances in which an evolved alternative to MCTS UCT might prove advantageous. Most of these studies have focused on a single type of problem. In sharp contrast, this work explores the use of five functions of different natures, ranging from unimodal to multimodal and deceptive functions. We illustrate how the evolution of MCTS UCT can yield benefits in multimodal and deceptive scenarios, whereas MCTS UCT is robust in all of the functions used in this work.
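For readers unfamiliar with the UCT selection policy mentioned in the abstract, the following is a minimal sketch of one commonly used form of the rule: pick the child that maximises the mean reward plus an exploration bonus, `Q/n + c * sqrt(ln(N) / n)`. The function names, the `(total_reward, visits)` tuple layout, and the default constant `c = sqrt(2)` are illustrative assumptions, not details taken from the paper.

```python
import math

def uct_score(total_reward, visits, parent_visits, c=math.sqrt(2)):
    """One common form of the UCT value for a child node (assumed, not from the paper)."""
    if visits == 0:
        # Unvisited children are tried first.
        return math.inf
    exploit = total_reward / visits                             # mean reward so far
    explore = c * math.sqrt(math.log(parent_visits) / visits)   # exploration bonus
    return exploit + explore

def select_child(children, c=math.sqrt(2)):
    """Return the index of the child with the highest UCT score.

    `children` is a list of (total_reward, visits) tuples (hypothetical layout);
    the parent visit count is taken as the sum of the child visit counts.
    """
    parent_visits = sum(visits for _, visits in children)
    return max(
        range(len(children)),
        key=lambda i: uct_score(children[i][0], children[i][1], parent_visits, c),
    )

# Example: a well-explored child versus a less-visited one.
stats = [(9.0, 10), (4.0, 5)]
print(select_child(stats))         # exploration bonus favours the less-visited child
print(select_child(stats, c=0.0))  # pure exploitation picks the higher mean
```

An evolved selection policy, in the sense discussed in the abstract, would replace the expression inside `uct_score` with a different formula over the same node statistics.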

Neural Architecture Search Using Genetic Algorithm for Facial Expression Recognition

Apr 12, 2023

Abstract: Facial expression is one of the most powerful, natural, and universal signals for human beings to express emotional states and intentions. Accurate and innovative facial expression recognition (FER) approaches are therefore clearly important in Artificial Intelligence. The current common practice for FER is to design convolutional neural network (CNN) architectures by hand, relying on human expertise. However, finding a well-performing architecture is often a very tedious and error-prone process for deep learning researchers. Neural architecture search (NAS) is an area of growing interest, as demonstrated by the large number of scientific works published in recent years, driven by the impressive results achieved. We propose a genetic algorithm approach that uses an ingenious encoding-decoding mechanism to automatically evolve CNNs on FER tasks, attaining high classification accuracy. The experimental results demonstrate that the proposed algorithm achieves the best-known results on the CK+ and FERG datasets as well as competitive results on the JAFFE dataset.

Towards Understanding the Effects of Evolving the MCTS UCT Selection Policy

Feb 07, 2023

Abstract: Monte Carlo Tree Search (MCTS) is a sampling best-first method to search for optimal decisions. The success of MCTS depends heavily on how the MCTS statistical tree is built, and the selection policy plays a fundamental role in this. A selection policy that works particularly well, and is widely adopted in MCTS, is the Upper Confidence Bounds for Trees, referred to as UCT. Other, more sophisticated bounds have been proposed by the community with the goal of improving MCTS performance on particular problems. Thus, while the MCTS UCT generally behaves well, some variants might behave better. As a result, multiple works have proposed evolving a selection policy to be used in MCTS. Although all these works are inspiring, none of them has carried out an in-depth analysis shedding light on the circumstances under which an evolved alternative to MCTS UCT might be beneficial, because each focused on a single type of problem. In sharp contrast, in this work we use five functions of different nature, ranging from a unimodal function through multimodal functions to deceptive functions. We demonstrate how the evolution of the MCTS UCT might be beneficial in multimodal and deceptive scenarios, whereas the MCTS UCT is robust in unimodal scenarios and competitive in the rest of the scenarios used in this study.

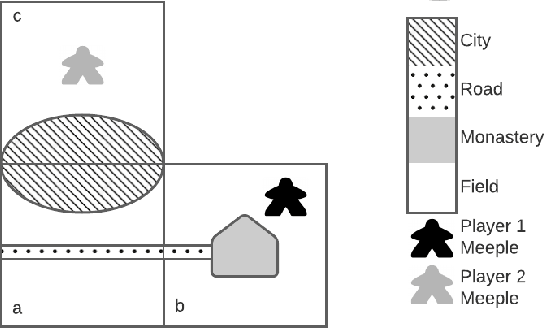

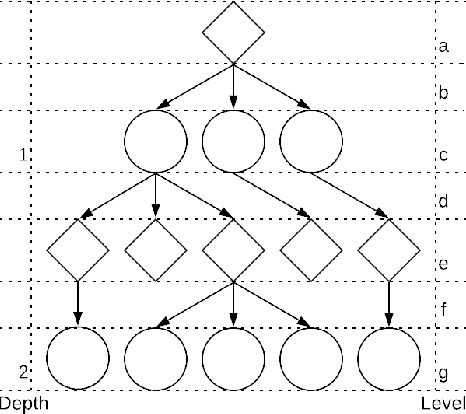

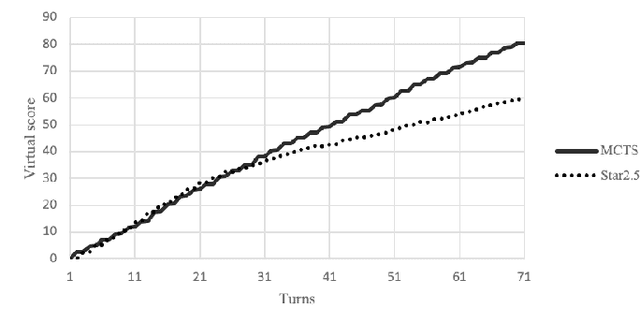

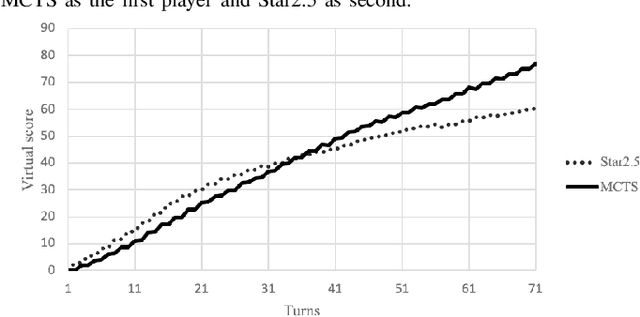

Playing Carcassonne with Monte Carlo Tree Search

Oct 04, 2020

Abstract: Monte Carlo Tree Search (MCTS) is a relatively new sampling method with multiple variants in the literature. These variants can be applied to a wide variety of challenging domains, including board games, video games, and energy-based problems, to mention a few. In this work, we explore the use of the vanilla MCTS and the MCTS with Rapid Action Value Estimation (MCTS-RAVE) in the game of Carcassonne, a stochastic game with a deceptive scoring system on which limited research has been conducted. We compare the strengths of the MCTS-based methods with the Star2.5 algorithm, previously reported to yield competitive results in the game of Carcassonne when a domain-specific heuristic is used to evaluate the game states. We analyse the particularities of the strategies adopted by the algorithms when they share a common reward system. The MCTS-based methods consistently outperformed the Star2.5 algorithm given their ability to find and follow long-term strategies, with the vanilla MCTS exhibiting a more robust game-play than the MCTS-RAVE.
