E. Hossain

Explainable Artificial Intelligence (XAI): An Engineering Perspective

Jan 10, 2021

Abstract: The remarkable advancements in Deep Learning (DL) algorithms have fueled enthusiasm for using Artificial Intelligence (AI) technologies in almost every domain; however, the opaqueness of these algorithms puts a question mark on their application in safety-critical systems. In this regard, the 'explainability' dimension is essential not only to explain the inner workings of black-box algorithms, but also to add the accountability and transparency that are of prime importance for regulators, consumers, and service providers. eXplainable Artificial Intelligence (XAI) is the set of techniques and methods for converting so-called black-box AI algorithms into white-box algorithms, where the results achieved by these algorithms, and the variables, parameters, and steps taken to reach those results, are transparent and explainable. To complement the existing literature on XAI, in this paper we take an 'engineering' approach to illustrate the concepts of XAI. We discuss the stakeholders in XAI and describe the mathematical contours of XAI from an engineering perspective. We then take the autonomous car as a use case and discuss the applications of XAI for its different components, such as object detection, perception, control, and action decision. This work is an exploratory study to identify new avenues of research in the field of XAI.

Distributed Machine Learning for Wireless Communication Networks: Techniques, Architectures, and Applications

Dec 02, 2020

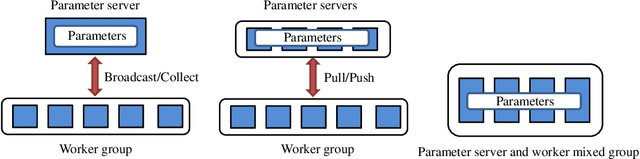

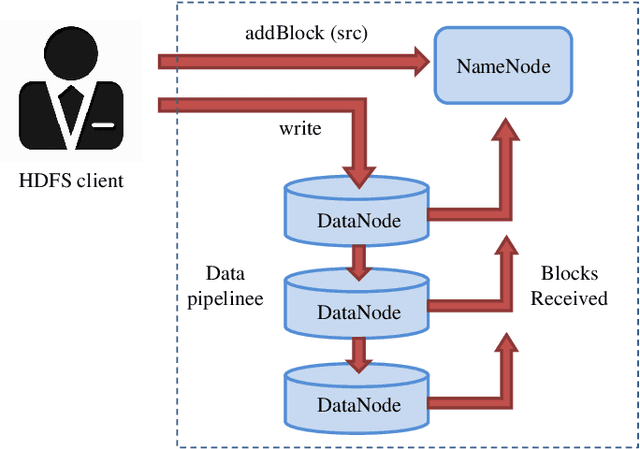

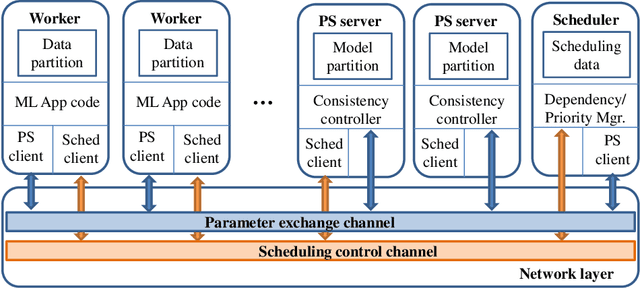

Abstract: Distributed machine learning (DML) techniques, such as federated learning, partitioned learning, and distributed reinforcement learning, have been increasingly applied to wireless communications. This is due to the improved capabilities of terminal devices, explosively growing data volumes, congestion in the radio interfaces, and increasing concerns about data privacy. The unique features of wireless systems, such as large scale, geographically dispersed deployment, user mobility, and massive amounts of data, give rise to new challenges in the design of DML techniques. There is a clear gap in the existing literature in that DML techniques have yet to be systematically reviewed for their applicability to wireless systems. This article bridges the gap by providing a contemporary and comprehensive survey of DML techniques with a focus on wireless networks. Specifically, we review the latest applications of DML in power control, spectrum management, user association, and edge cloud computing. The optimality, scalability, convergence rate, computation cost, and communication overhead of DML are analyzed. We also discuss the potential adversarial attacks faced by DML applications and describe state-of-the-art countermeasures to preserve privacy and security. Last but not least, we point out a number of key issues yet to be addressed and collate potentially interesting and challenging topics for future research.

Deep Learning for Radio Resource Allocation in Multi-Cell Networks

Aug 02, 2018

Abstract: The increased complexity and heterogeneity of emerging 5G and beyond 5G (B5G) wireless networks will require a paradigm shift from traditional resource allocation mechanisms. Deep learning (DL) is a powerful tool in which a multi-layer neural network can be trained to model a resource management algorithm using network data. Resource allocation decisions can therefore be obtained without the intensive online computations that would otherwise be required to solve resource allocation problems. In this context, this article focuses on the application of DL to obtain solutions for radio resource allocation problems in multi-cell networks. Starting with a brief overview of a deep neural network (DNN) as a DL model, relevant DNN architectures, and the data training procedure, we provide an overview of the existing state of the art in applying DL to radio resource allocation. A qualitative comparison is provided in terms of objectives, inputs/outputs, and learning and data training methods. Then, we present a supervised DL model to solve the sub-band and power allocation problem in a multi-cell network. Using data generated by a genetic algorithm, we first train the model and then test its accuracy in predicting the resource allocation solutions. Simulation results show that the trained DL model is able to provide the desired optimal solution 86.3% of the time.
