E. Dmitriev

MGTCOM: Community Detection in Multimodal Graphs

Nov 10, 2022

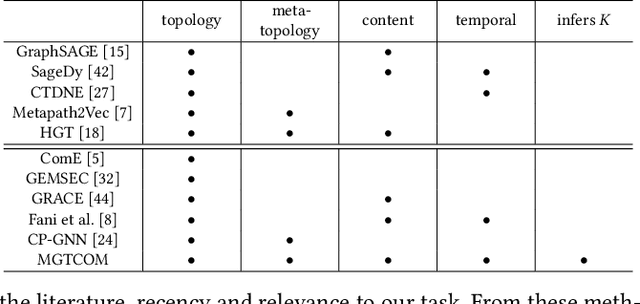

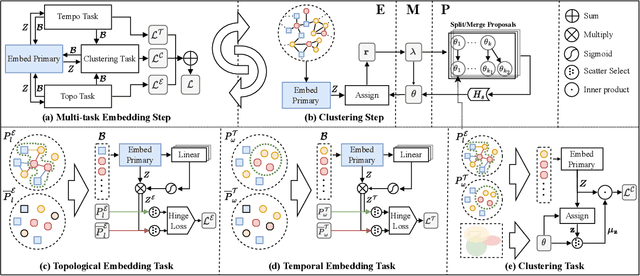

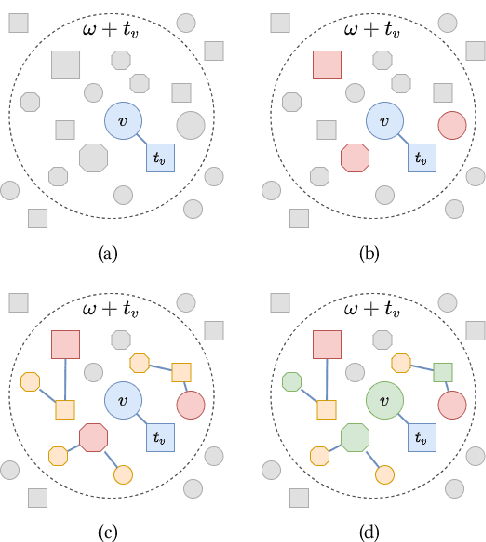

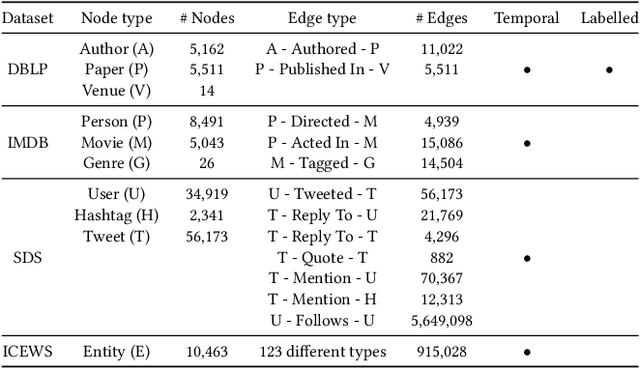

Abstract: Community detection is the task of discovering groups of nodes that share similar patterns within a network. With recent advances in deep learning, methods based on graph representation learning and deep clustering have shown strong results in community detection. However, these methods often rely on the topology of networks, (i) ignoring important properties such as network heterogeneity, temporality, and multimodality. In addition, (ii) the number of communities is not known a priori and is often left to model selection. Finally, (iii) in multimodal networks all nodes are assumed to be symmetrical in their features; while this holds for homogeneous networks, most real-world networks are heterogeneous, and feature availability often varies. In this paper, we propose a novel framework, MGTCOM, that overcomes challenges (i)-(iii). MGTCOM identifies communities through multimodal feature learning, leveraging a new sampling technique for unsupervised learning of temporal embeddings. Importantly, MGTCOM is an end-to-end framework that optimizes network embeddings, communities, and the number of communities in tandem. To assess its performance, we carried out an extensive evaluation on a number of multimodal networks. We found that our method is competitive with the state of the art and performs well in inductive inference.
