Dong-Yeun Koh

EARL-BO: Reinforcement Learning for Multi-Step Lookahead, High-Dimensional Bayesian Optimization

Oct 31, 2024

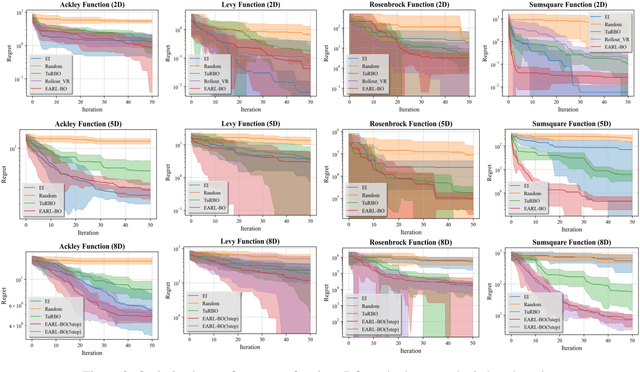

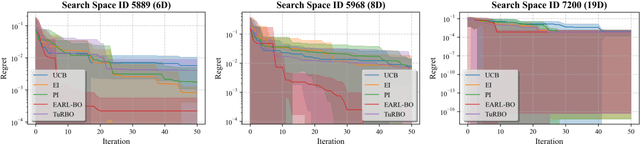

Abstract:Conventional methods for Bayesian optimization (BO) primarily involve one-step optimal decisions (e.g., maximizing expected improvement of the next step). To avoid myopic behavior, multi-step lookahead BO algorithms such as rollout strategies consider the sequential decision-making nature of BO, i.e., as a stochastic dynamic programming (SDP) problem, demonstrating promising results in recent years. However, owing to the curse of dimensionality, most of these methods make significant approximations or suffer scalability issues, e.g., being limited to two-step lookahead. This paper presents a novel reinforcement learning (RL)-based framework for multi-step lookahead BO in high-dimensional black-box optimization problems. The proposed method enhances the scalability and decision-making quality of multi-step lookahead BO by efficiently solving the SDP of the BO process in a near-optimal manner using RL. We first introduce an Attention-DeepSets encoder to represent the state of knowledge to the RL agent and employ off-policy learning to accelerate its initial training. We then propose a multi-task, fine-tuning procedure based on end-to-end (encoder-RL) on-policy learning. We evaluate the proposed method, EARL-BO (Encoder Augmented RL for Bayesian Optimization), on both synthetic benchmark functions and real-world hyperparameter optimization problems, demonstrating significantly improved performance compared to existing multi-step lookahead and high-dimensional BO methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge