Dmitrii Fedotov

Is Everything Fine, Grandma? Acoustic and Linguistic Modeling for Robust Elderly Speech Emotion Recognition

Sep 07, 2020

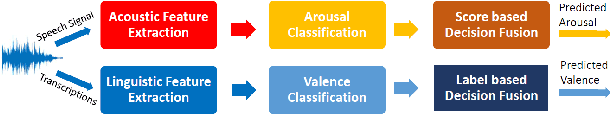

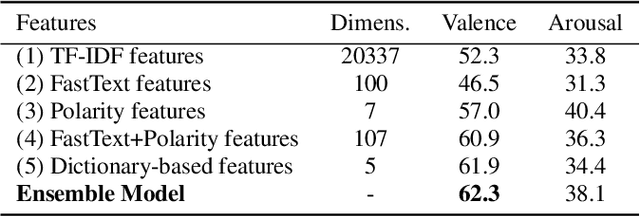

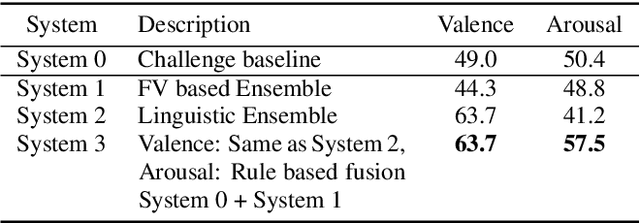

Abstract:Acoustic and linguistic analysis for elderly emotion recognition is an under-studied and challenging research direction, but essential for the creation of digital assistants for the elderly, as well as unobtrusive telemonitoring of elderly in their residences for mental healthcare purposes. This paper presents our contribution to the INTERSPEECH 2020 Computational Paralinguistics Challenge (ComParE) - Elderly Emotion Sub-Challenge, which is comprised of two ternary classification tasks for arousal and valence recognition. We propose a bi-modal framework, where these tasks are modeled using state-of-the-art acoustic and linguistic features, respectively. In this study, we demonstrate that exploiting task-specific dictionaries and resources can boost the performance of linguistic models, when the amount of labeled data is small. Observing a high mismatch between development and test set performances of various models, we also propose alternative training and decision fusion strategies to better estimate and improve the generalization performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge