Diego Sebastián Pérez

Deep Learning for 2D grapevine bud detection

Aug 27, 2020

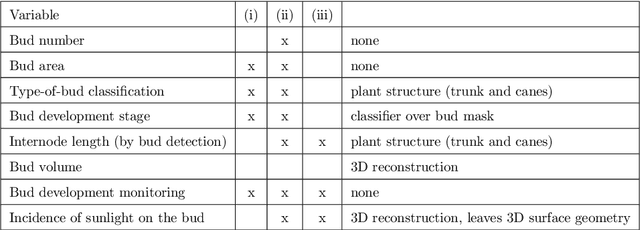

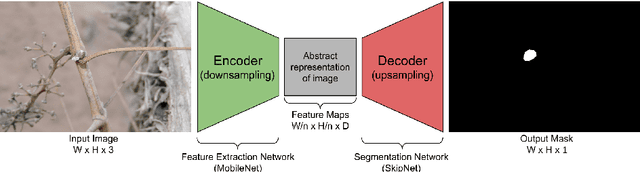

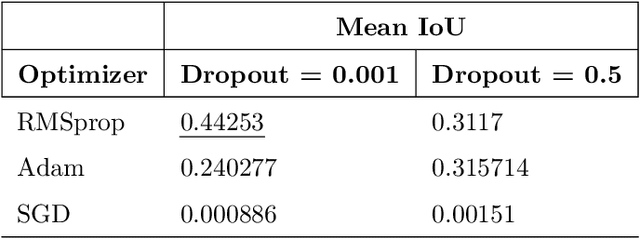

Abstract: In viticulture, visual inspection of the plant is a necessary task for measuring relevant variables. In many cases, these visual inspections are susceptible to automation through computer vision methods. Bud detection is one such visual task, central to measurements and applications such as bud sunlight exposure, autonomous pruning, bud counting, type-of-bud classification, bud geometric characterization, internode length, bud area, and bud development stage, among others. This paper presents a computer method for grapevine bud detection based on a Fully Convolutional Network with a MobileNet architecture (FCN-MN). To validate its performance, this architecture was compared on the detection task with a strong method for bud detection, the scanning-window method with a patch classifier, showing improvements in three aspects of detection: segmentation, correspondence identification, and localization. With its best configuration parameters, the present approach showed a detection precision of $95.6\%$, a detection recall of $93.6\%$, and a mean Dice measure of $89.1\%$ for correct detections (i.e., detections whose mask overlaps the true bud), with small and nearby false alarms (i.e., detections not overlapping the true bud), as shown by a mean pixel area of only $8\%$ of the area of a true bud and a distance (between mass centers) of $1.1$ true bud diameters. We conclude by discussing how these results for FCN-MN would produce sufficiently accurate measurements of the variables bud number, bud area, and internode length, suggesting good performance in a practical setup.
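
For readers unfamiliar with the metrics quoted in this abstract, the following is a minimal sketch of how a Dice measure between two binary masks, and precision and recall from detection counts, are commonly computed. It is not the paper's evaluation code: the function names `dice` and `precision_recall`, the nested-list mask representation, and the toy values are assumptions made for illustration only.

```python
def dice(pred, truth):
    """Dice coefficient between two binary masks (nested lists of 0/1)."""
    # Pixels set in both masks.
    inter = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    # Total number of set pixels in each mask, added together.
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    # Two empty masks are treated as a perfect match (a convention, assumed here).
    return 2 * inter / total if total else 1.0


def precision_recall(tp, fp, fn):
    """Precision and recall from counts of true/false positives and false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy masks (hypothetical values): 3 pixels each, 2 of them shared.
pred_mask = [[0, 1, 1],
             [0, 1, 0]]
true_mask = [[0, 1, 0],
             [0, 1, 1]]
score = dice(pred_mask, true_mask)          # 2 * 2 / (3 + 3)
p, r = precision_recall(tp=9, fp=1, fn=3)   # toy counts, not from the paper
```

In this toy case the Dice score is 2/3, precision is 0.9, and recall is 0.75.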

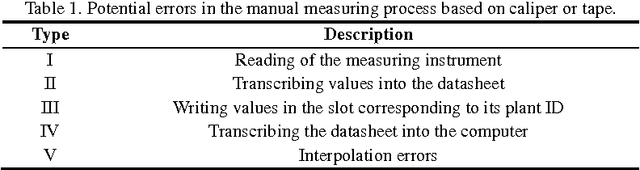

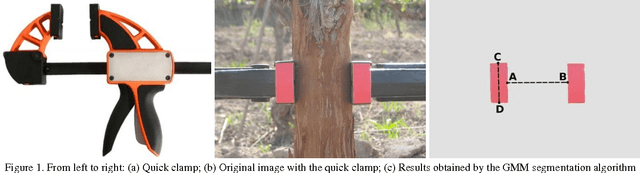

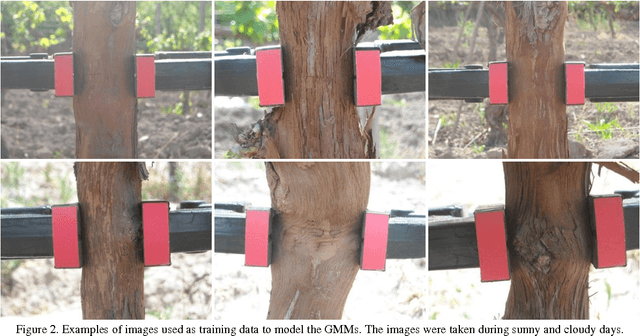

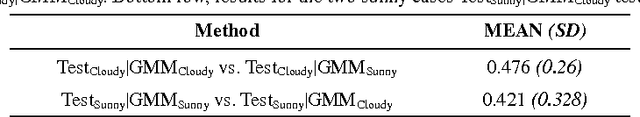

Computer Vision Approach for Low Cost, High Precision Measurement of Grapevine Trunk Diameter in Outdoor Conditions

May 09, 2016

Abstract: Trunk diameter is a variable of agricultural interest, used mainly in the prediction of fruit tree production. It is correlated with the leaf area and biomass of trees, and consequently gives a good estimate of the potential production of the plants. This work presents a low cost, high precision method for measuring the trunk diameter of grapevines based on Computer Vision techniques. Several methods based on Computer Vision and other techniques have been introduced in the literature. These methods present different advantages for crop management: they can be operated by non-expert personnel, with lower operational costs; they result in lower stress levels for expert personnel, avoiding the deterioration of measurement quality over time; and they allow the measurement process to be embedded in larger autonomous systems, so that more measurements can be taken at equivalent cost. To date, all existing autonomous methods either have low precision or have a prohibitive cost for massive agricultural adoption, leaving the manual Vernier caliper or tape measure as the only choice in most situations. In this work we present a semi-autonomous measurement method that can be fully automated, is cost effective for mass adoption, and has a precision competitive with (and slightly better than) the manual caliper method.

Image Classification of Grapevine Buds using Scale-Invariant Features Transform, Bag of Features and Support Vector Machines

May 09, 2016

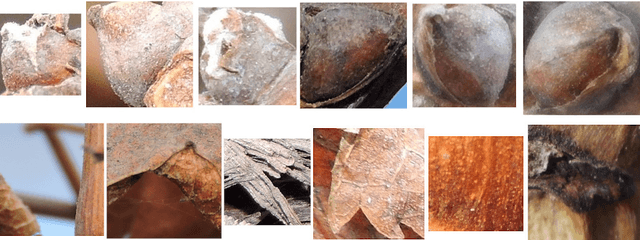

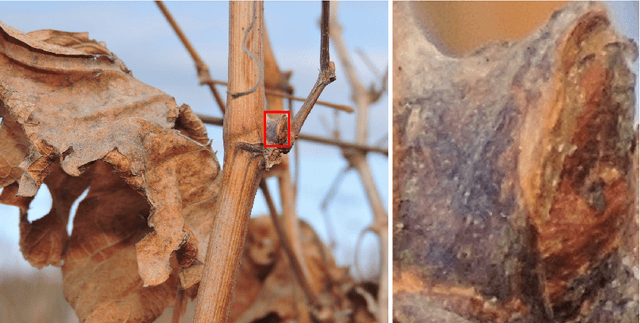

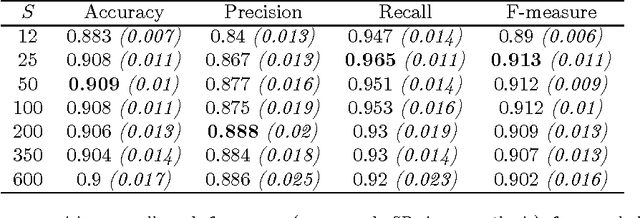

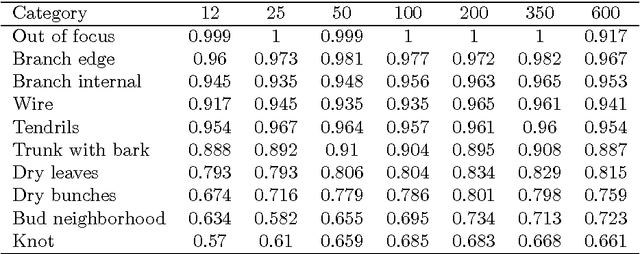

Abstract: In viticulture, there are several applications where bud detection in vineyard images is a necessary task, susceptible to automation through computer vision methods. A common and effective family of visual detection algorithms is the scanning-window type, which slides a (usually) fixed-size window along the original image, classifying each resulting windowed patch as containing or not containing the target object. The most challenging aspect of these otherwise simple algorithms is the classification stage. Motivated by grapevine bud detection in natural field conditions, this paper presents a classification method for images of grapevine buds ranging from 100 to 1600 pixels in diameter, captured outdoors, under natural field conditions, in winter (i.e., no grape bunches, very few leaves, and dormant buds), without artificial background, and with minimum equipment requirements. The proposed method uses well-known computer vision technologies: Scale-Invariant Feature Transform for calculating low-level features, Bag of Features for building an image descriptor, and Support Vector Machines for training a classifier. When evaluated over images containing buds of at least 100 pixels in diameter, the approach achieves a recall higher than 0.9 and a precision of 0.86 over all windowed patches covering the whole bud and down to 60% of it, and scaled up to window patches containing a proportion of 20%-80% of bud versus background pixels. This robustness to the position and size of the window demonstrates its viability for use as the classification stage in a scanning-window detection algorithm.
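
The scanning-window idea described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `sliding_windows` and `detect`, the nested-list image, and the toy `classify` callback are hypothetical, and the callback merely stands in for the SIFT, Bag of Features, and SVM classifier that the paper proposes.

```python
def sliding_windows(width, height, win, stride):
    """Yield (x, y, w, h) boxes of a fixed-size square window slid over the image."""
    for y in range(0, height - win + 1, stride):
        for x in range(0, width - win + 1, stride):
            yield (x, y, win, win)


def detect(image, win, stride, classify):
    """Return the boxes whose patch the classifier labels as containing the target."""
    height, width = len(image), len(image[0])
    hits = []
    for x, y, w, h in sliding_windows(width, height, win, stride):
        # Crop the windowed patch from the image (rows y..y+h, columns x..x+w).
        patch = [row[x:x + w] for row in image[y:y + h]]
        if classify(patch):
            hits.append((x, y, w, h))
    return hits


# Toy 4x4 "image" (hypothetical): a 2x2 block of foreground pixels in the centre.
toy_image = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]

# Placeholder classifier: accepts a patch only if every pixel is foreground.
all_foreground = lambda patch: sum(map(sum, patch)) == len(patch) * len(patch[0])

boxes = detect(toy_image, win=2, stride=1, classify=all_foreground)
```

With these toy inputs, nine windows are scanned and only the one aligned with the central block, `(1, 1, 2, 2)`, is accepted. In practice the classifier's tolerance to partial overlap, which the abstract reports, determines how coarse the stride can be.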
