Diego Gonzalez Morin

Full Body Video-Based Self-Avatars for Mixed Reality: from E2E System to User Study

Aug 24, 2022

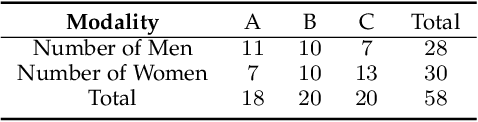

Abstract: In this work we explore the creation of self-avatars through video pass-through in Mixed Reality (MR) applications. We present our end-to-end (E2E) system, which comprises a custom MR video pass-through implementation on a commercial head-mounted display (HMD), our deep learning-based real-time egocentric body segmentation algorithm, and our optimized offloading architecture, which connects the segmentation server to the HMD. To validate this technology, we designed an immersive VR experience in which the user has to walk along a narrow tiled path over an active volcano crater. The study was performed under three body representation conditions: virtual hands, video pass-through with color-based full-body segmentation, and video pass-through with deep learning full-body segmentation. The experience was completed by 30 women and 28 men. To the best of our knowledge, this is the first user study focused on evaluating video-based self-avatars to represent the user in an MR scene. Results showed no significant differences in presence between the body representations, and moderate improvements in some Embodiment components for the full-body representations compared with virtual hands. In terms of Visual Quality, the deep learning algorithm scored better on whole-body perception and overall segmentation quality. We discuss the use of video-based self-avatars and reflect on the evaluation methodology. The proposed E2E solution is at the boundary of the state of the art, so there is still room for improvement before it reaches maturity. However, this solution serves as a crucial starting point for novel distributed MR solutions.
