Diego Fabiano

Impact of multiple modalities on emotion recognition: investigation into 3D facial landmarks, action units, and physiological data

May 17, 2020

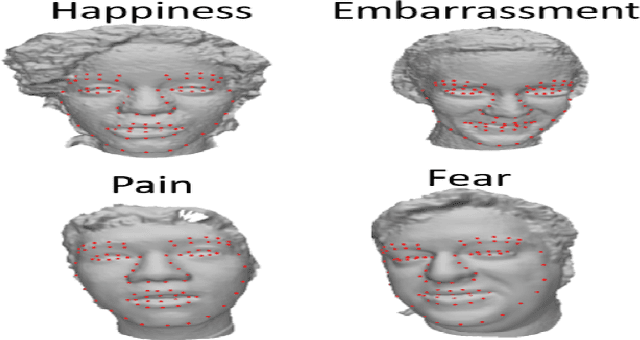

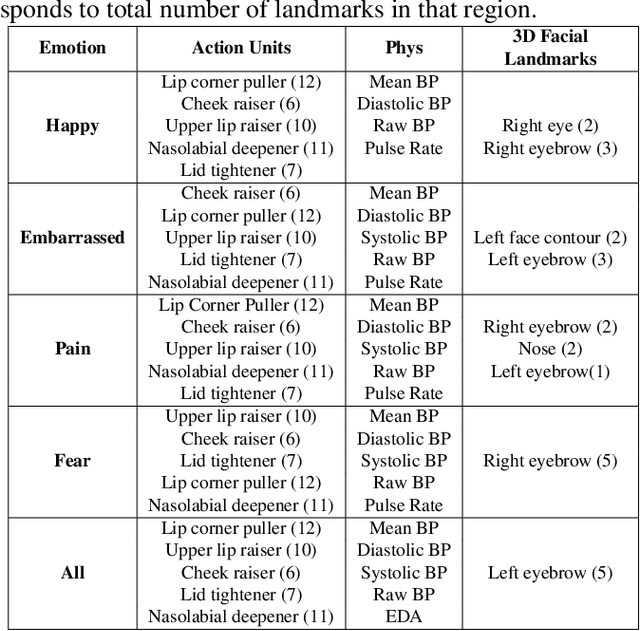

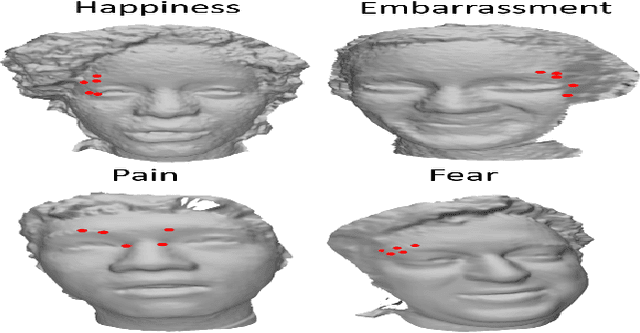

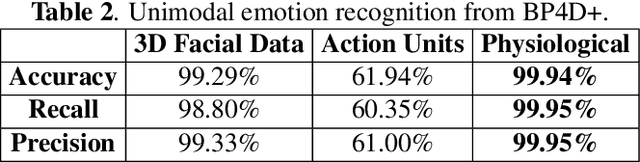

Abstract: To fully understand the complexities of human emotion, it can be advantageous to integrate multiple physical features from different modalities. Considering this, we present an analysis of 3D facial data, action units, and physiological data, and of their impact on emotion recognition. We analyze each modality independently, as well as their fusion, for recognizing human emotion. This analysis includes which features are most important for specific emotions (e.g., happy). Our analysis indicates that both 3D facial landmarks and physiological data are encouraging for expression/emotion recognition. On the other hand, while action units can positively impact emotion recognition when fused with other modalities, the results suggest it is difficult to detect emotion using them in a unimodal fashion.
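
The abstract does not say how the modalities are fused. As a minimal sketch, assuming feature-level (early) fusion, each modality can be standardized on its own and the per-sample vectors concatenated before classification. The function names and the toy values below are hypothetical and are not taken from the paper.

```python
from statistics import mean, pstdev

def zscore_columns(rows):
    """Standardize each column to zero mean and unit variance (constant columns become 0)."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sigmas = [pstdev(c) for c in cols]
    return [
        [(v - m) / s if s > 0 else 0.0 for v, m, s in zip(row, mus, sigmas)]
        for row in rows
    ]

def fuse(*modalities):
    """Early fusion (an assumption): normalize each modality, then concatenate per sample."""
    normalized = [zscore_columns(m) for m in modalities]
    return [sum(parts, []) for parts in zip(*normalized)]

# Hypothetical toy data: three samples per modality.
landmarks = [[0.1, 0.2, 0.3], [0.2, 0.1, 0.4], [0.3, 0.3, 0.5]]  # flattened 3D landmark coordinates
action_units = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]              # action unit activations
physiology = [[72.0], [80.0], [91.0]]                            # e.g. a heart-rate reading

fused = fuse(landmarks, action_units, physiology)
```

Each row of `fused` holds one sample with all three modalities side by side, so a unimodal baseline and the fused variant can be fed to the same classifier for comparison.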

Subject Identification Across Large Expression Variations Using 3D Facial Landmarks

May 17, 2020

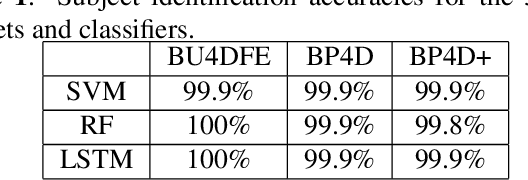

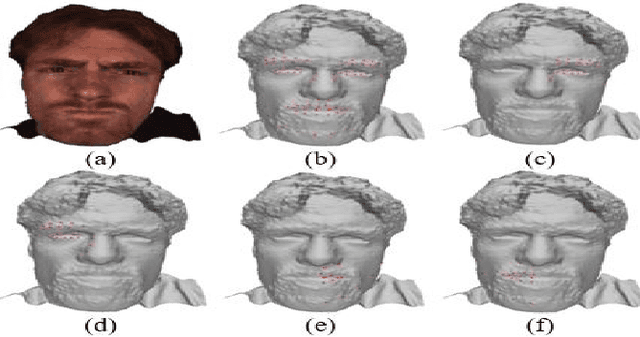

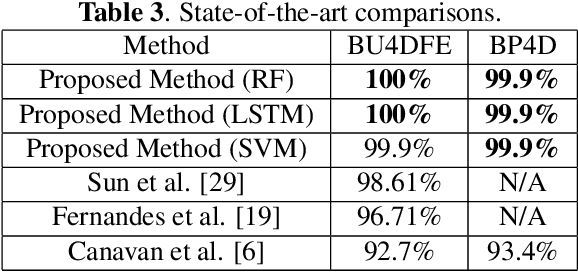

Abstract: Landmark localization is an important first step towards geometry-based vision research, including subject identification. Considering this, we propose to use 3D facial landmarks for the task of subject identification over a range of expressed emotions. Landmarks are detected using a Temporal Deformable Shape Model and used to train a Support Vector Machine (SVM), a Random Forest (RF), and a Long Short-Term Memory (LSTM) neural network for subject identification. As we are interested in subject identification with large variations in expression, we conducted experiments on three emotion-based databases, namely the BU-4DFE, BP4D, and BP4D+ 3D/4D face databases. We show that our proposed method outperforms current state-of-the-art methods for subject identification on BU-4DFE and BP4D. To the best of our knowledge, this is the first work to investigate subject identification on BP4D+, resulting in a baseline for the community.
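
To illustrate the general idea of identifying a subject from 3D landmarks, the sketch below flattens each frame's landmarks into one feature vector and assigns a probe frame to the closest per-subject average. This is only a stand-in: a nearest-centroid rule replaces the SVM, RF, and LSTM models named in the abstract, the centroid-centering step is an assumption, and all names and coordinates are hypothetical.

```python
from math import dist

def landmarks_to_vector(landmarks):
    """Center (x, y, z) landmarks on their centroid and flatten them into one vector."""
    n = len(landmarks)
    centroid = [sum(p[i] for p in landmarks) / n for i in range(3)]
    return [p[i] - centroid[i] for p in landmarks for i in range(3)]

def fit_centroids(vectors, subject_ids):
    """Average the training vectors of each subject (nearest-centroid stand-in)."""
    groups = {}
    for vec, sid in zip(vectors, subject_ids):
        groups.setdefault(sid, []).append(vec)
    return {sid: [sum(col) / len(vs) for col in zip(*vs)] for sid, vs in groups.items()}

def predict(centroids, vector):
    """Return the subject whose average vector is closest in Euclidean distance."""
    return min(centroids, key=lambda sid: dist(centroids[sid], vector))

# Hypothetical toy data: two subjects, two frames each, three landmarks per frame.
frames = [
    [(0, 0, 0), (1, 0, 0), (0.5, 1.0, 0.2)],
    [(0, 0, 0), (1, 0, 0), (0.5, 1.1, 0.2)],
    [(0, 0, 0), (2, 0, 0), (1.0, 1.0, 0.5)],
    [(0, 0, 0), (2, 0, 0), (1.0, 0.9, 0.5)],
]
labels = ["A", "A", "B", "B"]
model = fit_centroids([landmarks_to_vector(f) for f in frames], labels)

# A translated frame of subject A; centering removes the offset.
probe = [(5, 5, 5), (6, 5, 5), (5.5, 6.05, 5.2)]
predicted = predict(model, landmarks_to_vector(probe))
```

Centering makes the feature vector independent of where the face sits in the scanner's coordinate frame. It does not handle rotation or scale, which a real pipeline would need to address separately.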
