Diana Aldana

SASNet: Spatially-Adaptive Sinusoidal Neural Networks

Mar 12, 2025

Abstract: Sinusoidal neural networks (SNNs) have emerged as powerful implicit neural representations (INRs) for low-dimensional signals in computer vision and graphics. They enable high-frequency signal reconstruction and smooth manifold modeling; however, they often suffer from spectral bias, training instability, and overfitting. To address these challenges, we propose SASNet, Spatially-Adaptive SNNs that robustly enhance the capacity of compact INRs to fit detailed signals. SASNet integrates a frequency embedding layer to control frequency components and mitigate spectral bias, along with jointly optimized, spatially-adaptive masks that localize neuron influence, reducing network redundancy and improving convergence stability. Robust to hyperparameter selection, SASNet faithfully reconstructs high-frequency signals without overfitting low-frequency regions. Our experiments show that SASNet outperforms state-of-the-art INRs, achieving strong fitting accuracy, super-resolution capability, and noise suppression, without sacrificing model compactness.

Taming the Frequency Factory of Sinusoidal Networks

Jul 30, 2024

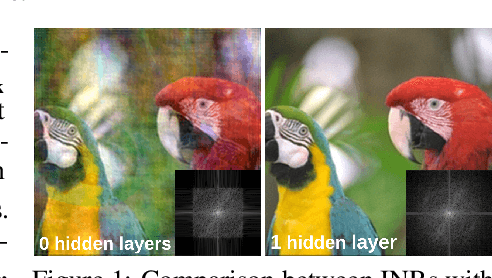

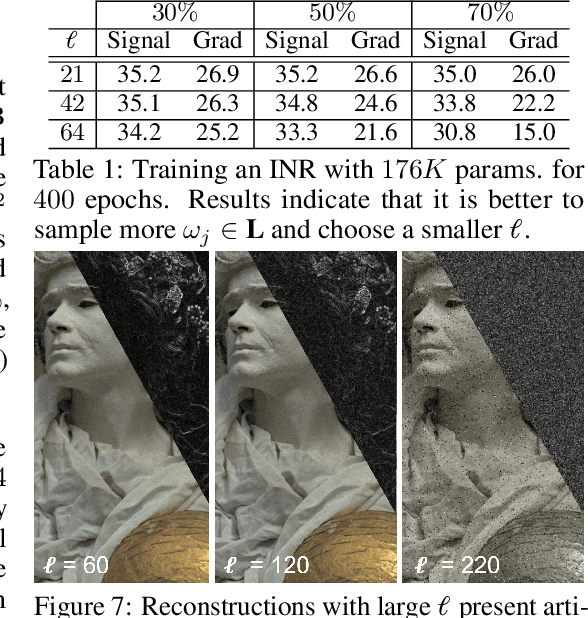

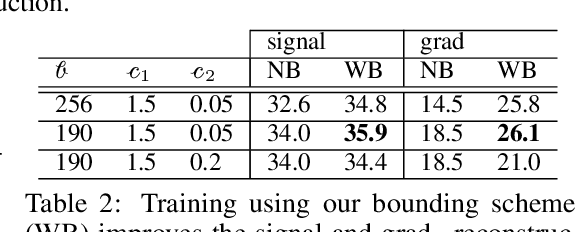

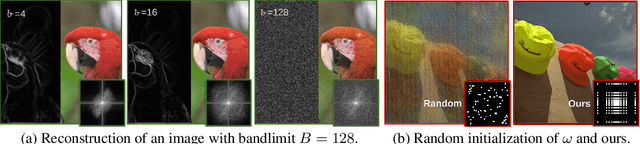

Abstract: This work investigates the structure and representation capacity of sinusoidal MLPs, which have recently shown promising results in encoding low-dimensional signals. This success can be attributed to their smoothness and high representation capacity. The former allows the use of the network's derivatives during training, enabling regularization. However, defining the architecture and initializing its parameters to achieve a desired capacity remains an empirical task. This work provides theoretical and experimental results justifying the capacity property of sinusoidal MLPs and offers control mechanisms for their initialization and training. We approach this from a Fourier series perspective and link the training with the model's spectrum. Our analysis is based on a harmonic expansion of the sinusoidal MLP, which says that the composition of sinusoidal layers produces a large number of new frequencies expressed as integer linear combinations of the input frequencies (the weights of the input layer). We use this novel identity to initialize the input neurons, which act as a sampling of the signal spectrum. We also note that each hidden neuron produces the same frequencies, with amplitudes completely determined by the hidden weights. Finally, we give an upper bound for these amplitudes, which results in a bounding scheme for the network's spectrum during training.
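The harmonic expansion described in this abstract can be illustrated with a small numerical check. The sketch below is not the paper's implementation. It assumes a toy hidden neuron with two input frequencies and no biases, and it relies on the classical Jacobi-Anger identity, under which sin(a1 sin(w1 x) + a2 sin(w2 x)) equals the sum over integers k1, k2 of J_k1(a1) J_k2(a2) sin((k1 w1 + k2 w2) x), where J_k is the Bessel function of the first kind. The function names (`bessel_j`, `hidden_neuron`, `harmonic_expansion`, `amplitude_bound`) and the truncation order `max_k` are hypothetical choices made for illustration. The bound |J_k(a)| <= (|a|/2)^|k| / |k|! is the standard textbook bound and may differ from the one derived in the paper.

```python
import math


def bessel_j(k, a, steps=2000):
    """J_k(a) for integer k via Bessel's integral (midpoint rule on [0, pi])."""
    h = math.pi / steps
    total = 0.0
    for i in range(steps):
        tau = (i + 0.5) * h
        total += math.cos(k * tau - a * math.sin(tau))
    return total * h / math.pi


def hidden_neuron(x, amps, freqs):
    """Toy hidden neuron: a sine applied to a weighted sum of two input sines."""
    (a1, a2), (w1, w2) = amps, freqs
    return math.sin(a1 * math.sin(w1 * x) + a2 * math.sin(w2 * x))


def harmonic_expansion(x, amps, freqs, max_k=12):
    """Same neuron written as a truncated sum over integer combinations k1*w1 + k2*w2."""
    (a1, a2), (w1, w2) = amps, freqs
    ks = range(-max_k, max_k + 1)
    # The amplitudes depend only on the hidden weights a1 and a2, not on x.
    j1 = {k: bessel_j(k, a1) for k in ks}
    j2 = {k: bessel_j(k, a2) for k in ks}
    return sum(
        j1[k1] * j2[k2] * math.sin((k1 * w1 + k2 * w2) * x)
        for k1 in ks
        for k2 in ks
    )


def amplitude_bound(k, a):
    """Classical bound on |J_k(a)|; shows how fast high-order harmonics decay."""
    return (abs(a) / 2.0) ** abs(k) / math.factorial(abs(k))


if __name__ == "__main__":
    amps, freqs = (1.5, 0.8), (3.0, 7.0)
    for x in (0.1, 0.37, 1.0):
        direct = hidden_neuron(x, amps, freqs)
        expanded = harmonic_expansion(x, amps, freqs)
        print(f"x={x}: direct={direct:.10f} expanded={expanded:.10f}")
```

Keeping the hidden weights small makes the bound decay quickly with the order k, which is one way to read the abstract's remark that bounding the amplitudes bounds the network's spectrum during training.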
