Dhruv Srivastava

MALeR: Improving Compositional Fidelity in Layout-Guided Generation

Nov 08, 2025

Abstract:Recent advances in text-to-image models have enabled a new era of creative and controllable image generation. However, generating compositional scenes with multiple subjects and attributes remains a significant challenge. To enhance user control over subject placement, several layout-guided methods have been proposed. However, these methods face numerous challenges, particularly in compositional scenes. Unintended subjects often appear outside the layouts, generated images can be out-of-distribution and contain unnatural artifacts, or attributes bleed across subjects, leading to incorrect visual outputs. In this work, we propose MALeR, a method that addresses each of these challenges. Given a text prompt and corresponding layouts, our method prevents subjects from appearing outside the given layouts while keeping the generated images in-distribution. Additionally, we propose a masked, attribute-aware binding mechanism that prevents attribute leakage, enabling accurate rendering of subjects with multiple attributes, even in complex compositional scenes. Qualitative and quantitative evaluation demonstrates that our method achieves superior performance in compositional accuracy, generation consistency, and attribute binding compared to previous work. MALeR is particularly adept at generating images of scenes with multiple subjects and multiple attributes per subject.

"Previously on ..." From Recaps to Story Summarization

May 19, 2024

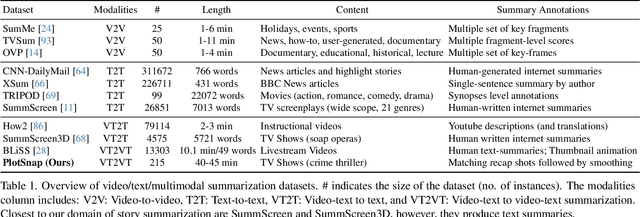

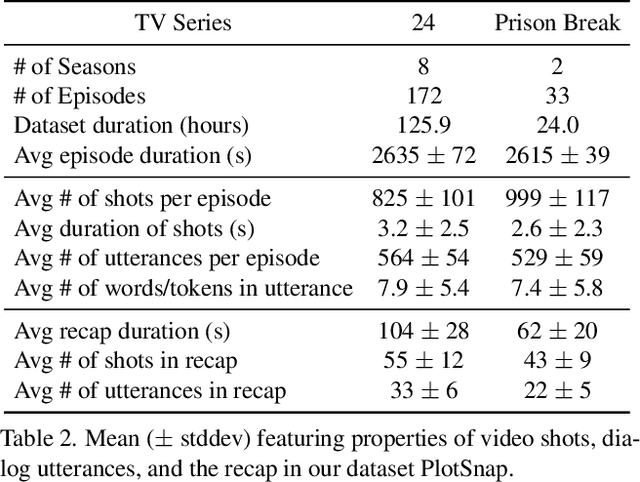

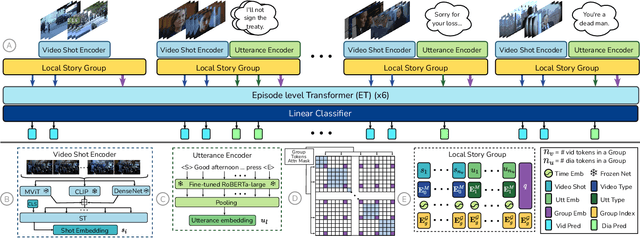

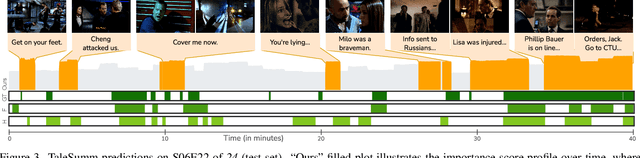

Abstract:We introduce multimodal story summarization by leveraging TV episode recaps - short video sequences interweaving key story moments from previous episodes to bring viewers up to speed. We propose PlotSnap, a dataset featuring two crime thriller TV shows with rich recaps and long episodes of 40 minutes. Story summarization labels are unlocked by matching recap shots to corresponding sub-stories in the episode. We propose a hierarchical model TaleSumm that processes entire episodes by creating compact shot and dialog representations, and predicts importance scores for each video shot and dialog utterance by enabling interactions between local story groups. Unlike traditional summarization, our method extracts multiple plot points from long videos. We present a thorough evaluation on story summarization, including promising cross-series generalization. TaleSumm also shows good results on classic video summarization benchmarks.

How you feelin'? Learning Emotions and Mental States in Movie Scenes

Apr 12, 2023

Abstract:Movie story analysis requires understanding characters' emotions and mental states. Towards this goal, we formulate emotion understanding as predicting a diverse and multi-label set of emotions at the level of a movie scene and for each character. We propose EmoTx, a multimodal Transformer-based architecture that ingests videos, multiple characters, and dialog utterances to make joint predictions. By leveraging annotations from the MovieGraphs dataset, we aim to predict classic emotions (e.g. happy, angry) and other mental states (e.g. honest, helpful). We conduct experiments on the 10 and 25 most frequently occurring labels, and a mapping that clusters 181 labels to 26. Ablation studies and comparison against adapted state-of-the-art emotion recognition approaches show the effectiveness of EmoTx. Analyzing EmoTx's self-attention scores reveals that expressive emotions often look at character tokens while other mental states rely on video and dialog cues.

Algorithms for screening of Cervical Cancer: A chronological review

Nov 02, 2018

Abstract:Various algorithms and methodologies are used for automated screening of cervical cancer by segmenting and classifying cervical cancer cells into different categories. This study presents a critical review of published research papers that integrate AI methods into cervical cancer screening, analyzing the different approaches in terms of typical criteria such as dataset size, drawbacks, and accuracy. An attempt has been made to furnish the reader with an insight into machine learning algorithms such as SVM (Support Vector Machines), GLCM (Gray Level Co-occurrence Matrix), k-NN (k-Nearest Neighbours), MARS (Multivariate Adaptive Regression Splines), CNNs (Convolutional Neural Networks), spatial fuzzy clustering algorithms, PNNs (Probabilistic Neural Networks), Genetic Algorithm, RFT (Random Forest Trees), C5.0, CART (Classification and Regression Trees) and Hierarchical clustering algorithm for feature extraction, cell segmentation and classification. This paper also covers the publicly available datasets related to cervical cancer. It presents a holistic, chronologically ordered review of the computational methods that have evolved over time for detecting malignant cells.
