Dezső Ribli

aiMotive Dataset: A Multimodal Dataset for Robust Autonomous Driving with Long-Range Perception

Nov 17, 2022

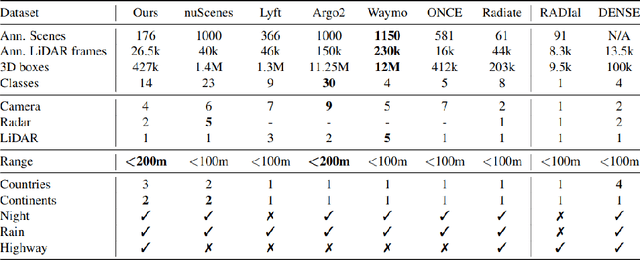

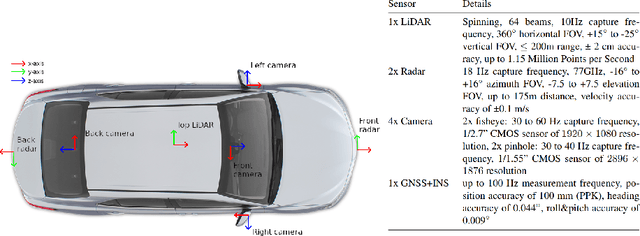

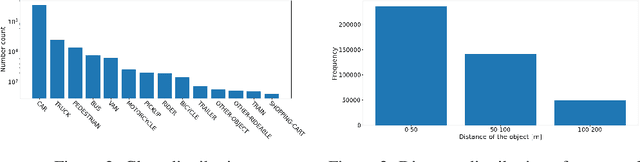

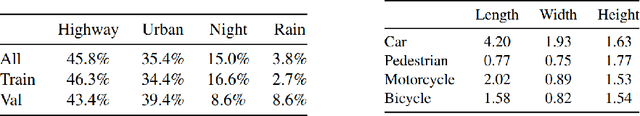

Abstract: Autonomous driving is a popular research area within the computer vision research community. Since autonomous vehicles are highly safety-critical, ensuring robustness is essential for real-world deployment. While several public multimodal datasets are accessible, they mainly comprise two sensor modalities (camera, LiDAR) which are not well suited for adverse weather. In addition, they lack far-range annotations, making it harder to train neural networks that are the base of a highway assistant function of an autonomous vehicle. Therefore, we introduce a multimodal dataset for robust autonomous driving with long-range perception. The dataset consists of 176 scenes with synchronized and calibrated LiDAR, camera, and radar sensors covering a 360-degree field of view. The collected data was captured in highway, urban, and suburban areas during daytime, night, and rain and is annotated with 3D bounding boxes with consistent identifiers across frames. Furthermore, we trained unimodal and multimodal baseline models for 3D object detection. Data are available at https://github.com/aimotive/aimotive_dataset.

Detecting and classifying lesions in mammograms with Deep Learning

Nov 09, 2017

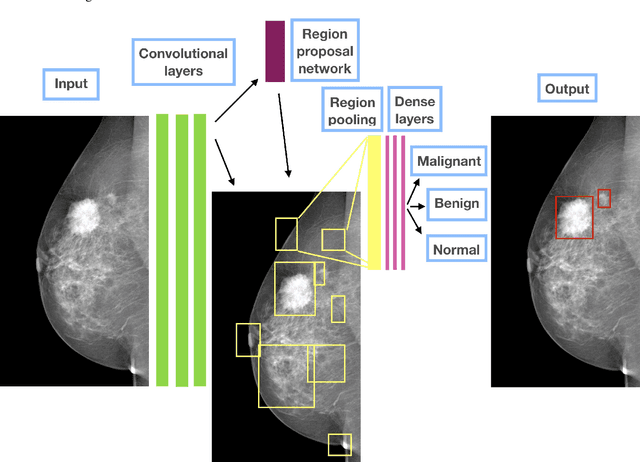

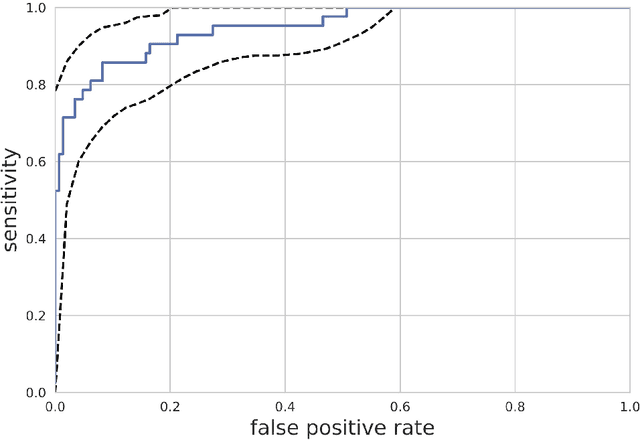

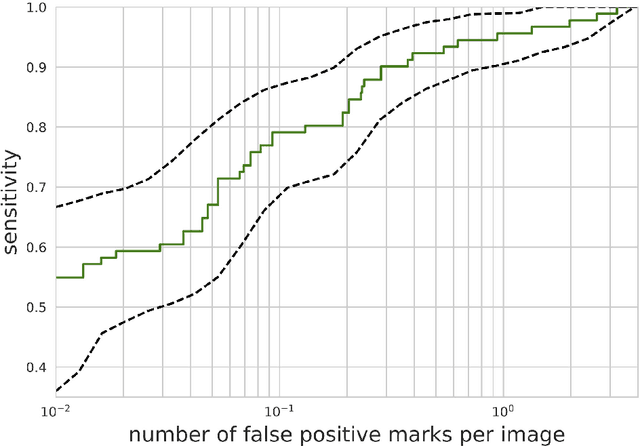

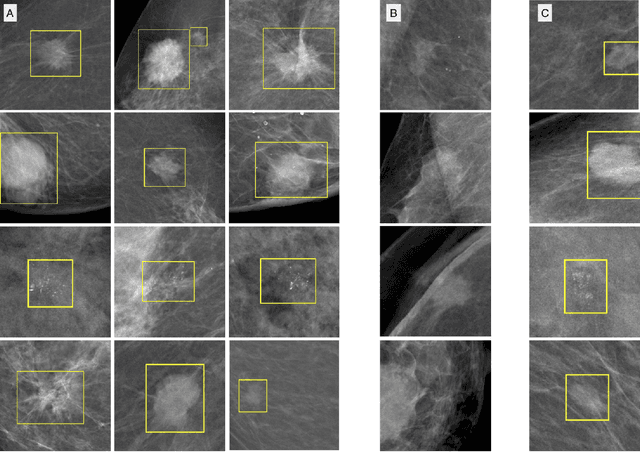

Abstract: In the last two decades, Computer Aided Diagnostics (CAD) systems have been developed to help radiologists analyze screening mammograms. The reported benefits of current CAD technologies appear to be contradictory, and these systems should be improved to be ultimately considered useful. Since 2012, deep convolutional neural networks (CNNs) have been a tremendous success in image recognition, reaching human performance. These methods have greatly surpassed the traditional approaches, which are similar to currently used CAD solutions. Deep CNNs have the potential to revolutionize medical image analysis. We propose a CAD system based on one of the most successful object detection frameworks, Faster R-CNN. The system detects and classifies malignant or benign lesions on a mammogram without any human intervention. The proposed method sets the state-of-the-art classification performance on the public INbreast database, with AUC = 0.95. The approach described here achieved 2nd place in the Digital Mammography DREAM Challenge with AUC = 0.85. When used as a detector, the system reaches high sensitivity with very few false positive marks per image on the INbreast dataset. Source code, the trained model, and an OsiriX plugin are available online at https://github.com/riblidezso/frcnn_cad.
