Dezhi Han

A Reinforcement Learning-based Offensive semantics Censorship System for Chatbots

Jul 13, 2022

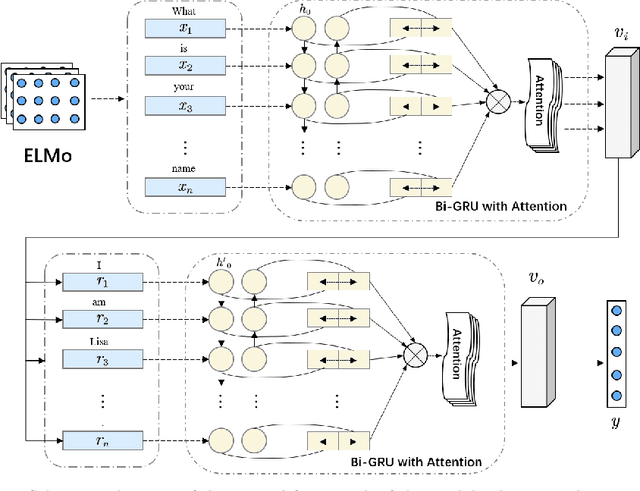

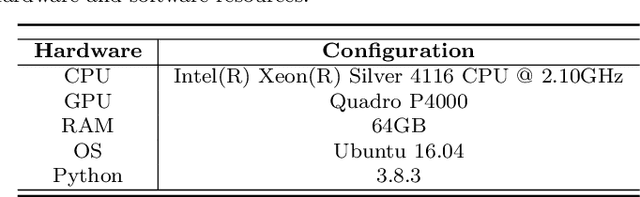

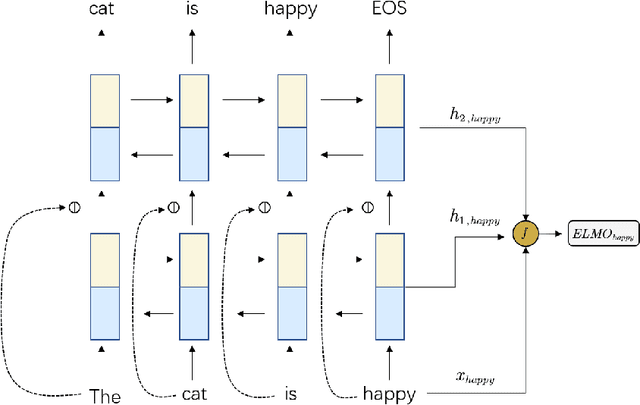

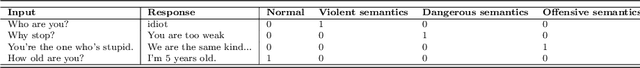

Abstract: The rapid development of artificial intelligence (AI) technology has brought large-scale AI applications to market and into practical use. However, while productized AI has brought people many conveniences, it has also exposed many security issues. In particular, attacks that exploit the online learning vulnerabilities of chatbots occur frequently. This paper therefore proposes a reinforcement learning-based semantics censorship system for chatbots, composed of two main parts: an offensive semantics censorship model and a semantics purification model. The offensive semantics censorship model uses the context of the user's input sentences to detect rapidly evolving offensive semantics and to respond to them. The semantics purification model addresses the case where a chatbot model has already been contaminated by a large amount of offensive semantics: it uses a reinforcement learning algorithm to correct the offensive replies the model has learned, rather than rolling the model back to an earlier version. In addition, integrating a once-through learning approach accelerates semantics purification while reducing its impact on reply quality. Experimental results show that the proposed approach reduces the probability that the chat model generates offensive replies, and that integrating the few-shot learning algorithm speeds up training while effectively slowing the decline in BLEU scores.
