Denton Bobeldyk

Crafting Generative Art through Genetic Improvement: Managing Creative Outputs in Diverse Fitness Landscapes

Jul 29, 2024

Abstract: Generative art is a rules-driven approach to creating artistic outputs in various mediums. For example, a fluid simulation can govern the flow of colored pixels across a digital display, or a rectangle placement algorithm can yield a Mondrian-style painting. Previously, we investigated how genetic improvement, a sub-field of genetic programming, can automatically create and optimize generative art drawing programs. One challenge of applying genetic improvement to generative art is defining fitness functions and their interaction in a many-objective evolutionary algorithm such as Lexicase selection. Here, we assess the impact of each fitness function in terms of its individual effect on generated images, the characteristics of generated programs, and the impact of bloat in this specific domain. Furthermore, we have added a fitness function that uses a classifier to mimic a human's assessment of whether an output is considered "art." This classifier is trained on a dataset of input images resembling the glitch art aesthetic that we aim to create. Our experimental results show that with few fitness functions, individual generative techniques sweep across populations. Moreover, we found that compositions tended to be driven by one technique with our current fitness functions. Lastly, we show that our classifier is best suited for filtering out noisy images, ideally leading towards more outputs relevant to user preference.
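To illustrate how several fitness functions can interact under lexicase selection, the following is a minimal sketch of the standard selection step, not the authors' implementation. The function name `lexicase_select`, the toy population, and the convention that each fitness function returns a score where higher is better are all assumptions made for the example.

```python
import random


def lexicase_select(population, fitness_functions, rng=random):
    """Select one parent with lexicase selection (illustrative sketch).

    Assumption: each fitness function maps an individual to a number,
    and higher scores are better.
    """
    candidates = list(population)
    cases = list(fitness_functions)
    rng.shuffle(cases)  # consider the fitness functions in random order

    for case in cases:
        if len(candidates) == 1:
            break
        scores = [case(ind) for ind in candidates]
        best = max(scores)
        # Keep only the individuals that are best on this fitness function.
        candidates = [ind for ind, s in zip(candidates, scores) if s == best]

    # Break any remaining tie at random.
    return rng.choice(candidates)


# Hypothetical toy data: each individual is a pair of objective scores.
toy_population = [(1, 0), (0, 1), (1, 1)]
toy_fitness = [lambda ind: ind[0], lambda ind: ind[1]]
parent = lexicase_select(toy_population, toy_fitness)
```

Because the order of fitness functions is reshuffled for every selection event, an individual that excels on one function can still be chosen as a parent even if it is weak on the others.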

Predicting Gender and Race from Near Infrared Iris and Periocular Images

Jul 28, 2018

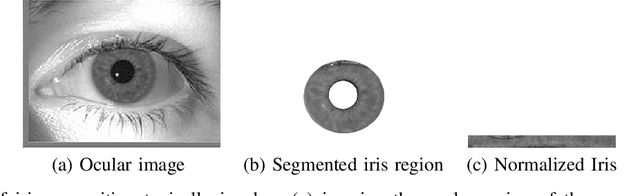

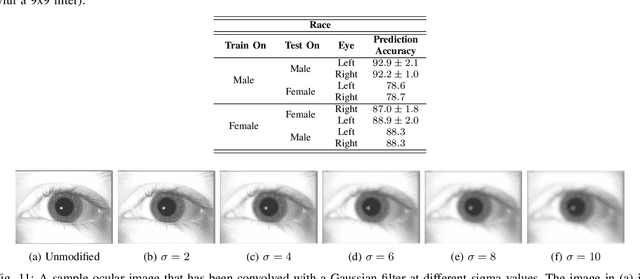

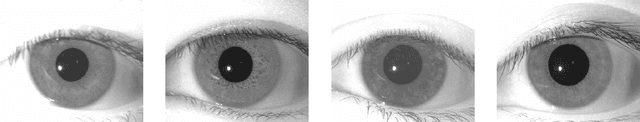

Abstract: Recent research has explored the possibility of automatically deducing information such as gender, age and race of an individual from their biometric data. While the face modality has been extensively studied in this regard, relatively less research has been conducted in the context of the iris modality. In this paper, we first review the medical literature to establish a biological basis for extracting gender and race cues from the iris. Then, we demonstrate that it is possible to use simple texture descriptors, like BSIF (Binarized Statistical Image Feature) and LBP (Local Binary Patterns), to extract gender and race attributes from an NIR ocular image used in a typical iris recognition system. The proposed method predicts race and gender from a single eye image with an accuracy of 86% and 90%, respectively. In addition, the following analyses are conducted: (a) the role of different parts of the ocular region on attribute prediction; (b) the influence of gender on race prediction, and vice-versa; (c) the impact of eye color on gender and race prediction; (d) the impact of image blur on gender and race prediction; (e) the generalizability of the method across different datasets, i.e., cross-dataset performance; and (f) the consistency of prediction performance across the left and right eyes.
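As a rough illustration of what a simple texture descriptor such as LBP computes, the sketch below implements the basic 8-neighbour, 3x3 variant in plain Python. The abstract does not state which LBP configuration the paper uses, so the neighbourhood, the bit ordering, and the helper names `lbp_codes` and `lbp_histogram` are assumptions for this example only.

```python
def lbp_codes(image):
    """Compute basic 3x3 LBP codes for the interior pixels of a grayscale image.

    `image` is a list of equal-length rows of numbers. Assumption: a neighbour
    contributes a 1 bit when its value is >= the centre pixel's value.
    """
    # Neighbours visited clockwise, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    codes = []
    for r in range(1, rows - 1):
        row_codes = []
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            row_codes.append(code)
        codes.append(row_codes)
    return codes


def lbp_histogram(image):
    """Build a 256-bin histogram of LBP codes, usable as a texture feature vector."""
    hist = [0] * 256
    for row in lbp_codes(image):
        for code in row:
            hist[code] += 1
    return hist
```

A histogram like this, computed over an ocular image or its sub-regions, is the kind of feature vector that could then be passed to a classifier for attribute prediction.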
