Predicting Gender and Race from Near Infrared Iris and Periocular Images


Jul 28, 2018

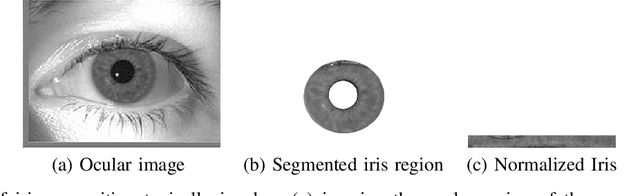

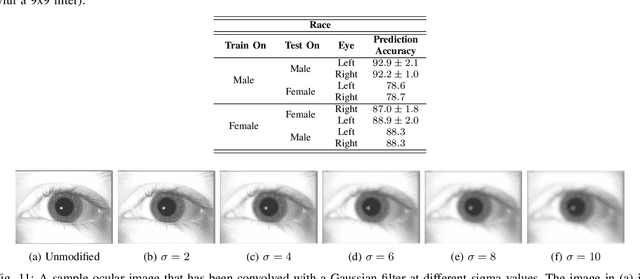

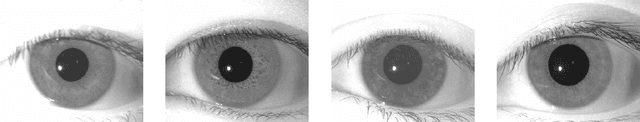

Recent research has explored the possibility of automatically deducing information such as the gender, age and race of an individual from their biometric data. While the face modality has been extensively studied in this regard, comparatively little research has addressed the iris modality. In this paper, we first review the medical literature to establish a biological basis for extracting gender and race cues from the iris. Then, we demonstrate that it is possible to use simple texture descriptors, like BSIF (Binarized Statistical Image Feature) and LBP (Local Binary Patterns), to extract gender and race attributes from a near-infrared (NIR) ocular image used in a typical iris recognition system. The proposed method predicts race and gender from a single eye image with an accuracy of 86% and 90%, respectively. In addition, the following analyses are conducted: (a) the role of different parts of the ocular region in attribute prediction; (b) the influence of gender on race prediction, and vice versa; (c) the impact of eye color on gender and race prediction; (d) the impact of image blur on gender and race prediction; (e) the generalizability of the method across different datasets, i.e., cross-dataset performance; and (f) the consistency of prediction performance across the left and right eyes.
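To make the idea of a "simple texture descriptor" concrete, here is a minimal sketch of the basic 3x3 LBP operator followed by a normalised histogram. It is an illustration only, not the authors' pipeline: the abstract does not give the neighbourhood size, the tessellation of the ocular image, or the classifier, so the 8-neighbour layout, the `>=` comparison rule, and the function names `lbp_codes` and `lbp_histogram` are all assumptions.

```python
from collections import Counter

# Neighbour offsets (row, col), clockwise from the top-left pixel.
# Assumption: the basic 3x3, 8-neighbour LBP variant.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(image):
    """Return the 8-bit LBP code of every interior pixel of a 2D grayscale image.

    `image` is a list of equal-length rows of intensities (hypothetical input
    format chosen to keep the sketch dependency-free).
    """
    rows, cols = len(image), len(image[0])
    codes = []
    for r in range(1, rows - 1):
        row_codes = []
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(OFFSETS):
                # Set the bit when the neighbour is at least as bright as the centre.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            row_codes.append(code)
        codes.append(row_codes)
    return codes


def lbp_histogram(image):
    """Normalised 256-bin histogram of LBP codes, usable as a texture feature vector."""
    counts = Counter(code for row in lbp_codes(image) for code in row)
    total = sum(counts.values())
    return [counts.get(k, 0) / total for k in range(256)]
```

In a setup like the one the abstract describes, a feature vector of this kind, computed from the NIR ocular image, would be passed to a classifier trained to predict gender or race. The choice of classifier is left open here because the abstract does not name one.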
